Inference-Time Compute Scaling Methods to Improve Reasoning Models

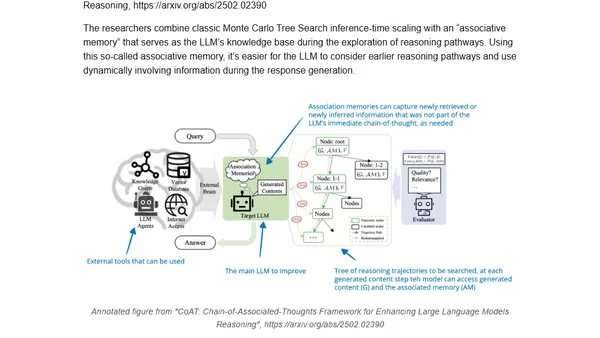

This article details recent research on improving LLM reasoning, focusing on inference-time compute scaling techniques. It covers methods such as Test-Time Preference Optimization, Chain-of-Associated-Thoughts, and Step Back to Leap Forward, and explains how spending more compute during inference can improve performance on complex tasks such as coding and math problems without retraining the base model.
