How to Fine-Tune LLMs in 2024 with Hugging Face

A practical guide to fine-tuning open-source large language models (LLMs) using Hugging Face's TRL and Transformers libraries in 2024.

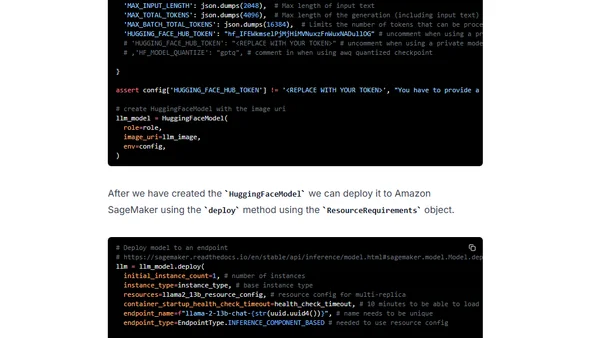

Guide to scaling LLM inference on Amazon SageMaker using new multi-replica endpoints for improved throughput and cost efficiency.

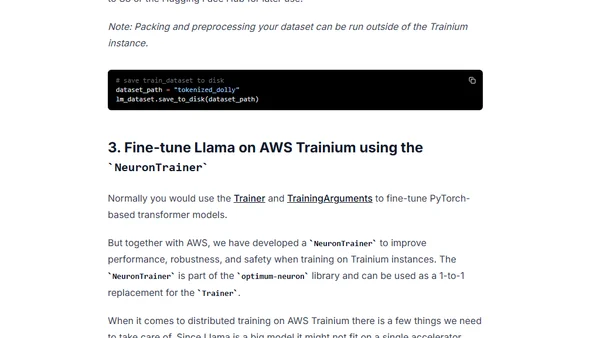

A technical tutorial on fine-tuning the Llama 2 7B large language model using AWS Trainium instances and Hugging Face libraries.

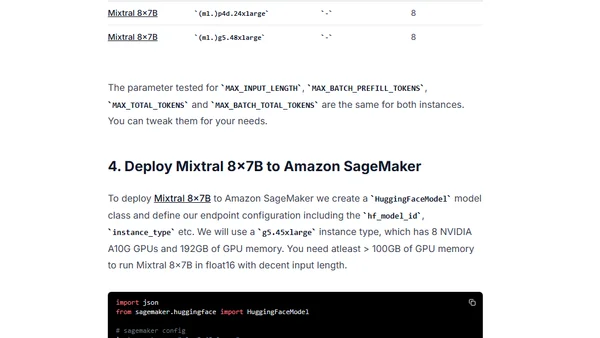

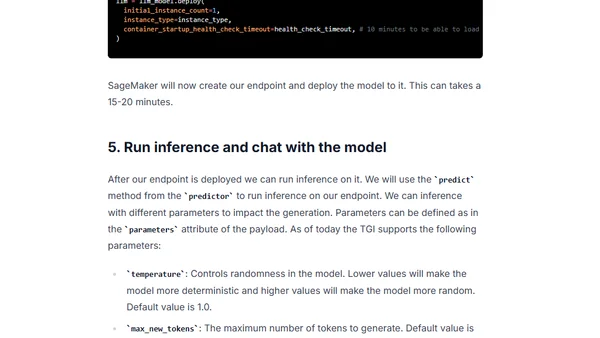

A technical guide on deploying the Mixtral 8x7B open-source LLM from Mistral AI to Amazon SageMaker using the Hugging Face LLM DLC.

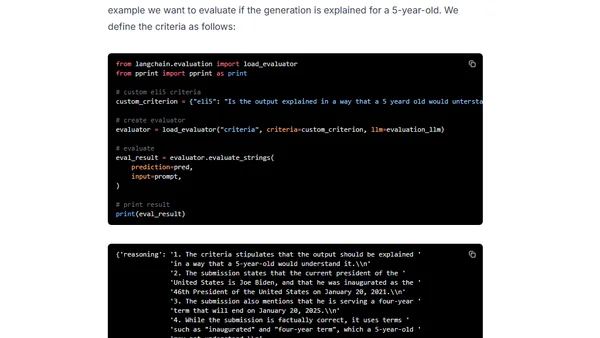

A hands-on guide to evaluating LLMs and RAG systems using LangChain and Hugging Face, covering criteria-based and pairwise evaluation methods.

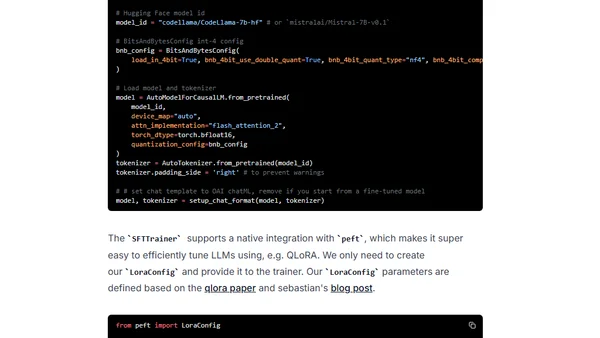

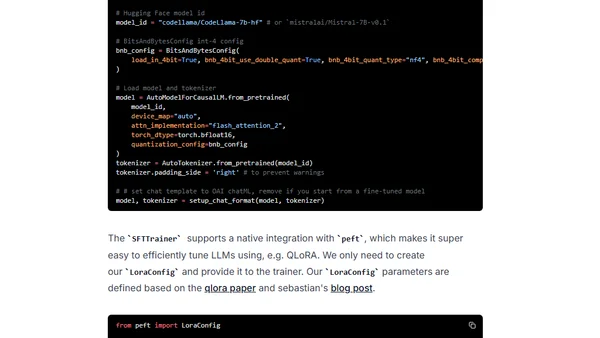

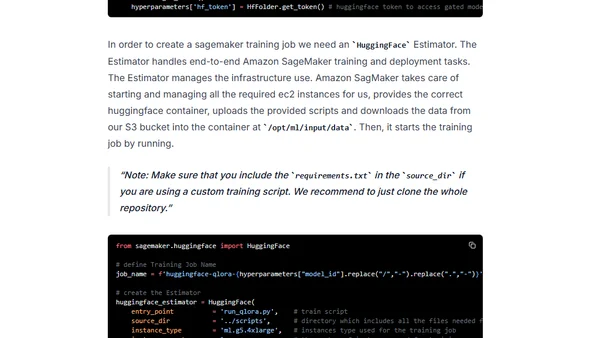

A technical guide on fine-tuning the Mistral 7B large language model using QLoRA and deploying it on Amazon SageMaker with Hugging Face tools.

A technical guide on deploying the Falcon 180B open-source large language model to Amazon SageMaker using the Hugging Face LLM DLC.

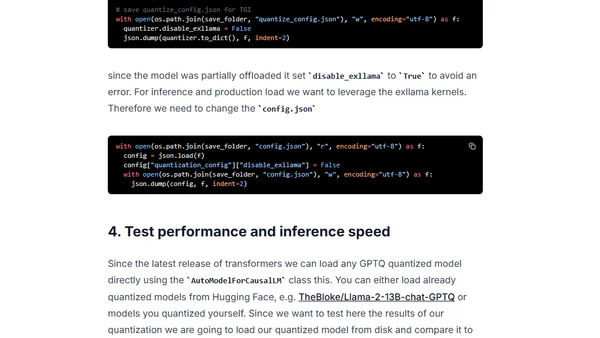

A guide to using GPTQ quantization with Hugging Face Optimum to compress open-source LLMs for efficient deployment on smaller hardware.

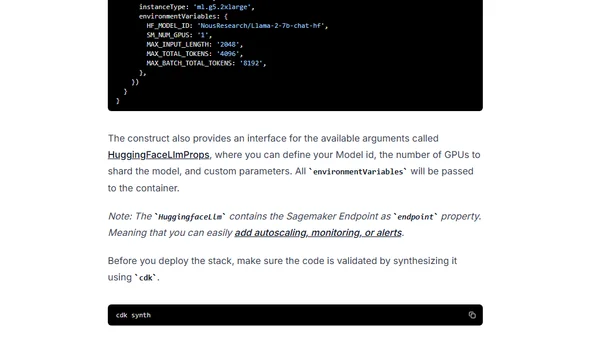

A technical guide on deploying open-source LLMs like Llama 2 using Infrastructure as Code with AWS CDK and the Hugging Face LLM construct.

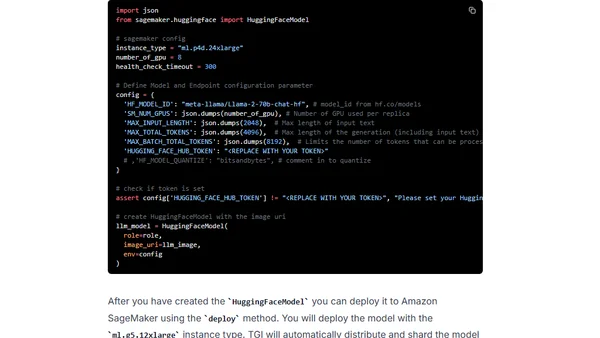

A technical guide on deploying Meta's Llama 2 large language models (7B, 13B, 70B) on Amazon SageMaker using the Hugging Face LLM DLC.

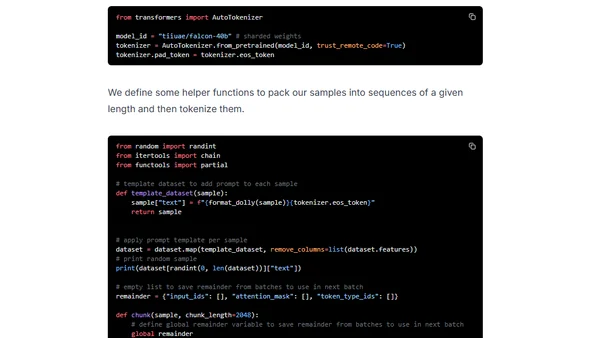

A technical guide on using QLoRA to efficiently fine-tune the Falcon 40B large language model on Amazon SageMaker.

A guide to deploying open-source large language models (LLMs) like Falcon using Hugging Face's managed Inference Endpoints service.

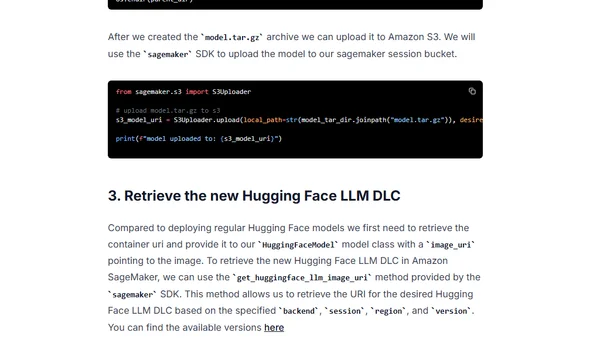

A technical guide on deploying open-source large language models (LLMs) from Amazon S3 to Amazon SageMaker using Hugging Face's LLM Inference Container within a VPC.

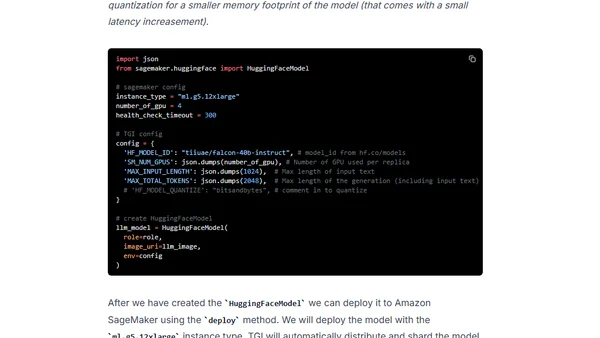

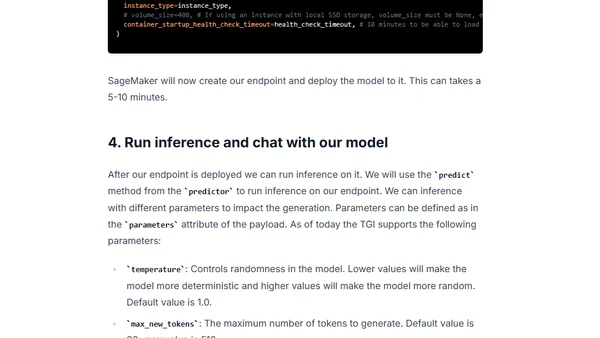

A technical guide on deploying the open-source Falcon 7B and 40B large language models to Amazon SageMaker using the Hugging Face LLM Inference Container.

Guide to deploying open-source LLMs like BLOOM and Open Assistant to Amazon SageMaker using Hugging Face's new LLM Inference Container.

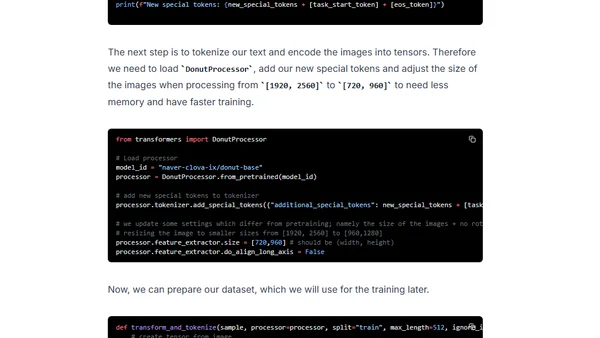

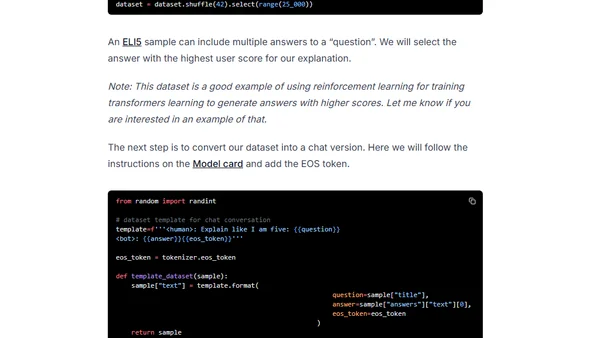

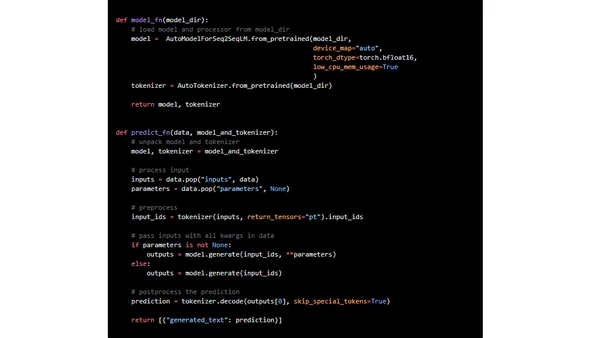

Tutorial on fine-tuning and deploying the Donut model for OCR-free document understanding using Hugging Face and Amazon SageMaker.

A technical tutorial on fine-tuning a 20B+ parameter LLM using PyTorch FSDP and Hugging Face on Amazon SageMaker's multi-GPU infrastructure.

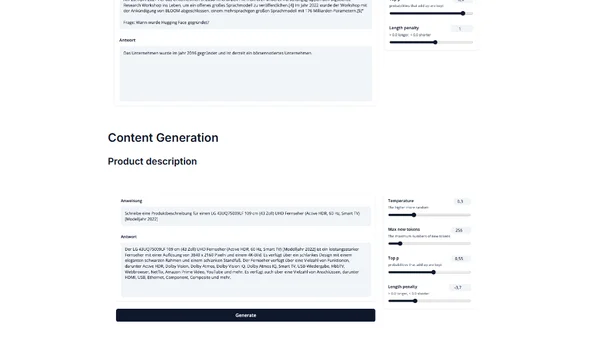

Introduces IGEL, an instruction-tuned German large language model based on BLOOM, for NLP tasks like translation and QA.

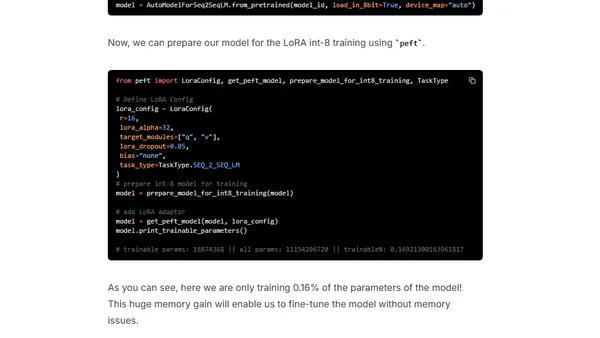

A technical guide on fine-tuning the large FLAN-T5 XXL model efficiently using LoRA and Hugging Face libraries on a single GPU.

A technical guide on deploying Google's FLAN-UL2 20B large language model for real-time inference using Amazon SageMaker and Hugging Face.