Quoting Andrej Karpathy

Explores Andrej Karpathy's concept of Software 2.0, where programs are produced by specifying objectives and optimizing with gradient descent rather than written by hand, with a focus on task verifiability.

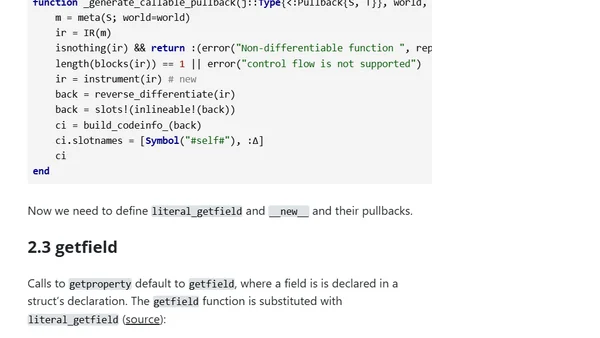

Extends a Julia automatic differentiation library (MicroGrad.jl) to handle map, getfield, and anonymous functions, enabling gradient descent for polynomial fitting.

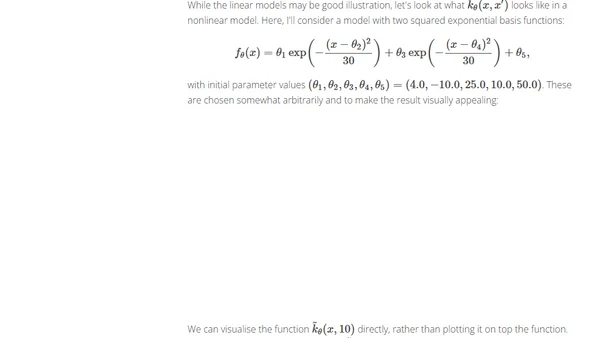

Explains the Neural Tangent Kernel concept through simple 1D regression examples to illustrate how neural networks evolve during training.

A guide to implementing logistic regression with gradient descent in JavaScript to solve classification problems.

A guide to implementing multivariate linear regression with gradient descent in JavaScript, including feature scaling.

A guide to implementing vectorized gradient descent in JavaScript for machine learning, improving efficiency over unvectorized approaches.

Explores methods to optimize the gradient descent algorithm in JavaScript, focusing on selecting the right learning rate for convergence.

Explains polynomial regression as a remedy for underfitting in machine learning when the data follows a nonlinear relationship.

A guide to implementing linear regression with gradient descent in JavaScript, using a housing price prediction example.

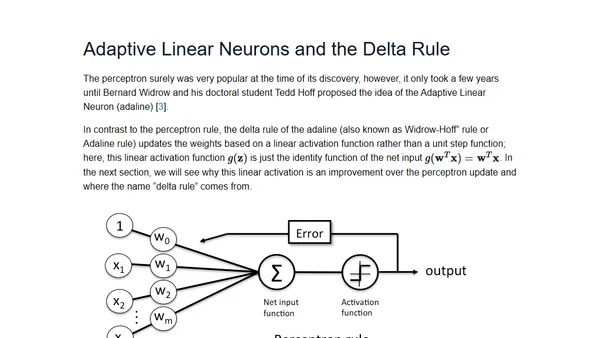

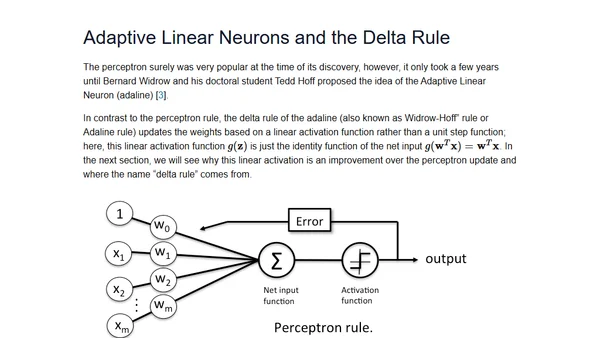

An introduction to single-layer neural networks, covering the Perceptron and Adaline models, with Python implementations and gradient descent.

An introduction to single-layer neural networks, covering the history, perceptrons, adaptive linear neurons, and the gradient descent algorithm with Python implementations.