On the Consumption of AI-Generated Content at Scale

A researcher explores the cognitive impact of consuming AI-generated content, discussing signal degradation and verification challenges in the AI era.

Shreya Shankar is a PhD researcher sharing insights on AI, machine learning, HCI, and data science. Her work explores LLM applications, AI evaluation, and practical ML engineering challenges, reaching a wide audience across blogs and social media.

17 articles from this blog

A researcher explores the cognitive impact of consuming AI-generated content, discussing signal degradation and verification challenges in the AI era.

A defense of systematic AI evaluation (evals) in development, arguing they are essential for measuring application quality and improving models.

A technical writer's analysis of common pitfalls in LLM-generated writing and practical strategies for using AI tools effectively while maintaining quality.

A framework for building data flywheels to dynamically improve LLM applications through continuous evaluation, monitoring, and feedback loops.

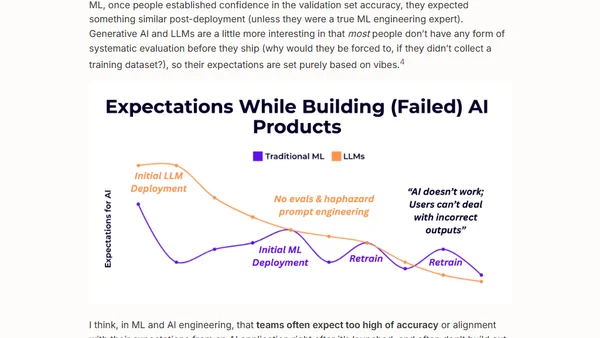

A reflection on how LLMs have simplified AI prototyping compared to traditional ML, but may lead to similar deployment disappointments.

A developer compares 8 LLMs on a custom retrieval task using medical transcripts, analyzing performance on questions ranging from simple to complex.

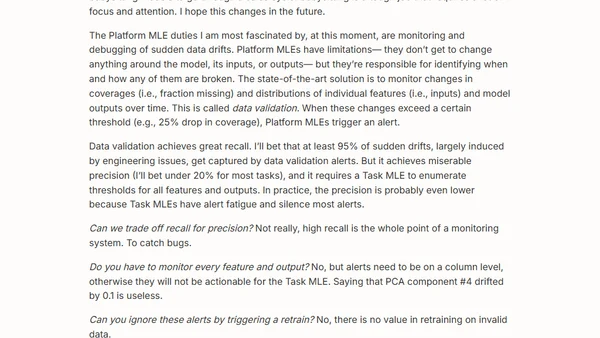

A PhD student reflects on the complexities of ML engineering, distinguishing between Task MLEs and Platform MLEs, and shares practical lessons from production systems.

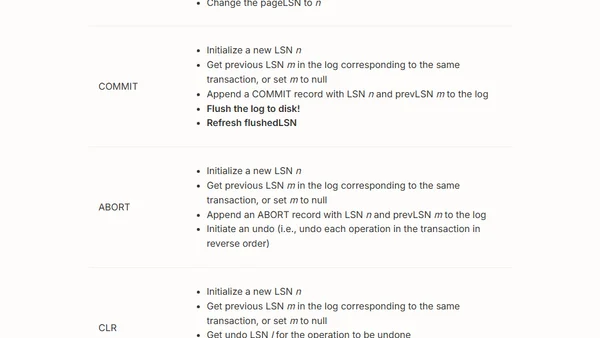

Explains the ARIES database transaction recovery algorithm, covering its motivation, data structures, and how it ensures atomicity and durability.

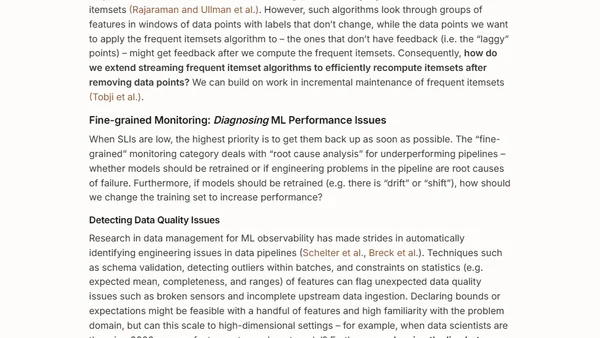

Final part of a series proposing a research agenda for ML monitoring, focusing on data management challenges like metric computation and real-time SLI tracking.

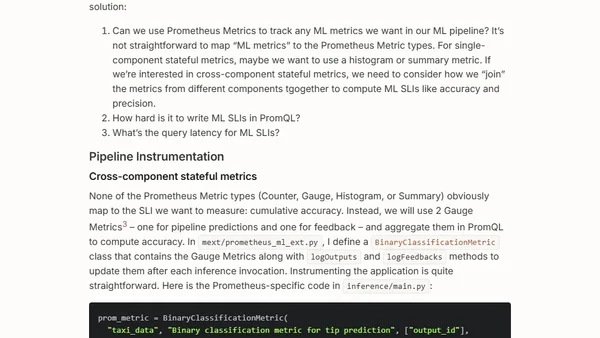

Explores the challenges of using Prometheus for ML pipeline monitoring, highlighting terminology issues and technical inadequacies.

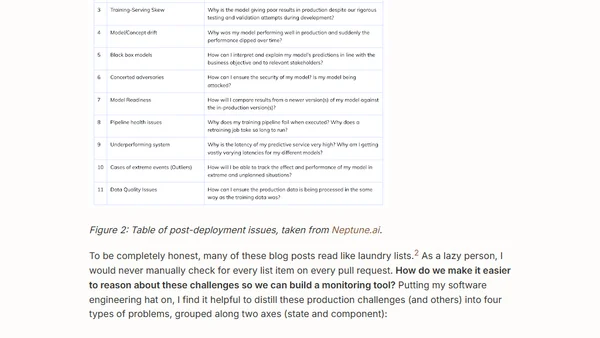

Analyzes post-deployment ML issues and categorizes them to advocate for better monitoring tools, using Zillow's case as an example.

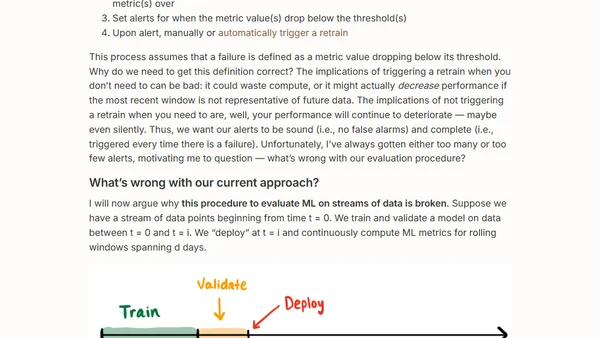

A critique of current ML monitoring tools and a proposal for rethinking evaluation in streaming machine learning systems.

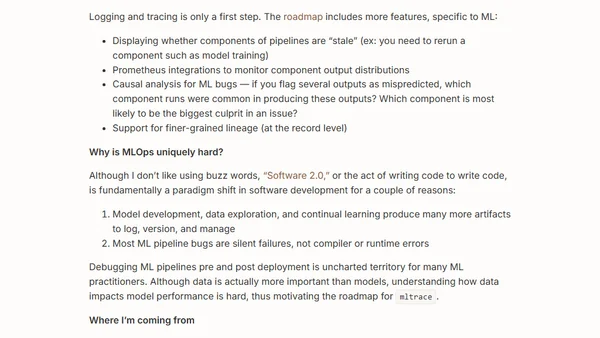

Introduces mltrace, an open-source lineage and tracing tool for debugging and maintaining production machine learning pipelines.

Argues that the choice of machine learning library (such as PyTorch or TensorFlow) matters less than building robust data and production pipelines.

Critiques the 'AI Savior' mentality in machine learning, in which practitioners overestimate AI's ability to solve complex problems without deep domain expertise.

A personal analysis of the pros and cons CS grads face when choosing between pursuing a PhD and entering the tech industry, focusing on machine learning careers.

A machine learning engineer reflects on the gap between ML research and real-world production, emphasizing the critical importance of data over models.