Never Been Easier to Learn

Discusses how LLMs like ChatGPT can boost self-learning by helping learners understand problems and verify their solutions, making new skills easier to acquire.

Saeed Esmaili is a data scientist in Amsterdam working at Spotify, focused on developer productivity, platform strategy, LLMs, and recommendation systems, sharing notes and insights along the way.

31 articles from this blog


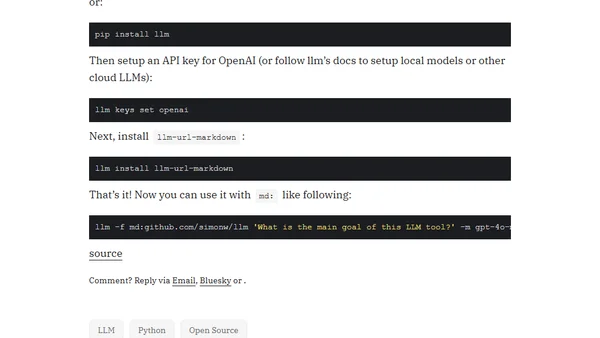

Introduces llm-url-markdown, a new plugin for Simon Willison's llm CLI tool that fetches web content as Markdown for use as LLM context fragments.

Part two of a series on building a personal recommendation system, covering data collection from Pocket and content extraction with the Jina Reader API.

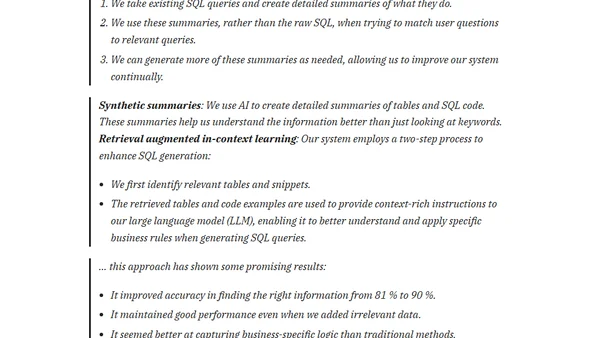

Explains a technique that uses AI-generated summaries of SQL queries to improve the accuracy of LLM-based text-to-SQL systems.

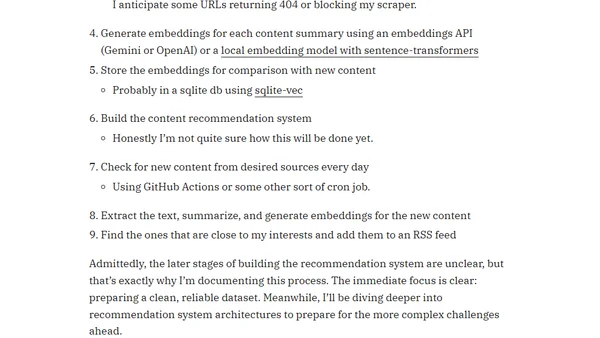

A developer documents the first steps in building a personalized content recommendation system from saved articles, using text embeddings and recommendation algorithms.

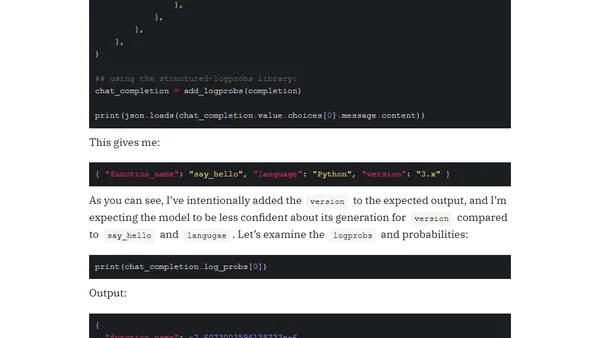

Explains how to extract logprobs from OpenAI's structured JSON outputs with the structured-logprobs Python library, giving better insight into how confident the LLM is in each field.

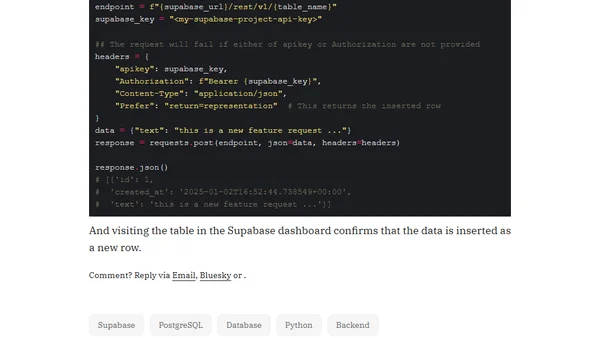

A tutorial on using Python to insert data into a Supabase PostgreSQL table via its REST API.

Introduces Label Studio, an open-source tool for annotating text, image, audio, and video data for AI/ML projects, highlighting its ease of use and main features.

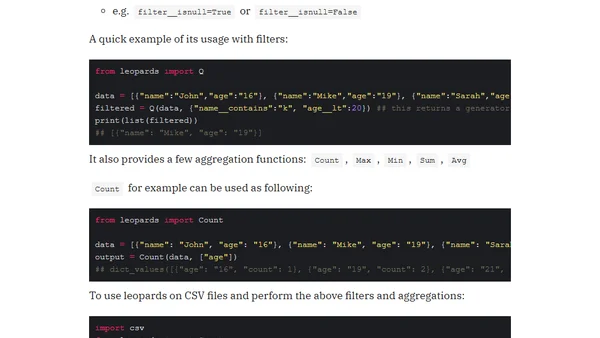

Introduces the 'leopards' Python library for filtering and aggregating lists, offering a lightweight alternative to pandas for basic data operations.

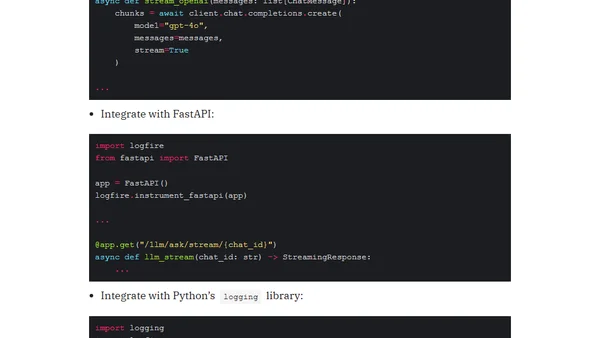

Introduces Logfire, Pydantic's new observability tool for Python, which integrates easily with OpenAI LLM calls, FastAPI, and standard logging.

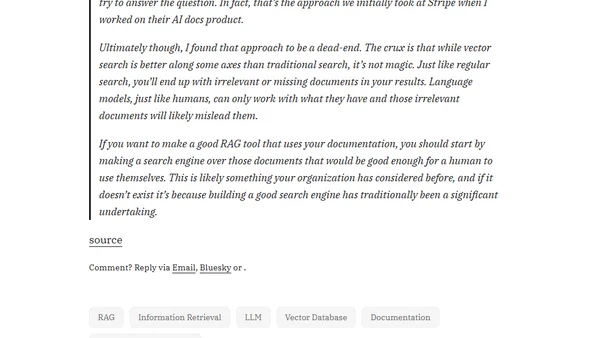

Argues that building a good search engine matters more for effective RAG than simply adding a vector database, since poor retrieval feeds the LLM misleading context.

Shows how to use the OpenAI Python library to interact with Google's Gemini LLM for text generation, images, and more.

Explains the concept and purpose of input masking in LLM fine-tuning, using a practical example with Axolotl for a code PR classification task.

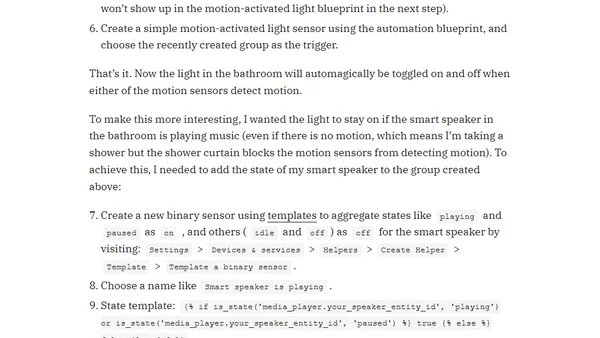

A guide to using Home Assistant groups so that multiple motion sensors can control a single smart light, including how to factor in a media player's state.

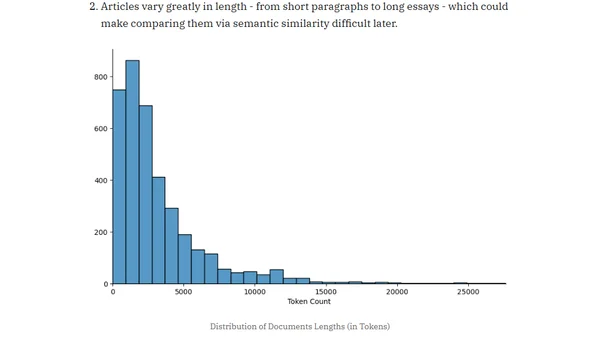

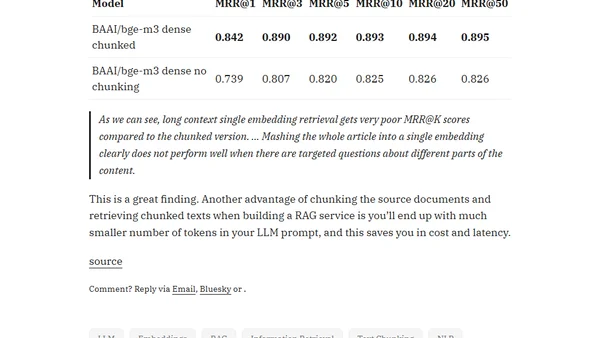

An experiment comparing the retrieval performance of chunked and non-chunked documents using long-context embedding models such as BGE-M3.

Practical lessons from integrating LLMs into a product, focusing on prompt design pitfalls like over-specification and handling null responses.

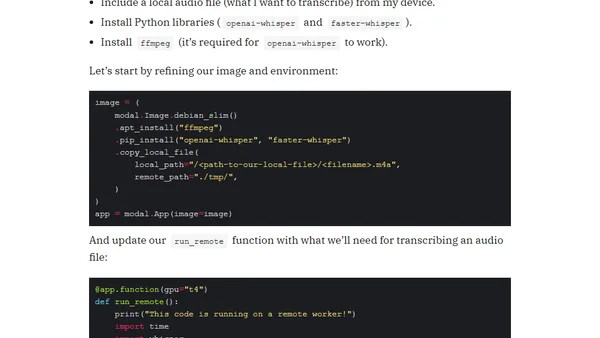

A guide to running Python code on serverless GPU instances using Modal.com for faster machine learning inference, demonstrated with a speech-to-text example.

Argues against using LLMs to generate SQL queries for novel business questions, stressing that human analysts are still needed where precision matters.

Discusses effective anti-spam techniques like shadowbanning, adding friction, and filtering bot traffic, based on a Hacker News thread.

Explores how preparation and pre-thinking can compensate for a lack of quick-wittedness in meetings and technical discussions.