Noteworthy LLM Research Papers of 2024

A curated list of 12 influential LLM research papers from 2024, highlighting key advancements in AI and machine learning.

SebastianRaschka.com is the personal blog of Sebastian Raschka, PhD, an LLM research engineer whose work bridges academia and industry in AI and machine learning. On his blog and in its notes section, he publishes in-depth, well-documented articles on topics such as large language models (LLMs), reasoning models, machine learning in Python, neural networks, data science workflows, and deep learning architectures. Recent posts explore advanced themes such as “reasoning LLMs”, comparisons of modern open-weight transformer architectures, and guides to building, training, and analyzing neural networks and their internals.

103 articles from this blog

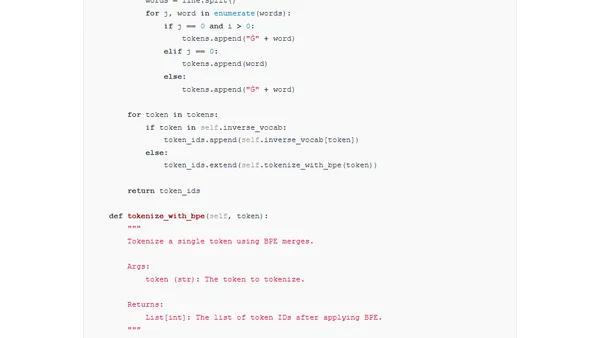

A step-by-step guide to implementing the Byte Pair Encoding (BPE) tokenizer from scratch, used in models like GPT and Llama.
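The core of BPE training, repeatedly merging the most frequent adjacent pair of symbols into a new symbol, can be sketched in plain Python. This is a simplified, character-level illustration, not the guide's implementation: it assumes the whole input is one sequence, starts from characters rather than bytes, breaks ties by first occurrence, and uses a hypothetical stopping rule (stop once no pair occurs more than once). All function names are hypothetical.

```python
from collections import Counter


def most_frequent_pair(tokens):
    """Return (pair, count) for the most frequent adjacent pair, or (None, 0)."""
    counts = Counter(zip(tokens, tokens[1:]))
    if not counts:
        return None, 0
    # max() keeps the first pair seen among ties (Counter preserves insertion order)
    pair = max(counts, key=counts.get)
    return pair, counts[pair]


def merge_pair(tokens, pair):
    """Replace non-overlapping occurrences of `pair`, scanning left to right."""
    merged, i = [], 0
    while i < len(tokens):
        if i + 1 < len(tokens) and (tokens[i], tokens[i + 1]) == pair:
            merged.append(tokens[i] + tokens[i + 1])
            i += 2
        else:
            merged.append(tokens[i])
            i += 1
    return merged


def train_bpe(text, num_merges):
    """Learn up to `num_merges` merge rules, starting from single characters."""
    tokens = list(text)
    merges = []
    for _ in range(num_merges):
        pair, count = most_frequent_pair(tokens)
        if count < 2:  # hypothetical stopping rule: nothing left worth merging
            break
        merges.append(pair)
        tokens = merge_pair(tokens, pair)
    return merges, tokens


def encode(text, merges):
    """Tokenize new text by replaying the learned merges in training order."""
    tokens = list(text)
    for pair in merges:
        tokens = merge_pair(tokens, pair)
    return tokens


merges, tokens = train_bpe("aaabdaaabac", num_merges=10)
print(merges)                   # [('a', 'a'), ('aa', 'a'), ('aaa', 'b')]
print(tokens)                   # ['aaab', 'd', 'aaab', 'a', 'c']
print(encode("aaabc", merges))  # ['aaab', 'c']
```

Production tokenizers such as GPT-2's work on bytes and first split text into word-like chunks with a regular expression, so merges never cross those chunk boundaries; this sketch omits both steps.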

A curated list of notable LLM and AI research papers published in 2024, providing a resource for those interested in the latest developments.

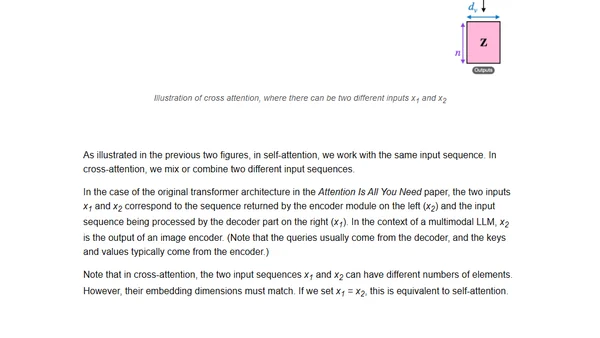

Explains how multimodal LLMs work, compares recent models like Llama 3.2, and outlines two main architectural approaches for building them.

A 3-hour coding workshop teaching how to implement, train, and use Large Language Models (LLMs) from scratch with practical examples.

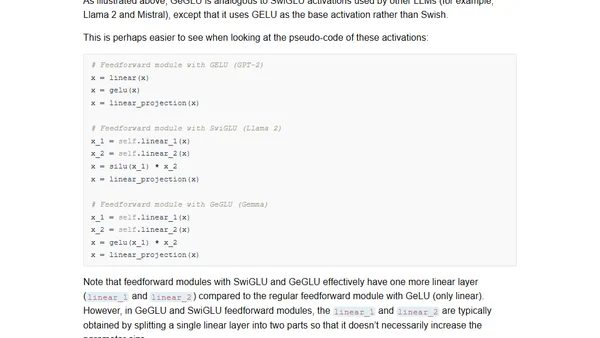

Analyzes the latest pre-training and post-training methodologies used in state-of-the-art LLMs like Qwen 2, Apple's models, Gemma 2, and Llama 3.1.

Explores recent research on instruction finetuning for LLMs, including a cost-effective method for generating synthetic training data from scratch.

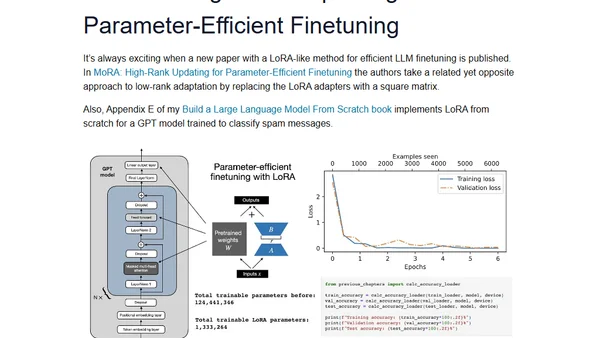

Analysis of new LLM research on instruction masking and LoRA finetuning methods, with practical insights for developers.
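Instruction masking usually means excluding the prompt (instruction) tokens from the training loss so that only the response tokens are scored. The sketch below assumes token IDs are already available and follows PyTorch's convention that a target of -100 is skipped by `torch.nn.CrossEntropyLoss` (its default `ignore_index`); the helper names are hypothetical, and whether masking actually helps is the question the research discussed in that article examines.

```python
IGNORE_INDEX = -100  # default ignore_index of torch.nn.CrossEntropyLoss


def make_training_pair(prompt_ids, response_ids, mask_instruction=True):
    """Build next-token (inputs, targets), optionally masking prompt targets."""
    ids = list(prompt_ids) + list(response_ids)
    inputs, targets = ids[:-1], ids[1:]
    if mask_instruction:
        # targets[i] is the token that follows inputs[i]; the first
        # len(prompt_ids) - 1 targets are still prompt tokens, so hide them.
        n_masked = max(len(prompt_ids) - 1, 0)
        targets = [IGNORE_INDEX] * n_masked + targets[n_masked:]
    return inputs, targets


def masked_mean(per_token_losses, targets):
    """Average per-token losses over positions whose target is not ignored."""
    kept = [loss for loss, t in zip(per_token_losses, targets) if t != IGNORE_INDEX]
    return sum(kept) / len(kept)


inputs, targets = make_training_pair([10, 11, 12], [20, 21])
print(inputs)   # [10, 11, 12, 20]
print(targets)  # [-100, -100, 20, 21]
print(masked_mean([1.0, 2.0, 3.0, 5.0], targets))  # 4.0
```

Setting `mask_instruction=False` keeps every target, which corresponds to training on the loss over both instruction and response tokens.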

A 1-hour presentation on the LLM development cycle, covering architecture, training, finetuning, and evaluation methods.

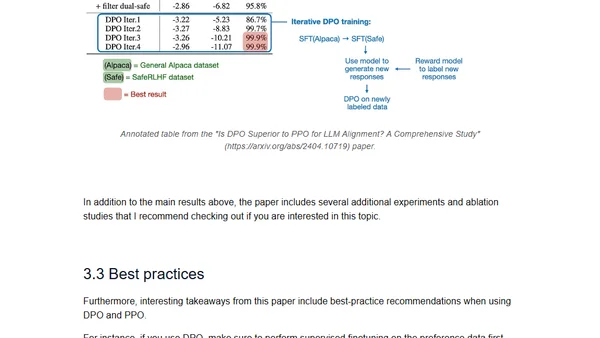

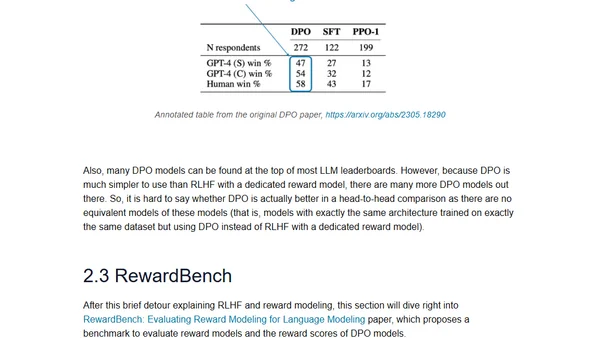

A technical review of April 2024's major open LLM releases (Mixtral, Llama 3, Phi-3, OpenELM) and a comparison of DPO vs PPO for LLM alignment.
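For reference, the DPO objective (Rafailov et al., 2023) measures how much the policy model, relative to a frozen reference model, raises the log-probability of the preferred response over the rejected one. The function below is a minimal per-example sketch, assuming the summed response log-probabilities from both models are already computed; the function names and the `beta=0.1` default are illustrative choices, not taken from the article.

```python
import math


def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    if x > 0:
        return x + math.log1p(math.exp(-x))
    return math.log1p(math.exp(x))


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """-log sigmoid(beta * (chosen log-ratio - rejected log-ratio))."""
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_logratio - rejected_logratio)
    # -log(sigmoid(m)) == log(1 + exp(-m)) == softplus(-m)
    return softplus(-margin)


# Policy identical to the reference: the loss is log(2), about 0.693
print(dpo_loss(-12.0, -15.0, -12.0, -15.0))
# Policy favors the chosen response more than the reference does: loss drops
print(dpo_loss(-10.0, -16.0, -12.0, -15.0) < math.log(2))  # True
```

Unlike PPO-based RLHF, this objective needs neither a separately trained reward model nor sampling from the policy during training, which is a large part of DPO's practical appeal.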

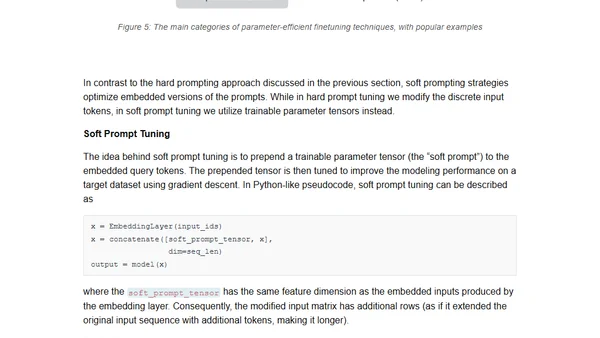

Explores methods for using and finetuning pretrained large language models, including feature-based approaches and parameter updates.

Analysis of recent AI research papers on continued pretraining for LLMs and reward modeling for RLHF, with insights into model updates and alignment.

A summary of February 2024 AI research, covering new open LLMs such as OLMo and Gemma, and a study on small, finetuned models for text summarization.

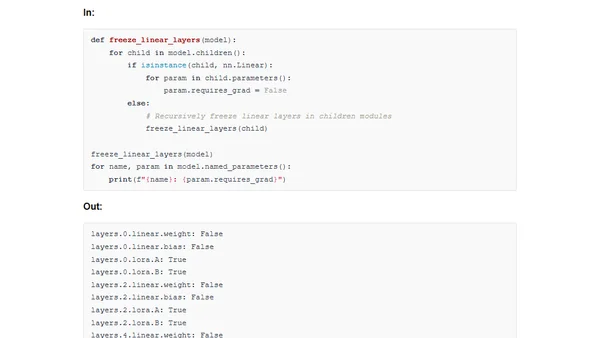

A guide to implementing LoRA and the new DoRA method from scratch in PyTorch for efficient model finetuning.
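DoRA (weight-decomposed low-rank adaptation) splits a pretrained weight matrix into a magnitude vector and a direction, applies the LoRA update to the direction, and rescales each column by a trainable magnitude: W' = m · (W0 + BA) / ‖W0 + BA‖_c, where ‖·‖_c is the column-wise norm. The pure-Python sketch below uses nested lists instead of PyTorch tensors so it runs without dependencies; the helper names are hypothetical, and the `alpha / rank` scaling follows the original LoRA paper, which not every implementation uses.

```python
import math


def matmul(X, Y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(X[i][t] * Y[t][j] for t in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]


def column_norms(W):
    """L2 norm of each column of W."""
    return [math.sqrt(sum(row[j] ** 2 for row in W)) for j in range(len(W[0]))]


def dora_weight(W0, m, B, A, alpha, rank):
    """Return m * (W0 + scale * B A) / ||W0 + scale * B A||_c."""
    scale = alpha / rank
    delta = matmul(B, A)  # low-rank LoRA update, shape d x k
    V = [[w + scale * dw for w, dw in zip(w_row, d_row)]
         for w_row, d_row in zip(W0, delta)]
    norms = column_norms(V)
    # Normalize each column to unit length, then rescale it by its magnitude.
    return [[m[j] * V[i][j] / norms[j] for j in range(len(V[0]))]
            for i in range(len(V))]


W0 = [[3.0, 0.0],
      [4.0, 1.0]]       # frozen pretrained weight (d = 2, k = 2)
m = column_norms(W0)    # magnitude initialized to ||W0||_c, i.e. [5.0, 1.0]
A = [[0.5, -0.5]]       # r x k with rank r = 1
B = [[0.0], [0.0]]      # d x r, zero-initialized as in LoRA

print(dora_weight(W0, m, B, A, alpha=1.0, rank=1))  # [[3.0, 0.0], [4.0, 1.0]]
```

Because B starts at zero and m starts at the column norms of W0, the adapted weight initially equals W0, so training begins from the pretrained model. Afterwards, the column norms of W' always equal m, which is what lets DoRA learn magnitude and direction separately.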

Strategies for improving LLM performance through dataset-centric finetuning, focusing on instruction datasets rather than changes to the model architecture.

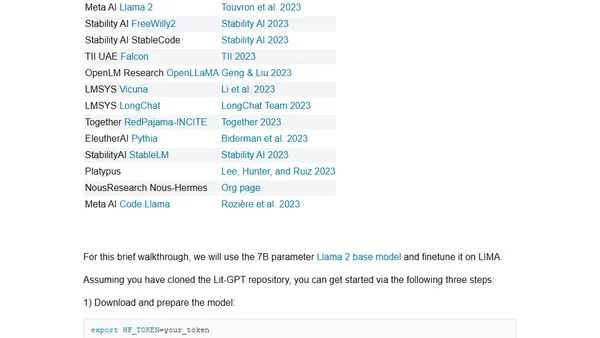

A guide to participating in the NeurIPS 2023 LLM Efficiency Challenge, focusing on efficient finetuning of large language models on a single GPU.

Techniques to reduce memory usage by up to 20x when training LLMs and Vision Transformers in PyTorch.

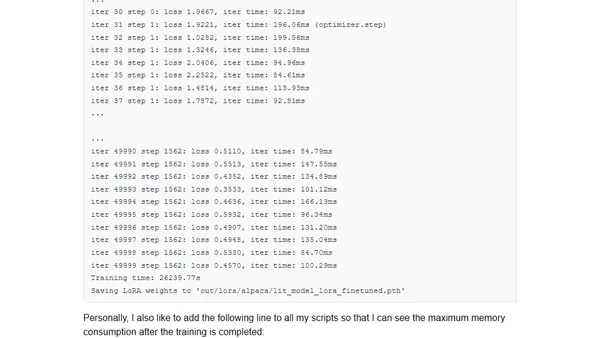

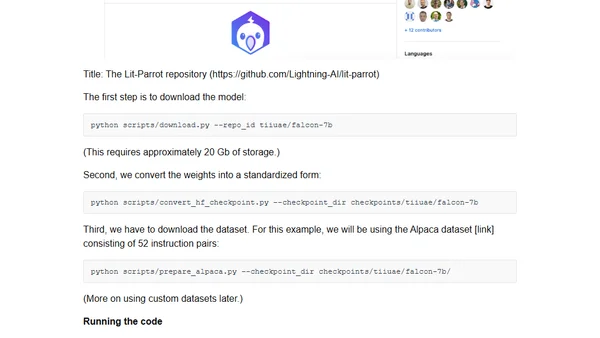

A guide to efficiently finetuning Falcon LLMs using parameter-efficient methods like LoRA and Adapters to reduce compute time and cost.

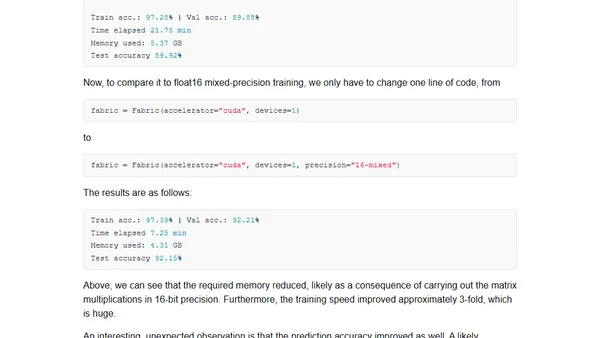

Explores mixed-precision techniques that speed up large language model training and inference by up to 3x without losing accuracy.
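Mixed-precision training typically keeps a float32 copy of the weights and, when computing in float16, scales the loss, because float16 has far less range and precision than float32. The snippet below illustrates both effects with Python's standard `struct` module, whose `'e'` format converts to and from IEEE 754 half precision. The helper name is hypothetical, the scale factor 1024 is an arbitrary example rather than a recommended setting, and the snippet neither measures speedups nor covers bfloat16, which many setups use instead.

```python
import struct


def to_fp16(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


# Storage: half precision needs 2 bytes per value instead of 4.
print(struct.calcsize("<e"), struct.calcsize("<f"))  # 2 4

# Precision: only about 3 significant decimal digits survive.
print(to_fp16(0.1))  # 0.0999755859375

# Small weight updates vanish when added in float16 ...
print(to_fp16(1.0 + 1e-4))  # 1.0
# ... which is one reason the master weights are kept in float32.

# Tiny gradients underflow to zero in float16 ...
print(to_fp16(1e-8))  # 0.0
# ... but survive if the loss (and hence the gradient) is scaled up first.
scale = 1024.0
print(to_fp16(1e-8 * scale) / scale > 0.0)  # True
```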

Learn about Low-Rank Adaptation (LoRA), a parameter-efficient method for finetuning large language models with reduced computational costs.
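In LoRA, the pretrained weight W0 (shape d × k) stays frozen and only two small matrices, B (d × r) and A (r × k), are trained, so the layer computes h = W0 x + (α / r) · B A x. The sketch below shows that forward pass with plain Python lists, plus the resulting trainable-parameter count; the helper names are hypothetical, and the 4096 × 4096 matrix size and rank 8 are example values chosen only for illustration.

```python
def matvec(M, x):
    """Matrix-vector product for a matrix given as a list of rows."""
    return [sum(m_ij * x_j for m_ij, x_j in zip(row, x)) for row in M]


def lora_forward(W0, A, B, x, alpha, rank):
    """h = W0 x + (alpha / rank) * B (A x); only A and B would be trained."""
    base = matvec(W0, x)              # frozen pretrained path
    update = matvec(B, matvec(A, x))  # low-rank path: k -> r -> d
    scale = alpha / rank
    return [b + scale * u for b, u in zip(base, update)]


def trainable_params(d, k, rank=None):
    """Trainable parameters for one d x k layer: full finetuning vs. LoRA."""
    return d * k if rank is None else rank * (d + k)


W0 = [[1.0, 2.0], [3.0, 4.0]]
A = [[1.0, 1.0]]     # r x k with r = 1
B = [[0.0], [0.0]]   # zero-initialized, so the update starts at zero
print(lora_forward(W0, A, B, [1.0, 1.0], alpha=2.0, rank=1))  # [3.0, 7.0]

full = trainable_params(4096, 4096)          # 16,777,216
lora = trainable_params(4096, 4096, rank=8)  # 65,536
print(full // lora)  # 256
```

The savings come from r being much smaller than d and k: the update costs r · (d + k) parameters instead of d · k, and the zero-initialized B means the adapted layer starts out identical to the pretrained one.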