How Good Are the Latest Open LLMs? And Is DPO Better Than PPO?

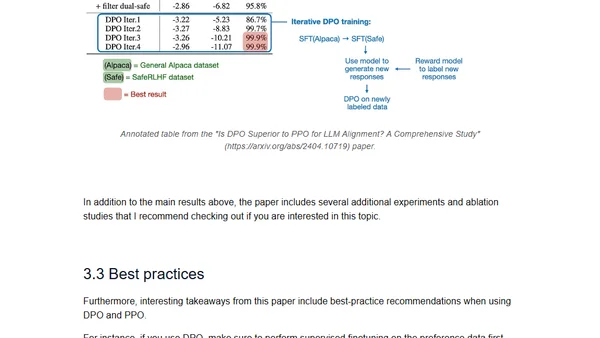

This article provides a detailed technical analysis of four major open-source Large Language Model (LLM) releases from April 2024: Mixtral 8x22B, Meta's Llama 3, Microsoft's Phi-3, and Apple's OpenELM. It compares their architectures, their performance on benchmarks such as MMLU, and their key innovations. The article also examines reinforcement learning for LLM alignment, comparing the Direct Preference Optimization (DPO) and Proximal Policy Optimization (PPO) algorithms.
