LLM Research Insights: Instruction Masking and New LoRA Finetuning Experiments

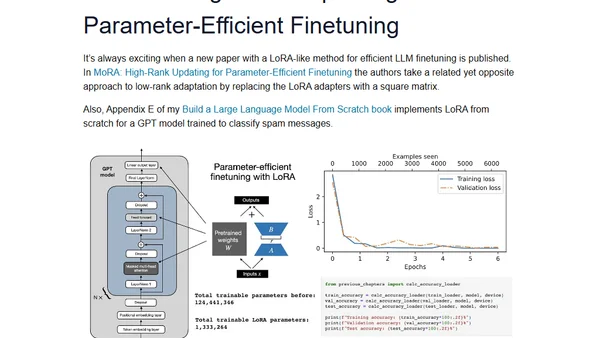

This article examines three recent research papers on instruction finetuning and parameter-efficient finetuning of large language models with LoRA. It focuses on a study that questions the common practice of instruction masking, where the instruction tokens are excluded from the loss calculation. The study compares how models perform when the loss is computed with and without this masking. The author adds practical context from working with these methods in LitGPT and discusses the implications for LLM development.
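To make "instruction masking during loss calculation" concrete, here is a minimal, framework-free Python sketch. It assumes a decoder-only model trained with next-token cross-entropy. The helpers `build_labels` and `causal_lm_loss` are hypothetical names, the toy token IDs and logits are invented for illustration, and this is neither LitGPT's implementation nor the method from the paper. The ignore value `-100` mirrors the default `ignore_index` of PyTorch's `CrossEntropyLoss`.

```python
import math
from typing import List, Sequence, Tuple

# Label value the loss skips (same as PyTorch's CrossEntropyLoss default ignore_index).
IGNORE_INDEX = -100


def build_labels(prompt_ids: Sequence[int], response_ids: Sequence[int],
                 mask_instruction: bool = True) -> Tuple[List[int], List[int]]:
    """Concatenate prompt and response; optionally hide the prompt from the loss."""
    input_ids = list(prompt_ids) + list(response_ids)
    labels = list(input_ids)
    if mask_instruction:
        # Instruction tokens get IGNORE_INDEX, so only response tokens are scored.
        labels[:len(prompt_ids)] = [IGNORE_INDEX] * len(prompt_ids)
    return input_ids, labels


def log_softmax(row: Sequence[float]) -> List[float]:
    """Numerically stable log-softmax over one row of logits."""
    m = max(row)
    lse = m + math.log(sum(math.exp(x - m) for x in row))
    return [x - lse for x in row]


def causal_lm_loss(logits: Sequence[Sequence[float]], labels: Sequence[int]) -> float:
    """Mean next-token cross-entropy, skipping positions labeled IGNORE_INDEX."""
    total, count = 0.0, 0
    # logits[t] scores the token at position t + 1, so targets are shifted by one.
    for t in range(len(labels) - 1):
        target = labels[t + 1]
        if target == IGNORE_INDEX:
            continue
        total -= log_softmax(logits[t])[target]
        count += 1
    return total / count if count else 0.0


if __name__ == "__main__":
    prompt, response = [1, 2, 3], [4, 0]  # toy token IDs, vocabulary size 5
    ids, masked = build_labels(prompt, response, mask_instruction=True)
    _, unmasked = build_labels(prompt, response, mask_instruction=False)
    print(masked)  # [-100, -100, -100, 4, 0]
    # Toy logits: uniform at the prompt positions, confident about the response tokens.
    logits = [[0.0] * 5 for _ in ids]
    logits[2][4] = 5.0  # position 2 predicts the first response token (4)
    logits[3][0] = 5.0  # position 3 predicts the second response token (0)
    print("masked loss:  ", causal_lm_loss(logits, masked))
    print("unmasked loss:", causal_lm_loss(logits, unmasked))
```

With masking, only the response tokens contribute to the average. Without it, the model is also penalized for mispredicting the instruction text itself. That difference in the training signal is what the study discussed above revisits.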
