Deploy Mixtral 8x7B on AWS Inferentia2 with Hugging Face Optimum

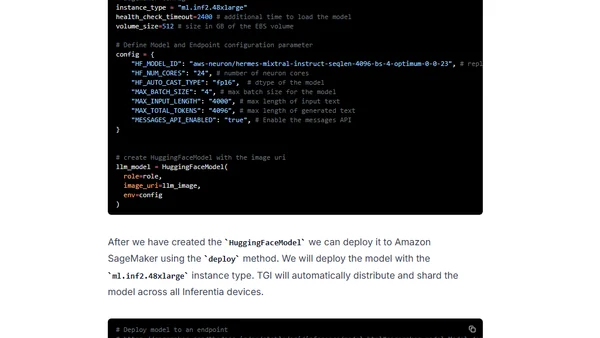

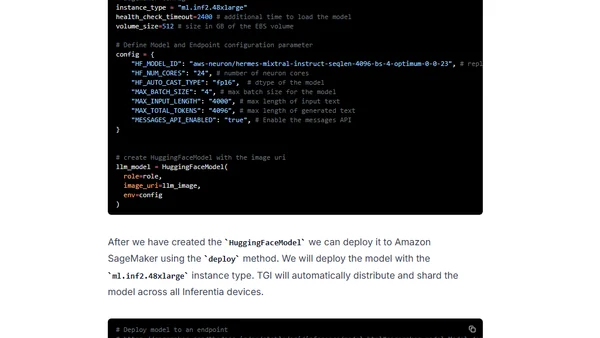

A technical guide on deploying the Mixtral 8x7B LLM on AWS Inferentia2 using Hugging Face Optimum and Amazon SageMaker.
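The workflow this guide covers can be sketched roughly as follows. This is a minimal sketch, not the guide's exact code. It assumes the Hugging Face TGI Neuronx container is used through the SageMaker Python SDK (`HuggingFaceModel`, `get_huggingface_llm_image_uri` with the `"huggingface-neuronx"` backend). The helper names `build_tgi_neuronx_env` and `deploy_mixtral` are hypothetical. The numeric settings (24 NeuronCores on an `ml.inf2.48xlarge`, batch size 4, 4096 total tokens) are illustrative assumptions, so check them against the container documentation for your version.

```python
from typing import Dict


def build_tgi_neuronx_env(
    model_id: str = "mistralai/Mixtral-8x7B-Instruct-v0.1",
    num_cores: int = 24,  # assumption: inf2.48xlarge = 12 Inferentia2 chips x 2 NeuronCores
    cast_type: str = "bf16",
    max_batch_size: int = 4,
    max_input_length: int = 4000,
    max_total_tokens: int = 4096,
) -> Dict[str, str]:
    """Hypothetical helper: environment variables for the TGI Neuronx container.

    Values are illustrative assumptions, not the guide's exact settings.
    """
    # The prompt must leave room for at least one generated token.
    if max_input_length >= max_total_tokens:
        raise ValueError("max_input_length must be smaller than max_total_tokens")
    # SageMaker passes container environment variables as strings.
    return {
        "HF_MODEL_ID": model_id,
        "HF_NUM_CORES": str(num_cores),
        "HF_AUTO_CAST_TYPE": cast_type,
        "MAX_BATCH_SIZE": str(max_batch_size),
        "MAX_INPUT_LENGTH": str(max_input_length),
        "MAX_TOTAL_TOKENS": str(max_total_tokens),
    }


def deploy_mixtral(role_arn: str, env: Dict[str, str]):
    """Hypothetical helper: deploy to a SageMaker real-time endpoint.

    Requires the sagemaker SDK and valid AWS credentials.
    """
    # Imported lazily so build_tgi_neuronx_env works without the SDK installed.
    from sagemaker.huggingface import HuggingFaceModel, get_huggingface_llm_image_uri

    image_uri = get_huggingface_llm_image_uri("huggingface-neuronx")
    model = HuggingFaceModel(role=role_arn, image_uri=image_uri, env=env)
    return model.deploy(
        initial_instance_count=1,
        instance_type="ml.inf2.48xlarge",
        # Loading (and possibly compiling) the model can take a long time.
        container_startup_health_check_timeout=3600,
        volume_size=512,  # GB of storage for weights and compiled artifacts
    )
```

Splitting configuration from deployment keeps the settings checkable offline. Only `deploy_mixtral` needs AWS access. A typical call would be `deploy_mixtral(role_arn, build_tgi_neuronx_env())`, where `role_arn` is your own SageMaker execution role.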


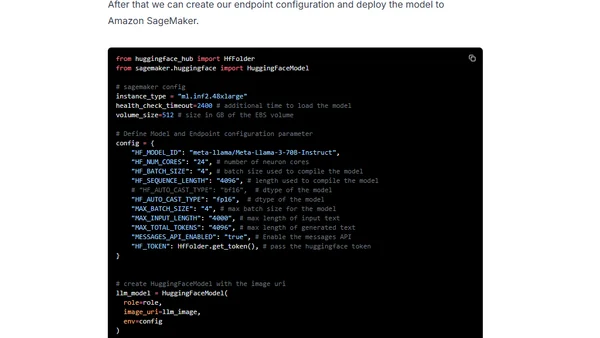

A technical guide on deploying Meta's Llama 3 70B Instruct model on AWS Inferentia2 using Hugging Face Optimum and Amazon SageMaker.

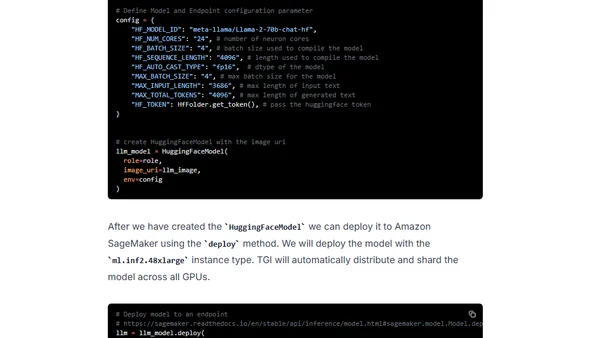

A technical guide on deploying Meta's Llama 2 70B large language model on AWS Inferentia2 hardware using Hugging Face Optimum and SageMaker.

Tutorial on deploying embedding models using AWS Inferentia2 and Amazon SageMaker for accelerated inference performance.

A tutorial on deploying Meta's Llama 2 7B model on AWS Inferentia2 using Amazon SageMaker and the optimum-neuron library.

A tutorial on deploying Stable Diffusion XL for accelerated inference using AWS Inferentia2 and Amazon SageMaker.

A tutorial on optimizing and deploying a BERT model for low-latency inference using AWS Inferentia2 accelerators and Amazon SageMaker.