Common Python Reference Cycle Patterns

Analyzes Python reference cycles in high-performance deep learning systems, showing patterns to avoid memory leaks when garbage collection is disabled.
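The core problem can be illustrated with a small sketch. It assumes CPython, where reference counting frees acyclic garbage immediately, and uses a hypothetical `Node` class and `leaks_without_gc` helper (neither comes from the article). With `gc.disable()`, two objects that point at each other are never reclaimed. Replacing one back-reference with `weakref.ref` breaks the cycle, so reference counting alone frees both. This shows the general mechanism only; it is not one of the specific patterns the article catalogs.

```python
import gc
import weakref


class Node:
    """Hypothetical toy object; `other` may point back at another Node."""

    def __init__(self):
        self.other = None


def leaks_without_gc(use_weakref: bool) -> bool:
    """Return True if a Node pair outlives its last external reference
    while the cyclic garbage collector is disabled (CPython semantics)."""
    was_enabled = gc.isenabled()
    gc.disable()  # emulate a system that turns off the cyclic GC
    try:
        a, b = Node(), Node()
        a.other = b
        # A strong back-reference forms a cycle; a weak one does not.
        b.other = weakref.ref(a) if use_weakref else a
        probe = weakref.ref(a)  # observe whether `a` gets freed
        del a, b  # drop the only external strong references
        alive = probe() is not None
    finally:
        gc.collect()  # explicit collection still works while disabled
        if was_enabled:
            gc.enable()  # restore the caller's GC state
    return alive


print(leaks_without_gc(use_weakref=False))  # cycle: objects survive
print(leaks_without_gc(use_weakref=True))   # no cycle: freed at once
```

The weak back-reference is one common way to avoid a cycle. Another is to explicitly clear the back-reference (for example, setting it to `None`) when the object is no longer needed.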

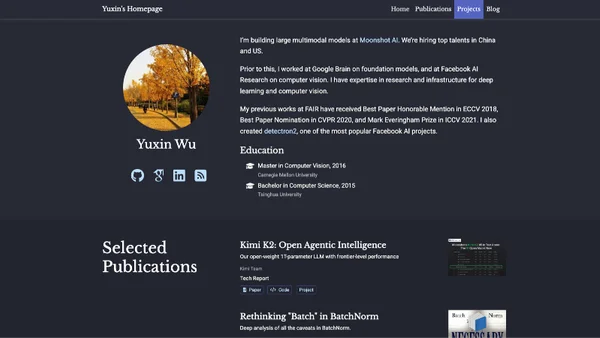

Yuxin Wu shares in-depth insights on Python, PyTorch, and large-scale software design. Explore tutorials on memory optimization, data loading, stacked diffs, static initialization, and building efficient deep learning systems.


Analyzes why the registration pattern in config systems fails to scale, highlighting import-time costs and proposing alternatives.

A deep dive into solving the C++ static initialization order fiasco (SIOF) and related static destruction issues encountered while maintaining PyTorch at Google.

Explains why PyTorch multi-process data loaders cause massive RAM duplication and provides solutions to share dataset memory across processes.

Argues that separating 'loss functions' from models is a flawed abstraction in deep learning systems, proposing a more flexible design.

Principles for designing and maintaining clean, modular core APIs in research software libraries, focusing on deep learning and experimental code.

Explores techniques for flattening and unflattening nested data structures in TensorFlow, JAX, and PyTorch for efficient deep learning model development.

Compares PyTorch's TorchScript tracing and scripting methods for model deployment, advocating for tracing as the preferred default approach.

Best practices for using Python's logging module in libraries to avoid common mistakes and improve log control.

Explores how images are discretized into pixels, the impact of sampling grids on deep learning models, and inconsistencies in image processing libraries.