Mortal Komputation: On Hinton's argument for superhuman AI.

Analyzes Geoffrey Hinton's technical argument comparing biological and digital intelligence, concluding digital AI will surpass human capabilities.

Explains the intuition behind the Attention mechanism and Transformer architecture, focusing on solving issues in machine translation and language modeling.

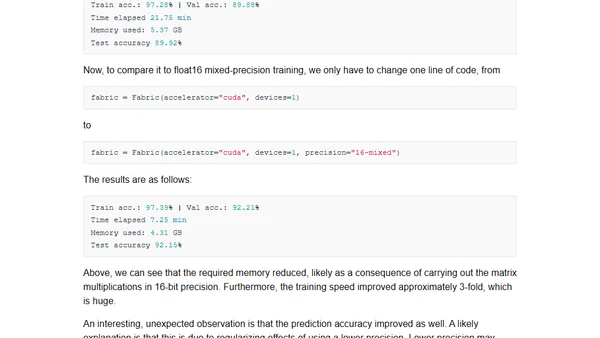

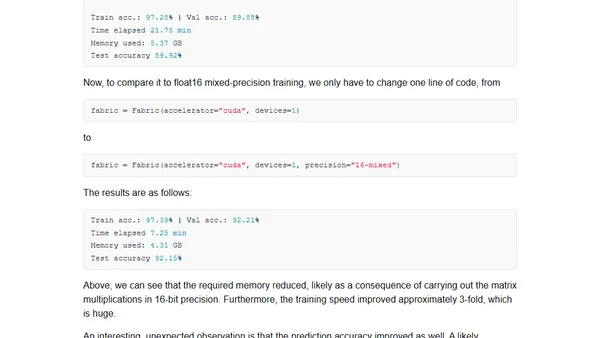

Explores how mixed-precision training techniques can speed up large language model training and inference by up to 3x while also reducing memory use.

Exploring mixed-precision techniques to speed up large language model training and inference by up to 3x without losing accuracy.
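The numerical problem that mixed precision has to work around can be shown without a GPU. Python's `struct` module can round a value to IEEE 754 half precision (the `"e"` format), so the minimal sketch below uses that to show two effects: small weight updates being rounded away in FP16, and tiny gradients underflowing to zero unless the loss is scaled first. These effects are the usual motivation for keeping a higher-precision master copy of the weights and for loss scaling. The helper `to_fp16` and the chosen numbers (update `1e-4`, gradient `1e-8`, scale `1024`) are illustrative assumptions, not the article's code; Python floats are FP64 here and merely stand in for an FP32 master copy.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision (FP16) value (hypothetical helper)."""
    return struct.unpack("e", struct.pack("e", x))[0]


# 1) Small updates vanish in FP16: near 1.0, adjacent FP16 values are
#    2**-10 (about 0.000977) apart, so adding 1e-4 rounds back to 1.0.
weight, update = 1.0, 1e-4
fp16_weight = to_fp16(to_fp16(weight) + to_fp16(update))
master_weight = weight + update  # Python floats are FP64; stands in for an FP32 master copy

# 2) Tiny gradients underflow to zero in FP16 (smallest subnormal is 2**-24),
#    but survive if scaled up before the cast and unscaled afterwards.
grad, loss_scale = 1e-8, 1024.0
unscaled_grad = to_fp16(grad)
recovered_grad = to_fp16(grad * loss_scale) / loss_scale

print(fp16_weight, master_weight)     # FP16 update is lost; master copy keeps it
print(unscaled_grad, recovered_grad)  # 0.0 vs. roughly 1e-8
```

In real training, frameworks handle the casting and scaling automatically; this sketch only isolates the rounding behavior that makes those steps necessary.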

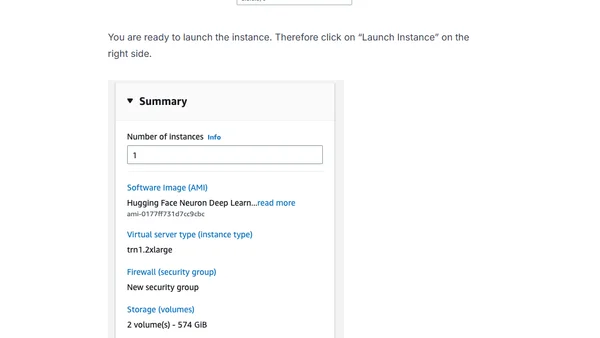

A guide to setting up an AWS Trainium instance using the Hugging Face Neuron Deep Learning AMI to fine-tune a BERT model for text classification.

A reflection on past skepticism of deep learning and why similar dismissal of Large Language Models (LLMs) might be a mistake.

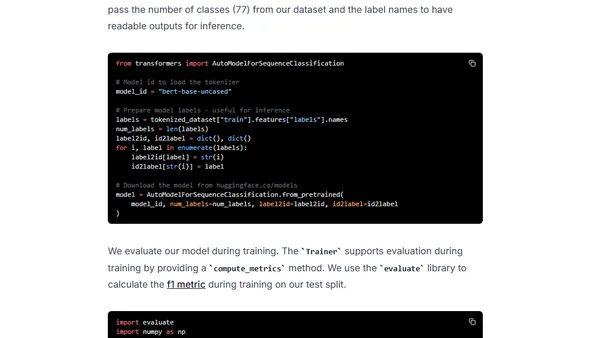

A tutorial on fine-tuning a BERT model for text classification using the new PyTorch 2.0 framework and the Hugging Face Transformers library.

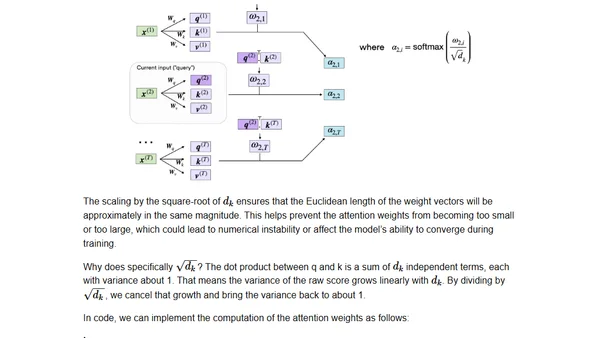

A technical guide to coding the self-attention mechanism from scratch, as used in transformers and large language models.
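As a rough illustration of what coding self-attention from scratch involves, here is a minimal single-head sketch in pure Python (no masking, no batching, no multi-head split). The random matrices stand in for learned query, key, and value projections, and all helper names (`matmul`, `softmax_rows`, `self_attention`, `rand_matrix`) are hypothetical; a practical implementation would use tensor operations in a framework such as PyTorch.

```python
import math
import random


def matmul(a, b):
    """Multiply an (n x m) matrix by an (m x p) matrix, both stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m):
    return [list(row) for row in zip(*m)]


def softmax_rows(m):
    """Row-wise softmax, subtracting each row's max for numerical stability."""
    out = []
    for row in m:
        mx = max(row)
        exps = [math.exp(v - mx) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over x (seq_len x d_in)."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(w_k[0])
    scores = matmul(q, transpose(k))                 # seq_len x seq_len similarity scores
    scaled = [[s / math.sqrt(d_k) for s in row] for row in scores]
    weights = softmax_rows(scaled)                   # each row sums to 1
    return matmul(weights, v), weights               # context vectors: seq_len x d_v


rng = random.Random(123)
seq_len, d_in, d_k, d_v = 4, 3, 2, 2


def rand_matrix(rows, cols):
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]


x = rand_matrix(seq_len, d_in)  # stand-in for embedded input tokens
w_q, w_k, w_v = rand_matrix(d_in, d_k), rand_matrix(d_in, d_k), rand_matrix(d_in, d_v)
context, attn = self_attention(x, w_q, w_k, w_v)
```

Each output row is a weighted average of all value vectors, with weights given by how strongly that token's query matches every key.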

An updated, comprehensive overview of the Transformer architecture and its many recent improvements, including detailed notation and attention mechanisms.

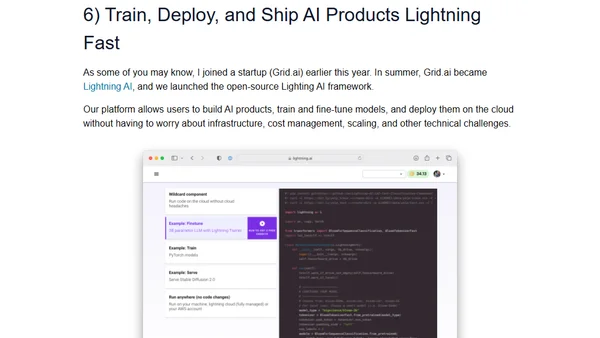

A curated list of the top 10 open-source machine learning and AI projects released or updated in 2022, including PyTorch 2.0 and scikit-learn 1.2.

A curated list of the top 10 open-source releases in Machine Learning & AI for 2022, including PyTorch 2.0 and scikit-learn 1.2.

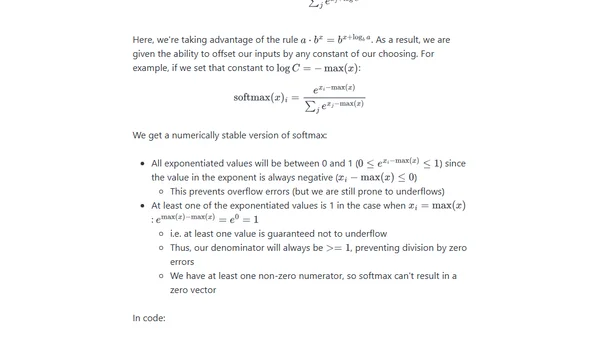

Explains numerical instability in naive softmax and cross-entropy implementations and provides stable alternatives for deep learning.
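A minimal pure-Python sketch of the standard fixes: subtracting the maximum logit before exponentiating (which leaves softmax unchanged), and computing cross-entropy through log-sum-exp instead of taking the log of a probability that may have underflowed to zero. The function names are illustrative assumptions, not taken from the article.

```python
import math


def naive_softmax(logits):
    exps = [math.exp(z) for z in logits]  # math.exp(z) overflows for z above ~709
    total = sum(exps)
    return [e / total for e in exps]


def stable_softmax(logits):
    """Subtracting the max leaves the result unchanged but keeps every exponent <= 0."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def log_sum_exp(logits):
    m = max(logits)
    return m + math.log(sum(math.exp(z - m) for z in logits))


def stable_cross_entropy(logits, target):
    """-log softmax(logits)[target], computed as logsumexp(logits) - logits[target]."""
    return log_sum_exp(logits) - logits[target]


logits = [1000.0, 0.0]
try:
    naive_softmax(logits)
except OverflowError as err:
    print("naive softmax failed:", err)
print(stable_softmax(logits))           # [1.0, 0.0]
print(stable_cross_entropy(logits, 1))  # 1000.0, where -log(stable_softmax(...)[1]) would hit log(0)
```

The same identities underlie fused "softmax plus cross-entropy" loss functions in deep learning libraries, which take raw logits rather than probabilities.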

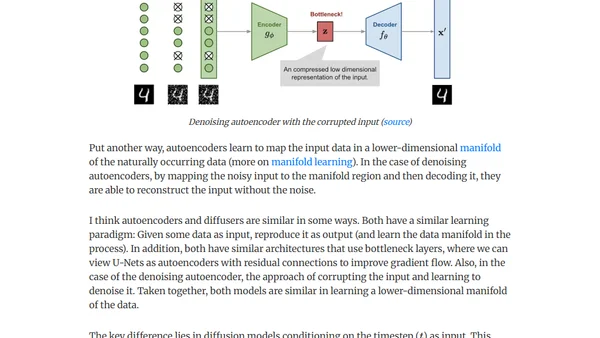

Compares autoencoders and diffusers, explaining their architectures, learning paradigms, and key differences in deep learning.

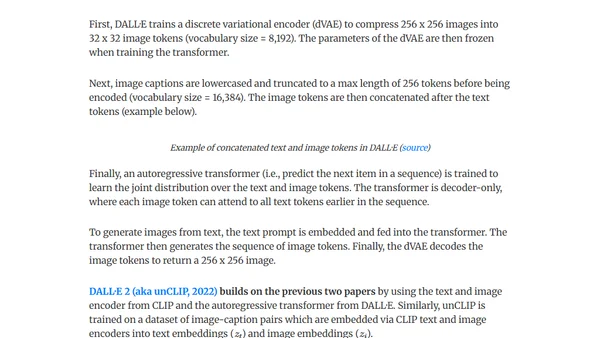

Explains core concepts behind modern text-to-image AI models like DALL-E 2 and Stable Diffusion, including diffusion, text conditioning, and latent space.
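The "diffusion" part refers to gradually corrupting data with Gaussian noise and training a network to reverse that corruption. Below is a minimal sketch of the forward (noising) process only, assuming the DDPM formulation $x_t = \sqrt{\bar\alpha_t}\,x_0 + \sqrt{1-\bar\alpha_t}\,\epsilon$ with a linear $\beta$ schedule from `1e-4` to `0.02` over 1000 steps; the schedule values are common defaults, not taken from the article, and the helper names are hypothetical. In latent diffusion models such as Stable Diffusion, the same process runs on autoencoder latents rather than on pixels.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Noise variances beta_1..beta_T, increasing linearly (assumed DDPM-style defaults)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar_t = prod_{s<=t} (1 - beta_s): how much of the signal's variance survives at step t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0, t, alpha_bars, rng):
    """Sample x_t ~ q(x_t | x_0) in closed form; returns (x_t, the noise used)."""
    a = alpha_bars[t]
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(a) * x + math.sqrt(1.0 - a) * n for x, n in zip(x0, noise)]
    return xt, noise


alpha_bars = cumulative_alphas(linear_beta_schedule(1000))
rng = random.Random(0)
x0 = [0.5, -0.2, 0.9]  # a toy "image" (or latent) with three values
x_early, noise_early = add_noise(x0, 10, alpha_bars, rng)
x_late, noise_late = add_noise(x0, 999, alpha_bars, rng)
print(alpha_bars[10], alpha_bars[999])  # close to 1 early, near 0 at the final step
```

A denoising network is then trained to predict `noise` from `x_t` (and, for text-to-image models, from a text embedding used as conditioning), which is what lets sampling run the process in reverse.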

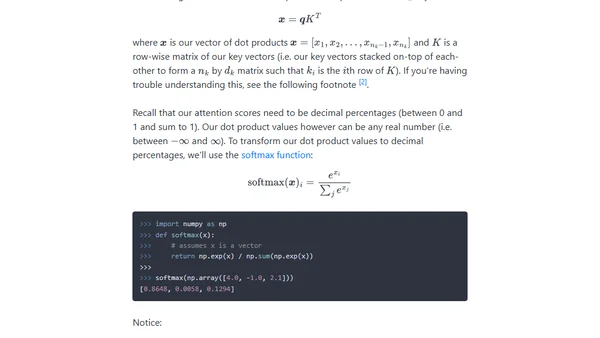

A technical explanation of the attention mechanism in transformers, building intuition from key-value lookups to the scaled dot product equation.
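The key-value intuition can be sketched directly: a Python `dict` performs a hard lookup where the query must match one key exactly, while attention performs a soft lookup that returns a weighted blend of all values, with weights $\mathrm{softmax}(q \cdot k_i / \sqrt{d_k})$. The toy keys and values below are hypothetical two-dimensional "embeddings" chosen for illustration, and the function names are assumptions rather than the article's code.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Soft key-value lookup: scaled dot-product scores, softmax, then a weighted sum of values."""
    d_k = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d_k) for key in keys]
    weights = softmax(scores)
    output = [sum(w * value[j] for w, value in zip(weights, values))
              for j in range(len(values[0]))]
    return output, weights


# A dict is a hard lookup: the query must equal one key exactly.
hard = {"cat": [10.0, 0.0], "dog": [0.0, 10.0]}
print(hard["cat"])

keys = [[1.0, 0.0], [0.0, 1.0]]  # hypothetical embeddings for "cat" and "dog"
values = [[10.0, 0.0], [0.0, 10.0]]
out, w = attend([1.0, 0.0], keys, values)                # mostly "cat", some "dog" mixed in
sharp_out, sharp_w = attend([20.0, 0.0], keys, values)  # large scores: nearly a hard lookup
```

The $\sqrt{d_k}$ divisor keeps dot products from growing with dimension; without it, scores become large and the softmax saturates toward the hard-lookup case, which shrinks its gradients.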

Author announces a new monthly AI newsletter, 'Ahead of AI,' and shares updates on a passion project and conference appearances.

Author announces the launch of 'Ahead of AI', a monthly newsletter covering AI trends, educational content, and personal updates on machine learning projects.

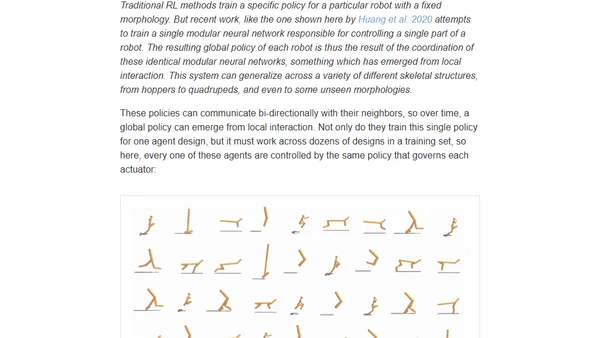

A survey exploring how concepts from collective intelligence, like swarm behavior and emergence, are being applied to improve deep learning systems.

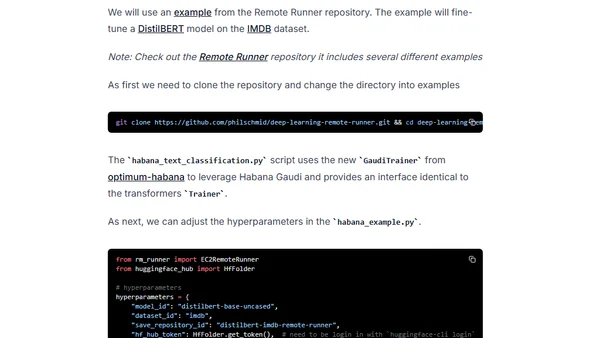

A guide to simplifying deep learning workflows using AWS EC2 Remote Runner and Habana Gaudi processors for efficient, cost-effective model training.

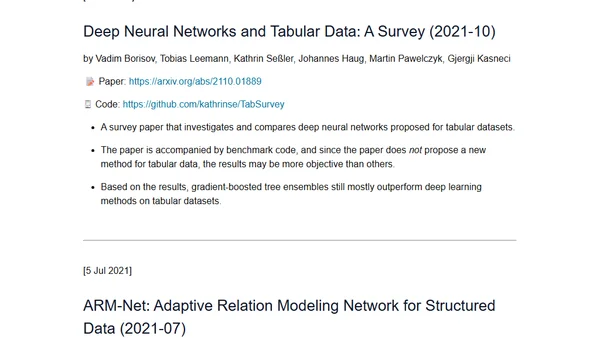

A curated list and summary of recent research papers exploring deep learning methods specifically designed for tabular data.