Fine-tune Llama 3 with PyTorch FSDP and Q-Lora on Amazon SageMaker

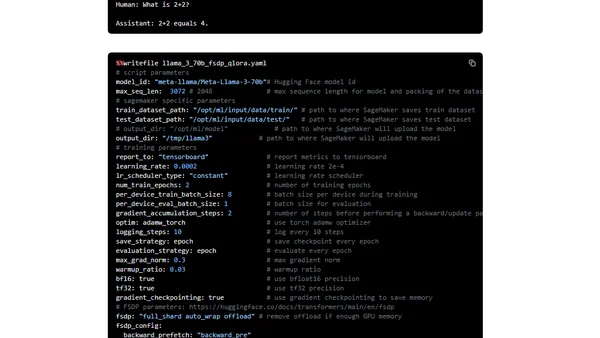

This detailed tutorial explains how to fine-tune the Llama 3 large language model on Amazon SageMaker using PyTorch FSDP (Fully Sharded Data Parallel) together with Q-Lora (quantized low-rank adaptation). It covers setting up the environment with Hugging Face libraries, preparing the dataset, and tuning the training configuration for specific AWS instance types to reduce the memory footprint and compute requirements.
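
To make the memory argument concrete, here is a back-of-the-envelope sketch in Python. It is not taken from the tutorial: the 70-billion-parameter count (the size of Llama 3 70B) and the 8-GPU setup are illustrative assumptions, and the helper `weight_memory_gib` is hypothetical. The estimate covers only the frozen base-model weights; it ignores activations, LoRA adapter weights and their optimizer state, quantization metadata such as per-block scales, and framework overhead.

```python
def weight_memory_gib(num_params: float, bits_per_param: float, num_shards: int = 1) -> float:
    """Approximate GiB of frozen base-model weights held per GPU (hypothetical helper).

    Assumes FSDP splits the weights evenly across `num_shards` GPUs and ignores
    activations, LoRA adapters, optimizer state, quantization metadata and overhead.
    """
    total_bytes = num_params * bits_per_param / 8  # bits -> bytes
    return total_bytes / num_shards / 2**30        # per-GPU share, bytes -> GiB


# Assumption: a 70-billion-parameter model, the size of Llama 3 70B.
params = 70e9

# Hypothetical setup: 8 GPUs with FSDP sharding the weights evenly.
for label, bits in [("bf16", 16), ("4-bit", 4)]:
    unsharded = weight_memory_gib(params, bits)
    sharded = weight_memory_gib(params, bits, num_shards=8)
    print(f"{label}: {unsharded:.1f} GiB total, {sharded:.1f} GiB per GPU")
```

Under these assumptions, storing the weights in 4 bits cuts them to a quarter of their bf16 size, and FSDP further divides what each GPU must hold by the number of shards, so the two reductions multiply.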
