What happens if AI labs train for pelicans riding bicycles?

A humorous look at AI model benchmarking using the challenge of generating an SVG of a pelican riding a bicycle, and the risks of labs 'gaming' the test.

A developer shares insights and practical tips from a week of experimenting with local LLMs, including model recommendations and iterative improvement patterns.

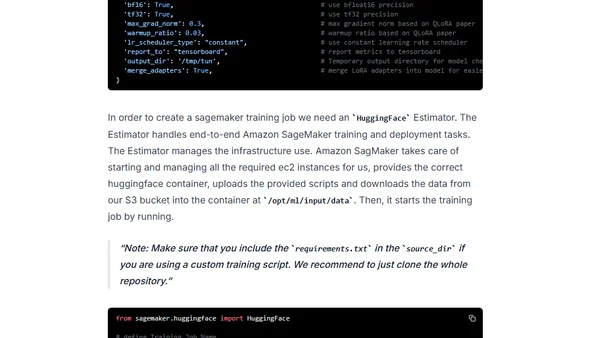

A technical guide on fine-tuning and evaluating open-source Large Language Models (LLMs) using Amazon SageMaker and Hugging Face libraries.

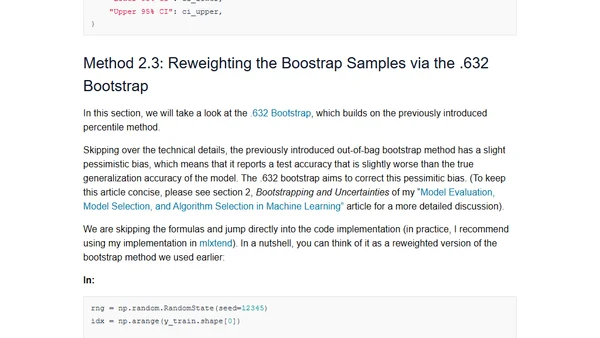

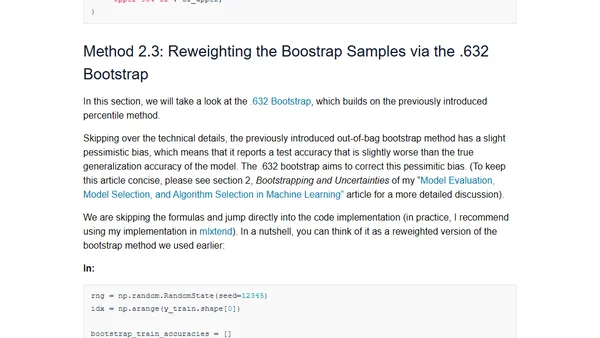

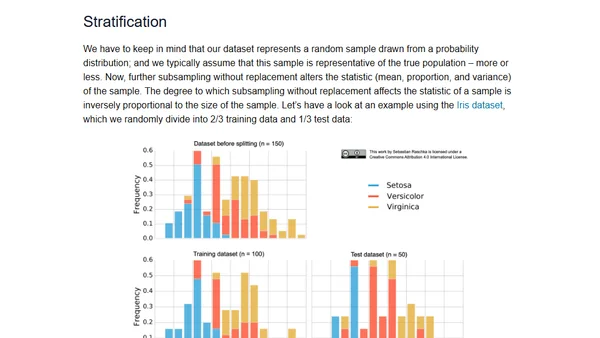

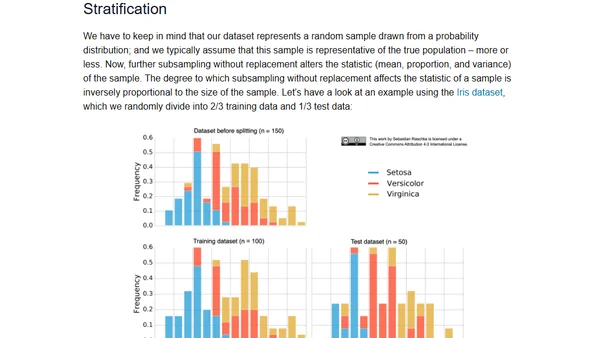

A guide to creating confidence intervals for evaluating machine learning models, covering multiple methods to quantify performance uncertainty.

A technical guide explaining methods for creating confidence intervals to measure uncertainty in machine learning model performance.

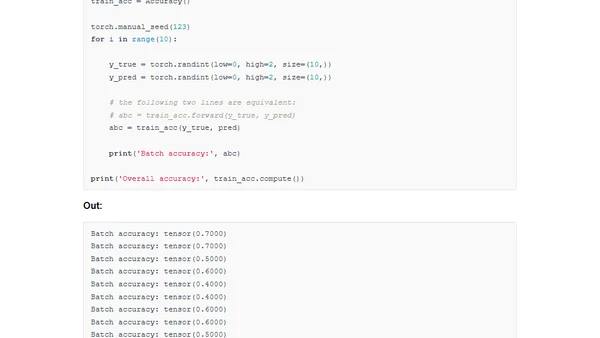

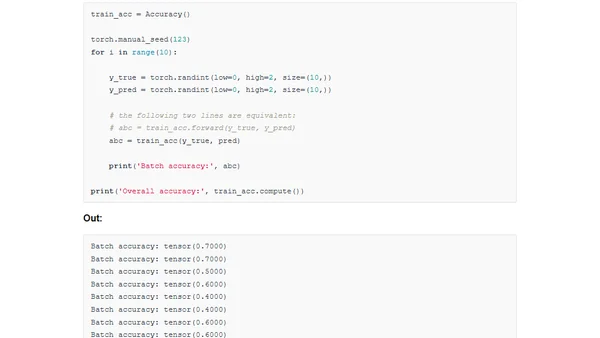

Explains the difference between .update() and .forward() methods in the TorchMetrics library for evaluating PyTorch models.

Explains the difference between .update() and .forward() in TorchMetrics, a PyTorch library for tracking model performance during training.

Final part of a series proposing a research agenda for ML monitoring, focusing on data management challenges like metric computation and real-time SLI tracking.

A technical exploration of Mean Squared Error, breaking it down into bias and variance to understand model performance and irreducible uncertainty.

A guide to model evaluation, selection, and algorithm comparison in machine learning to ensure models generalize well to new data.

A guide to evaluating machine learning models, selecting the best models, and choosing appropriate algorithms to ensure good generalization performance.