Tips for LLM Pretraining and Evaluating Reward Models

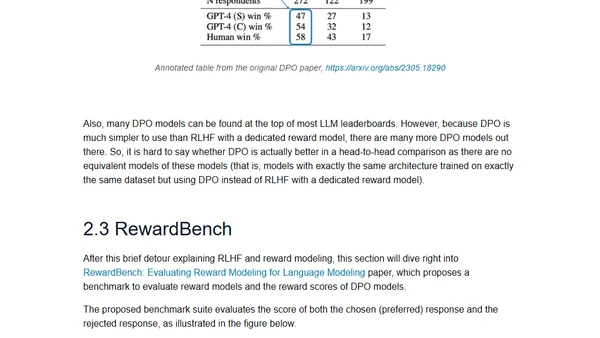

This technical article analyzes recent AI research, focusing on two papers. It first explores scalable strategies for continually pretraining large language models (LLMs), either to update them with new knowledge or to adapt them to new domains. It then discusses reward modeling, which is used in Reinforcement Learning from Human Feedback (RLHF) to align LLMs with human preferences, and a new benchmark for evaluating reward models.
