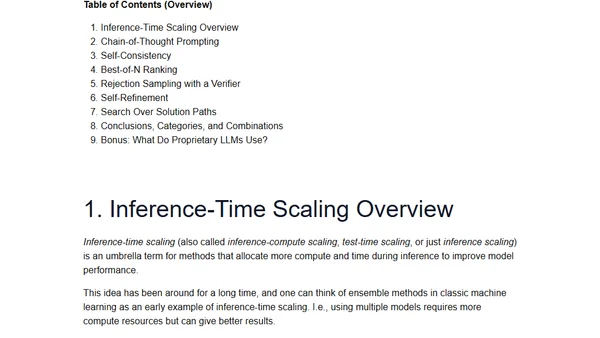

Categories of Inference-Time Scaling for Improved LLM Reasoning

This technical article categorizes and explains inference-time scaling methods that improve the reasoning and accuracy of large language models (LLMs). It covers techniques such as chain-of-thought prompting, self-consistency, and rejection sampling, drawing on the author's research and experiments for a book on building reasoning models. It is aimed at AI and machine learning practitioners and researchers.
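The summary only names these techniques. Here is a rough illustration of one of them. Self-consistency samples several reasoning paths for the same prompt and keeps the final answer that appears most often. This is a minimal sketch, not the author's implementation. It assumes a hypothetical `generate` callable that returns one extracted final answer per call. The `toy_generate` function is a hypothetical stand-in for a real LLM and simply cycles through canned answers.

```python
from collections import Counter
from itertools import cycle
from typing import Callable


def self_consistency(generate: Callable[[str], str], prompt: str, n_samples: int = 5) -> str:
    """Majority-vote self-consistency: sample n answers, return the most frequent one.

    `generate` is a hypothetical stand-in for an LLM call that samples one
    chain-of-thought response and returns only its extracted final answer.
    """
    if n_samples < 1:
        raise ValueError("n_samples must be at least 1")
    answers = [generate(prompt) for _ in range(n_samples)]
    # most_common(1) returns [(answer, count)]; ties go to the answer seen first
    return Counter(answers).most_common(1)[0][0]


# Hypothetical toy "model": cycles through canned answers to mimic sampling noise.
_canned = cycle(["42", "41", "42", "17", "42"])


def toy_generate(prompt: str) -> str:
    return next(_canned)


if __name__ == "__main__":
    print(self_consistency(toy_generate, "What is 6 * 7?"))  # prints 42
```

With a real model, the answers would need to come from independent samples at a temperature above zero. Under greedy decoding every sample would be identical, so the vote would add nothing.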


