ChatGPT Is Not a Blurry JPEG of the Web

Argues against the 'lossy compression' analogy for LLMs like ChatGPT, proposing instead that they are simulators creating temporary simulacra.

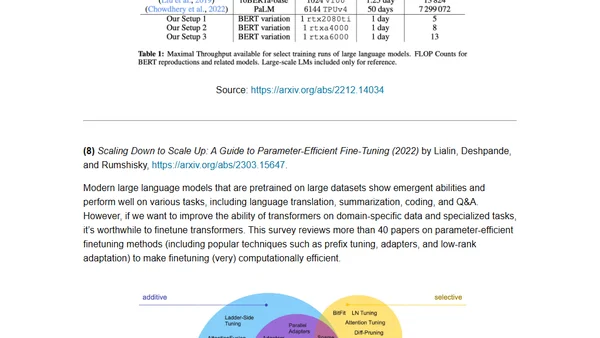

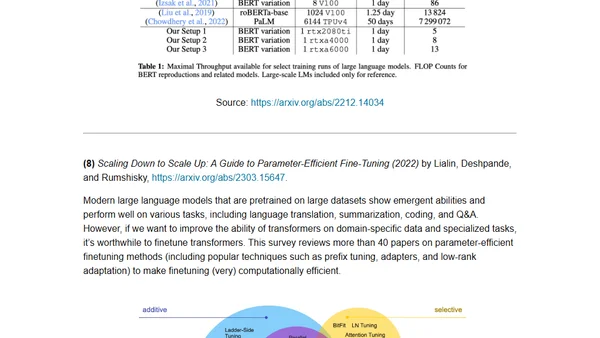

A curated reading list of key academic papers for understanding the development and architecture of large language models and transformers.

Analyzes the limitations of AI chatbots like ChatGPT in providing accurate technical answers and discusses the need for curated data and human experts.

Discusses the limitations of AI chatbots like ChatGPT in providing accurate technical answers and proposes curated resources and expert knowledge as future solutions.

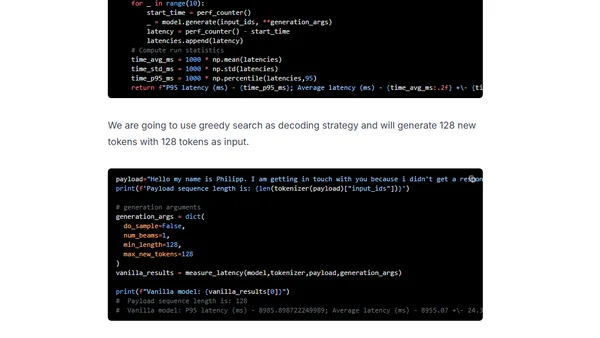

Learn to optimize GPT-J inference using DeepSpeed-Inference and Hugging Face Transformers for faster GPU performance.

An engineer shares insights and tutorials on applying Cohere's large language models for real-world tasks like prompt engineering and semantic search.
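Semantic search of this kind usually ranks documents by the cosine similarity between a query embedding and each document embedding. A minimal sketch, assuming the embeddings have already been produced by some embedding model (such as Cohere's). The three-dimensional vectors below are made-up toy values, not real model output, and `semantic_search` is a hypothetical helper name.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def semantic_search(query_vec, doc_vecs, top_k=2):
    """Return indices of the top_k documents most similar to the query."""
    scored = [(cosine(query_vec, v), i) for i, v in enumerate(doc_vecs)]
    scored.sort(reverse=True)  # highest similarity first
    return [i for _, i in scored[:top_k]]


# Toy embeddings (hypothetical values standing in for real model output).
docs = ["intro to transformers", "attention explained", "cooking pasta"]
doc_vecs = [[0.9, 0.1, 0.0], [0.1, 0.8, 0.1], [0.0, 0.2, 0.9]]
query_vec = [0.8, 0.2, 0.0]

for i in semantic_search(query_vec, doc_vecs):
    print(docs[i])
```

In practice the vectors would have hundreds of dimensions and a vector index would replace the linear scan; the ranking logic stays the same.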

Explores how Large Language Models perform implicit Bayesian inference through in-context learning, connecting exchangeable sequence models to prompt-based learning.
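The simplest exchangeable sequence model is a Beta-Bernoulli model, where predicting the next symbol amounts to updating a posterior from the symbols seen so far, loosely analogous to conditioning on a prompt. The toy sketch below is an illustration of that idea only, not a model of an LLM, and its function names are hypothetical. It computes the posterior predictive P(next = 1 | context) = (α + k) / (α + β + n) for k ones among n context symbols, and checks that a sequence's joint probability does not depend on its order.

```python
from fractions import Fraction


def predictive_prob(context, alpha=1, beta=1):
    """P(next = 1 | context) under a Beta(alpha, beta) prior on a Bernoulli parameter."""
    ones = sum(context)
    return Fraction(alpha + ones, alpha + beta + len(context))


def sequence_prob(seq, alpha=1, beta=1):
    """Joint probability of a 0/1 sequence via the chain rule of predictive probabilities."""
    p = Fraction(1)
    for t, x in enumerate(seq):
        p_one = predictive_prob(seq[:t], alpha, beta)
        p *= p_one if x == 1 else 1 - p_one
    return p


context = [1, 1, 0]
print(predictive_prob(context))  # prints 3/5
# Exchangeability: reordering the sequence leaves its probability unchanged.
print(sequence_prob([1, 1, 0]), sequence_prob([0, 1, 1]))  # prints 1/12 1/12
```

`Fraction` keeps the arithmetic exact, so the order-invariance shows up as exact equality rather than floating-point agreement.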

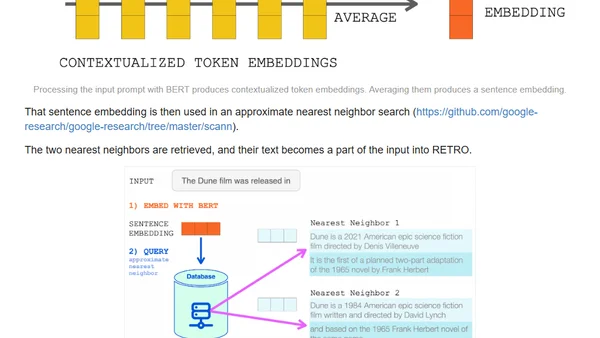

Explains how retrieval-augmented language models like RETRO achieve GPT-3 performance with far fewer parameters by querying external knowledge.
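RETRO splits its input into fixed-size chunks and, for each chunk, retrieves nearest-neighbour text from a large database; the model then attends to those neighbours through cross-attention. The sketch below covers only the retrieval step, under loud simplifying assumptions: a bag-of-words embedding stands in for RETRO's frozen BERT embedder, a brute-force scan replaces its approximate nearest-neighbour index, and the chunk size, database, and helper names are all hypothetical.

```python
import math
from collections import Counter


def embed(text):
    """Hypothetical stand-in embedder: bag-of-words counts (RETRO uses frozen BERT)."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a)  # Counter returns 0 for missing words
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve_neighbours(text, database, chunk_size=4, k=1):
    """For each chunk of `text`, return the k most similar database entries."""
    tokens = text.split()
    db_vecs = [embed(d) for d in database]
    neighbours = []
    for start in range(0, len(tokens), chunk_size):
        query = embed(" ".join(tokens[start:start + chunk_size]))
        ranked = sorted(range(len(database)),
                        key=lambda i: cosine(query, db_vecs[i]),
                        reverse=True)  # brute-force scan, not an ANN index
        neighbours.append([database[i] for i in ranked[:k]])
    return neighbours


database = [
    "paris is the capital of france",
    "the transformer uses attention",
    "retrieval improves language models",
]
result = retrieve_neighbours(
    "what is the capital of france and how does attention work", database)
for chunk_neighbours in result:
    print(chunk_neighbours)
```

Because the knowledge lives in the database rather than in the weights, updating what the model can draw on means re-indexing text, not retraining parameters.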

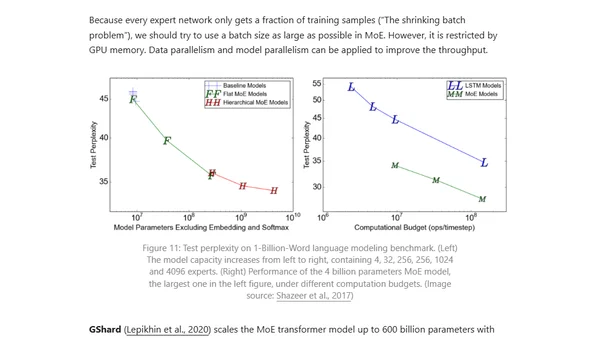

Explores parallelism techniques and memory optimization strategies for training massive neural networks across multiple GPUs.
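Data parallelism, one of the standard techniques in this area, gives each GPU a copy of the model and a shard of the batch, then averages the gradients with an all-reduce before the update. A minimal pure-Python sketch, with simulated workers in place of real GPUs and a one-parameter linear model chosen purely for illustration (`grad_mse` and `data_parallel_grad` are hypothetical names), showing why averaging equal-sized shard gradients reproduces the full-batch gradient:

```python
def grad_mse(w, xs, ys):
    """Gradient of mean squared error for the model y ~ w * x with respect to w."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def data_parallel_grad(w, xs, ys, num_workers):
    """Simulate data parallelism: per-worker shard gradients, then a mean all-reduce."""
    if len(xs) % num_workers:
        raise ValueError("batch size must be divisible by num_workers")
    shard = len(xs) // num_workers
    local_grads = []
    for rank in range(num_workers):
        lo, hi = rank * shard, (rank + 1) * shard
        local_grads.append(grad_mse(w, xs[lo:hi], ys[lo:hi]))  # each "GPU" sees one shard
    return sum(local_grads) / num_workers  # the all-reduce (average) step


xs, ys, w = [1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0], 1.0
print(grad_mse(w, xs, ys), data_parallel_grad(w, xs, ys, num_workers=2))  # prints -15.0 -15.0
```

The equality relies on equal-sized shards; with uneven shards, each worker's gradient would need to be weighted by its shard size before averaging.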