How to fine-tune open LLMs in 2025 with Hugging Face

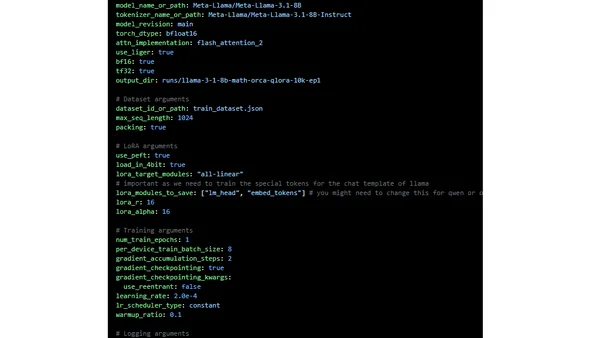

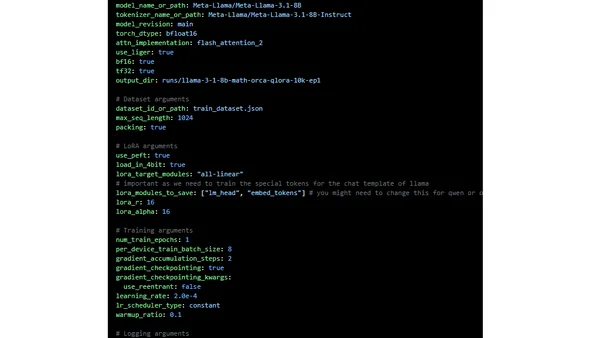

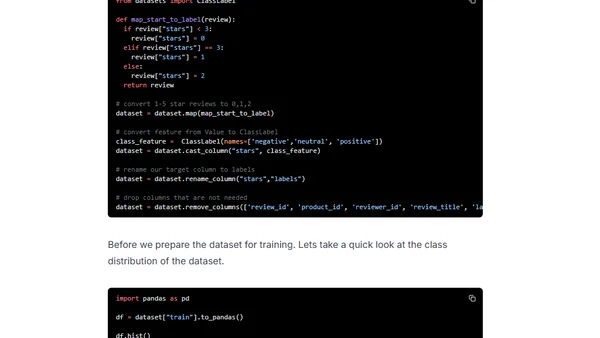

A technical guide on optimizing and scaling the fine-tuning of open-source large language models using Hugging Face tools in 2025.
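The summary does not name the optimizations the guide covers. One widely used option is parameter-efficient fine-tuning such as LoRA, which is assumed here purely for illustration. LoRA freezes each weight matrix W (d_out x d_in) and trains a low-rank update B @ A, where B is d_out x r and A is r x d_in. The sketch below compares trainable parameter counts for full fine-tuning and LoRA. The model shape (hidden size 4096, 32 layers, 4 adapted projections per layer, rank 16) is hypothetical and not taken from the guide.

```python
# Sketch: trainable parameters for full fine-tuning vs. LoRA.
# Assumption: LoRA is applied to n_matrices weight matrices of shape d_out x d_in;
# all dimensions used below are hypothetical, not taken from the guide.

def full_params(d_in: int, d_out: int, n_matrices: int) -> int:
    """Every entry of every adapted matrix is trainable."""
    return n_matrices * d_in * d_out


def lora_params(d_in: int, d_out: int, n_matrices: int, rank: int) -> int:
    """Per matrix, LoRA trains B (d_out x r) and A (r x d_in): r * (d_in + d_out) values."""
    return n_matrices * rank * (d_in + d_out)


if __name__ == "__main__":
    d, layers, proj_per_layer, r = 4096, 32, 4, 16  # hypothetical model shape
    n = layers * proj_per_layer
    full = full_params(d, d, n)
    lora = lora_params(d, d, n, r)
    print(f"full: {full:,}  lora: {lora:,}  trainable fraction: {lora / full:.4%}")
```

For square matrices the fraction is 2r / d, so the savings grow with model width and shrink as the rank increases.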

A guide to accelerating multilingual BERT fine-tuning using Hugging Face Transformers with distributed training on Amazon SageMaker.
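In data-parallel training every GPU holds a full model copy and processes its own slice of each batch, so the batch that one optimizer step effectively sees grows with the number of workers. The sketch below shows that arithmetic. The helper names and the example values (per-device batch 8, 2 accumulation steps, 4 GPUs, 10,000 examples) are hypothetical and not taken from the SageMaker guide, and the step count is an approximation that ignores how individual frameworks round partial batches.

```python
import math

# Sketch: effective (global) batch size and optimizer steps per epoch under
# data parallelism. Helper names and values are hypothetical illustrations.

def global_batch_size(per_device: int, grad_accum: int, world_size: int) -> int:
    """Examples contributing to one optimizer step across all workers."""
    return per_device * grad_accum * world_size


def steps_per_epoch(num_examples: int, per_device: int, grad_accum: int, world_size: int) -> int:
    """Approximate optimizer steps per epoch (a final partial batch counts as one step)."""
    return math.ceil(num_examples / global_batch_size(per_device, grad_accum, world_size))


if __name__ == "__main__":
    gbs = global_batch_size(per_device=8, grad_accum=2, world_size=4)
    steps = steps_per_epoch(10_000, per_device=8, grad_accum=2, world_size=4)
    print(f"global batch: {gbs}, steps/epoch: {steps}")
```

Adding GPUs therefore shortens each epoch in optimizer steps, which is why learning-rate and warmup settings often need revisiting when scaling out.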

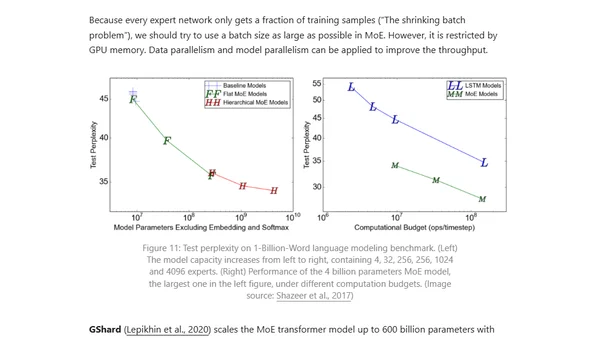

Explores parallelism techniques and memory optimization strategies for training massive neural networks across multiple GPUs.
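The summary does not list the specific techniques, so as one representative example (an assumption, not necessarily the article's focus) the sketch below estimates per-GPU memory for model states under ZeRO-style partitioning, as implemented in DeepSpeed. It uses the ZeRO paper's accounting for mixed-precision Adam: 2 bytes per parameter for fp16 weights, 2 for fp16 gradients, and K = 12 for optimizer state (fp32 master weights, momentum, variance). Activations, buffers, and fragmentation are ignored, so real usage is higher.

```python
# Sketch: per-GPU memory (GB) for model states with ZeRO stages 0-3.
# Assumption: mixed-precision Adam with the ZeRO paper's byte counts;
# activation memory is not modeled.

def zero_memory_gb(num_params: float, num_gpus: int, stage: int, k: int = 12) -> float:
    weights = 2 * num_params  # fp16 parameters
    grads = 2 * num_params    # fp16 gradients
    optim = k * num_params    # fp32 master weights + Adam moments
    if stage == 0:            # plain data parallelism: everything replicated
        total = weights + grads + optim
    elif stage == 1:          # partition optimizer states
        total = weights + grads + optim / num_gpus
    elif stage == 2:          # also partition gradients
        total = weights + (grads + optim) / num_gpus
    elif stage == 3:          # also partition parameters
        total = (weights + grads + optim) / num_gpus
    else:
        raise ValueError("stage must be 0, 1, 2, or 3")
    return total / 1e9


if __name__ == "__main__":
    for s in range(4):
        print(f"stage {s}: {zero_memory_gb(7.5e9, 64, s):.2f} GB per GPU")
```

For a 7.5B-parameter model on 64 GPUs this reproduces the ZeRO paper's figures of roughly 120, 31.4, 16.6, and 1.9 GB per GPU, showing why partitioning, not just replication, is needed once models outgrow a single device.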

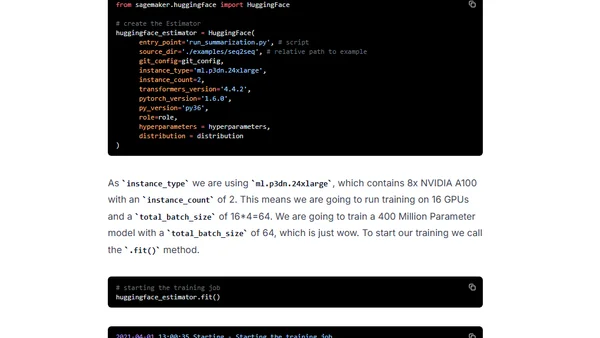

A tutorial on using Hugging Face Transformers and Amazon SageMaker for distributed training of BART/T5 models on a text summarization task.
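In distributed data-parallel training such as the summarization setup described above, each worker must see a disjoint slice of the dataset. The sketch below is modeled on the non-shuffled behaviour of PyTorch's `DistributedSampler` with `drop_last=False`: it pads the index list by wrapping around to the start so it divides evenly, then gives each rank every `world_size`-th index. The helper `shard_indices` is hypothetical and is not a SageMaker or Transformers API. Real samplers also shuffle with a per-epoch seed before sharding.

```python
import math

# Sketch: split dataset indices across data-parallel workers.
# Hypothetical helper modeled on DistributedSampler (no shuffling, drop_last=False).

def shard_indices(num_examples: int, rank: int, world_size: int) -> list[int]:
    if num_examples <= 0:
        raise ValueError("num_examples must be positive")
    if not 0 <= rank < world_size:
        raise ValueError("rank must be in [0, world_size)")
    per_rank = math.ceil(num_examples / world_size)
    total = per_rank * world_size
    indices = list(range(num_examples))
    padding = total - num_examples
    # Repeat indices from the start so every rank gets the same number of samples.
    indices += (indices * math.ceil(padding / num_examples))[:padding]
    # Strided slice: rank r takes positions r, r + world_size, r + 2 * world_size, ...
    return indices[rank:total:world_size]


if __name__ == "__main__":
    for r in range(4):
        print(r, shard_indices(10, r, 4))
```

Equal shard sizes keep workers in lockstep during gradient all-reduce; the cost is that a few examples are seen twice per epoch when the dataset size is not a multiple of the worker count.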