How to Train Really Large Models on Many GPUs?

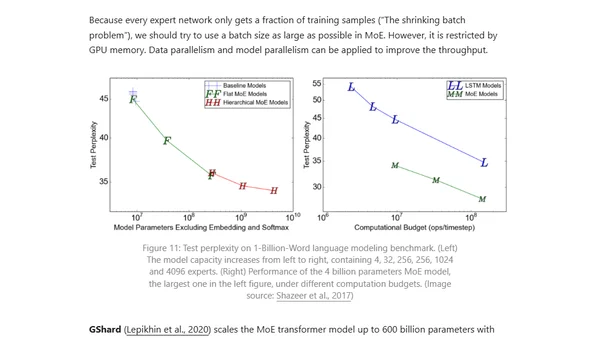

This technical article details the challenges of training large neural networks that exceed the memory of a single GPU. It explains parallelism paradigms such as data parallelism and model parallelism, synchronization methods (bulk synchronous parallel, BSP, and asynchronous parallel, ASP), and memory-saving designs for distributing training efficiently across many GPUs.
