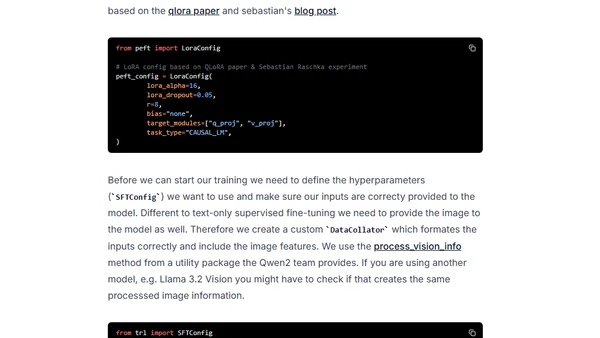

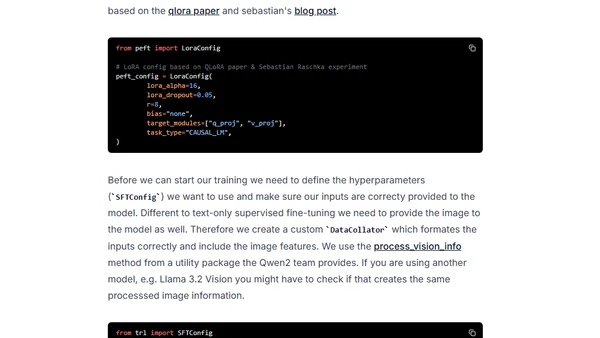

How to Fine-Tune Multimodal Models or VLMs with Hugging Face TRL

A technical guide on fine-tuning Vision-Language Models (VLMs) using Hugging Face's TRL library for custom applications like image-to-text generation.

Philipp Schmid is a Staff Engineer at Google DeepMind, building AI Developer Experience and DevRel initiatives. He specializes in LLMs, RLHF, and making advanced AI accessible to developers worldwide.

183 articles from this blog

A technical guide on fine-tuning Vision-Language Models (VLMs) using Hugging Face's TRL library for custom applications like image-to-text generation.

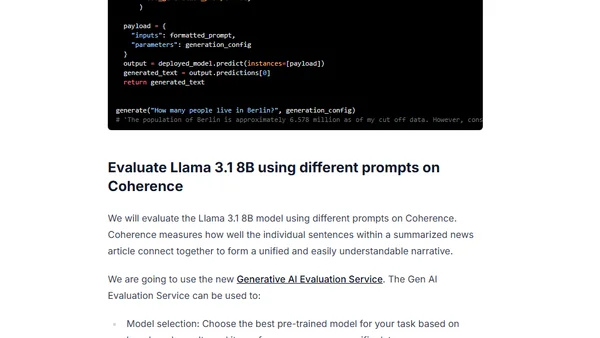

A technical guide on using Google's Vertex AI Gen AI Evaluation Service with Gemini to evaluate open LLM models like Llama 3.1.

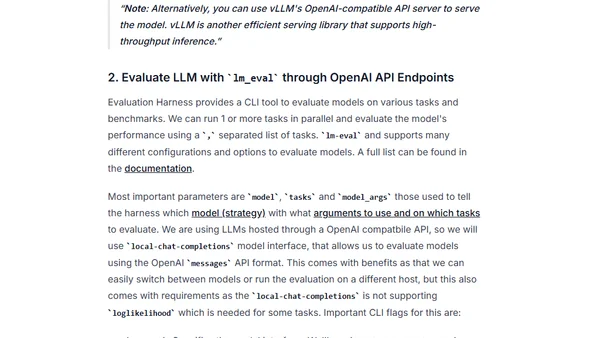

A guide to evaluating Large Language Models (LLMs) using the Evaluation Harness framework and optimized serving tools like Hugging Face TGI and vLLM.

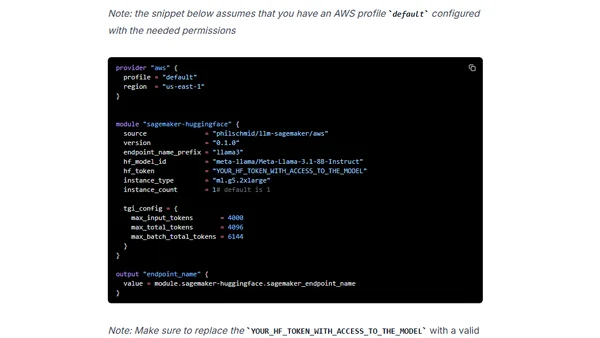

A guide to deploying open-source LLMs like Llama 3 to Amazon SageMaker using Terraform for Infrastructure as Code.

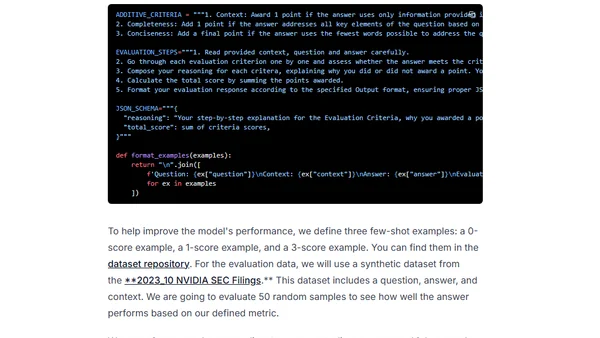

A guide to simplifying LLM evaluation workflows using clear metrics, chain-of-thought, and few-shot prompts, inspired by real-world examples.

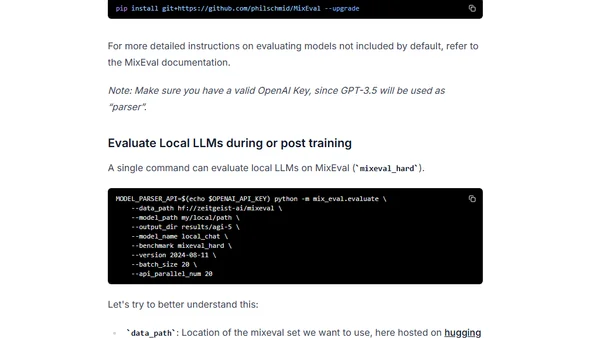

Introduces MixEval, a cost-effective LLM benchmark with high correlation to Chatbot Arena, for evaluating open-source language models.

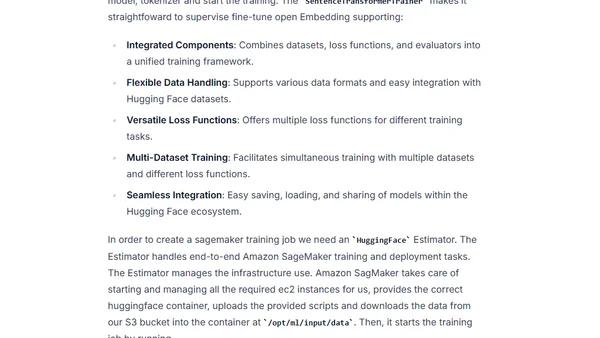

A guide to fine-tuning and deploying custom embedding models for RAG applications on Amazon SageMaker using Sentence Transformers v3.

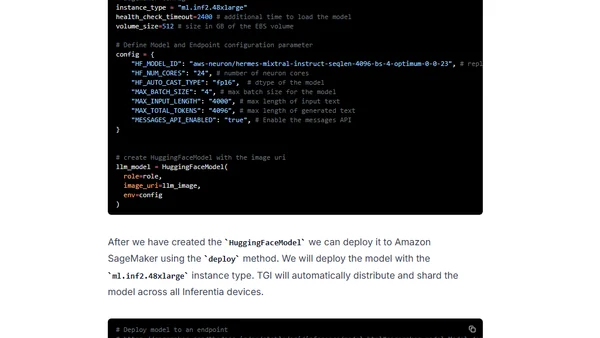

A technical guide on deploying the Mixtral 8x7B LLM on AWS Inferentia2 using Hugging Face Optimum and Amazon SageMaker.

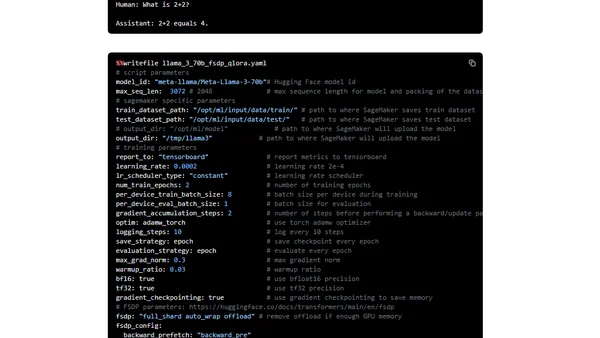

A technical guide on fine-tuning the Llama 3 LLM using PyTorch FSDP and Q-Lora on Amazon SageMaker for efficient training.

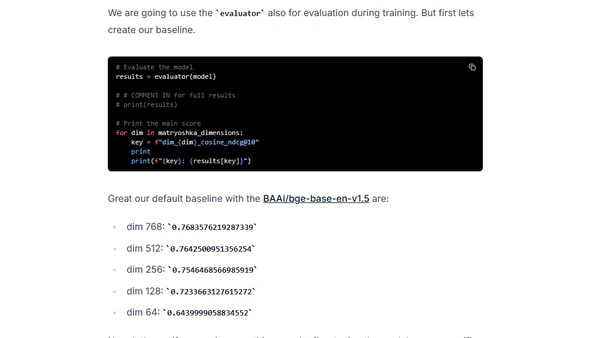

A guide to fine-tuning embedding models for RAG applications using Sentence Transformers 3, featuring Matryoshka Representation Learning for efficiency.

Analyzes the complex total cost of ownership for deploying generative AI models in production, beyond just raw compute expenses.

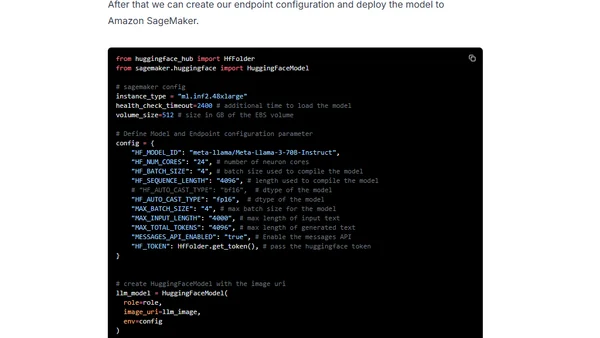

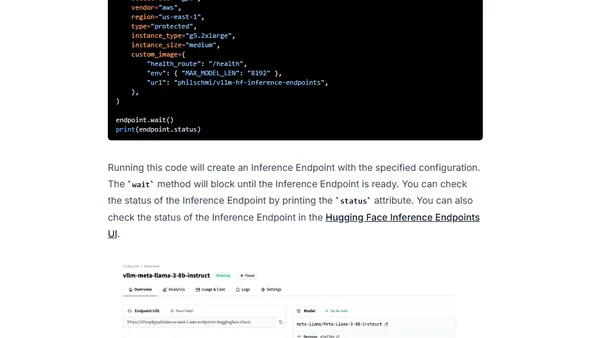

A technical guide on deploying Meta's Llama 3 70B Instruct model on AWS Inferentia2 using Hugging Face Optimum and Amazon SageMaker.

A tutorial on deploying open-source large language models (LLMs) like Llama 3 using the vLLM framework on Hugging Face Inference Endpoints.

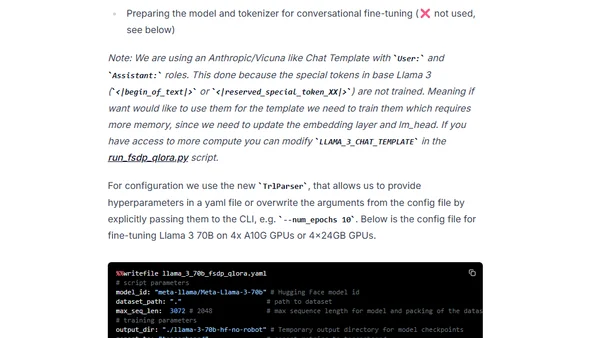

A technical guide on fine-tuning the Llama 3 70B model using PyTorch FSDP and Q-Lora for efficient training on limited GPU hardware.

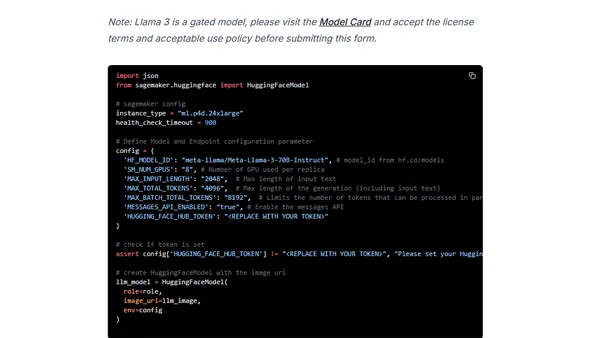

A technical guide on deploying Meta's Llama 3 70B model on Amazon SageMaker using the Hugging Face LLM DLC and Text Generation Inference.

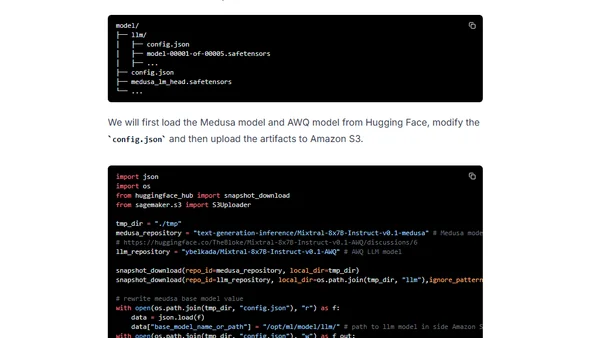

A technical guide on accelerating the Mixtral 8x7B LLM using speculative decoding (Medusa) and quantization (AWQ) for deployment on Amazon SageMaker.

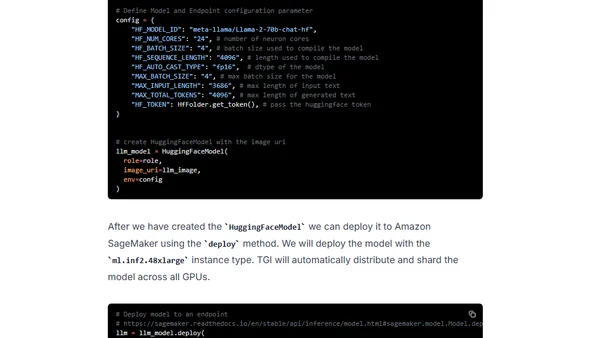

A technical guide on deploying Meta's Llama 2 70B large language model on AWS Inferentia2 hardware using Hugging Face Optimum and SageMaker.

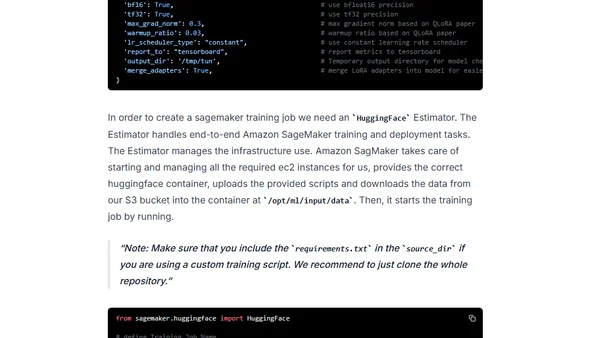

A technical guide on fine-tuning and evaluating open-source Large Language Models (LLMs) using Amazon SageMaker and Hugging Face libraries.

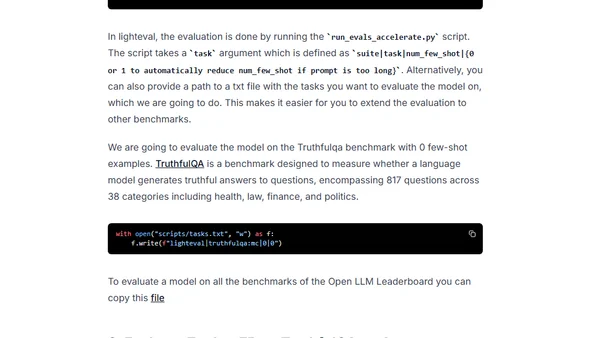

A tutorial on evaluating Large Language Models using Hugging Face's Lighteval library on Amazon SageMaker, focusing on benchmarks like TruthfulQA.

A technical guide on fine-tuning Google's Gemma open LLMs using the ChatML format and Hugging Face's TRL library for efficient training on consumer GPUs.