Efficiently fine-tune Llama 3 with PyTorch FSDP and Q-Lora

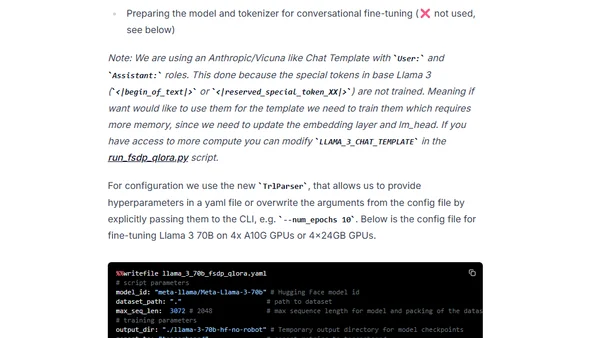

This article provides a step-by-step tutorial for efficiently fine-tuning large language models such as Meta's Llama 3 70B. It explains how to use PyTorch FSDP (Fully Sharded Data Parallel) and Q-Lora, combined with Hugging Face's TRL and PEFT libraries, to reduce memory requirements and enable training on consumer-grade GPUs. The guide covers environment setup, dataset preparation, and the fine-tuning process.
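To make the memory argument concrete, here is a rough back-of-the-envelope sketch of why 4-bit quantization plus sharding matters for a 70B-parameter model. It counts weight storage only and ignores activations, LoRA adapter weights, optimizer state, and quantization overhead, so real usage will be higher. The GPU count of 4 and the helper names are assumptions chosen for illustration, not values taken from the tutorial.

```python
def weight_memory_gib(num_params: float, bits_per_param: int) -> float:
    """Memory needed to store the weights alone, in GiB."""
    return num_params * bits_per_param / 8 / 2**30


def per_gpu_gib(total_gib: float, num_gpus: int) -> float:
    """Idealized even split of sharded weights across GPUs (FSDP-style)."""
    return total_gib / num_gpus


PARAMS_70B = 70e9   # approximate parameter count of Llama 3 70B
NUM_GPUS = 4        # hypothetical GPU count, for illustration only

bf16_total = weight_memory_gib(PARAMS_70B, 16)  # 16-bit weights
q4_total = weight_memory_gib(PARAMS_70B, 4)     # 4-bit quantized weights
q4_per_gpu = per_gpu_gib(q4_total, NUM_GPUS)    # 4-bit weights, sharded

print(f"16-bit weights, one device: {bf16_total:.1f} GiB")
print(f"4-bit weights, one device:  {q4_total:.1f} GiB")
print(f"4-bit weights per GPU ({NUM_GPUS} GPUs): {q4_per_gpu:.1f} GiB")
```

Under these assumptions, quantization cuts weight storage by a factor of four, and sharding then divides what remains across devices. Together they are what bring the per-GPU footprint into the range of smaller cards.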
