Quoting Nicholas Carlini

Explores how LLMs could enable malware to find personal secrets for blackmail, moving beyond simple ransomware attacks.

Martin Fowler's blog fragments on LLM browser security, AI-assisted coding debates, and the literary significance of the Doonesbury comic strip.

Explores the unique security risks of agentic AI systems, focusing on the 'Lethal Trifecta' of vulnerabilities and proposed mitigation strategies.

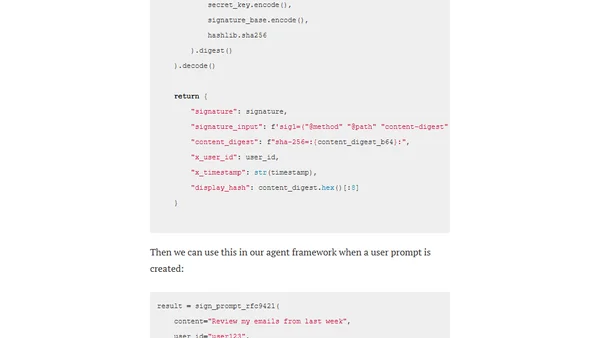

Explores the A2AS framework and Agentgateway as a security approach for mitigating prompt injection attacks in AI/LLM systems by embedding behavioral contracts and cryptographic verification.

Explores strategies and Azure OpenAI features to mitigate inappropriate use and enhance safety in AI chatbot implementations.