Llama 2 on Amazon SageMaker a Benchmark

A benchmark analysis of deploying Meta's Llama 2 models on Amazon SageMaker using Hugging Face's LLM Inference Container, evaluating cost, latency, and throughput.

A benchmark analysis of deploying Meta's Llama 2 models on Amazon SageMaker using Hugging Face's LLM Inference Container, evaluating cost, latency, and throughput.

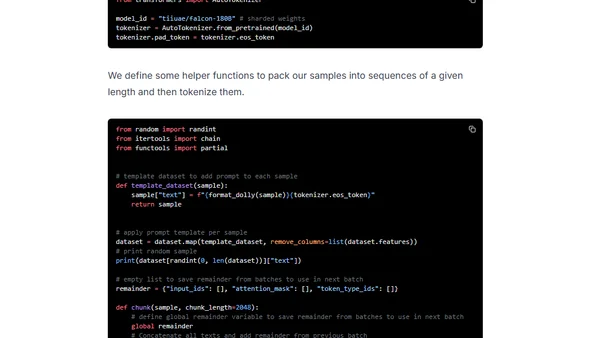

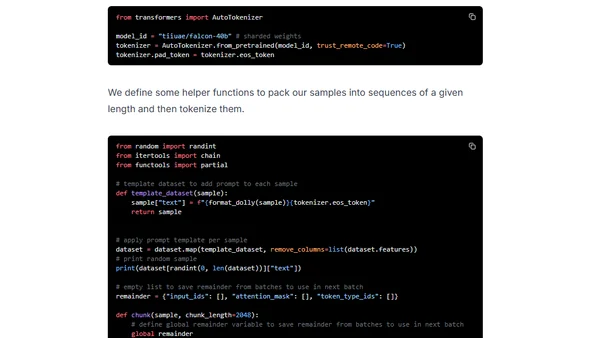

A technical guide on fine-tuning the massive Falcon 180B language model using QLoRA and Flash Attention on Amazon SageMaker.

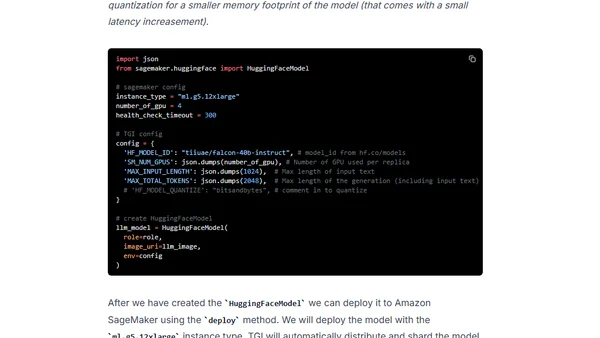

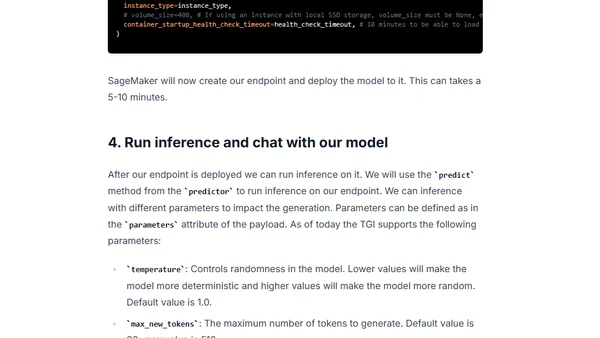

A technical guide on deploying the Falcon 180B open-source large language model to Amazon SageMaker using the Hugging Face LLM DLC.

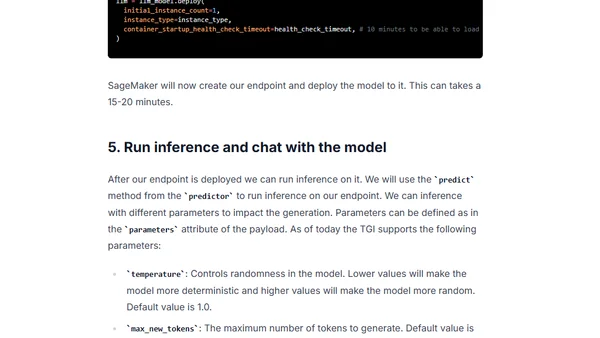

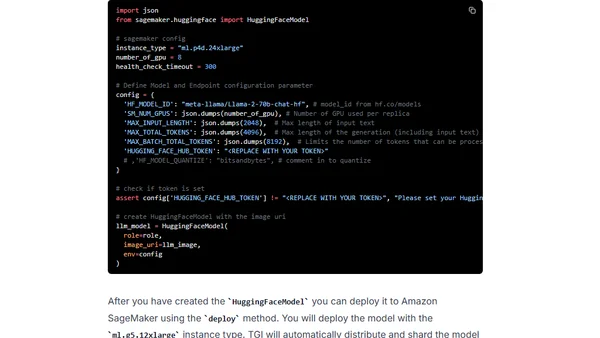

A technical guide on deploying Meta's Llama 2 large language models (7B, 13B, 70B) on Amazon SageMaker using the Hugging Face LLM DLC.

A technical guide on fine-tuning LLaMA 2 models (7B to 70B) using QLoRA and PEFT on Amazon SageMaker for efficient large language model adaptation.

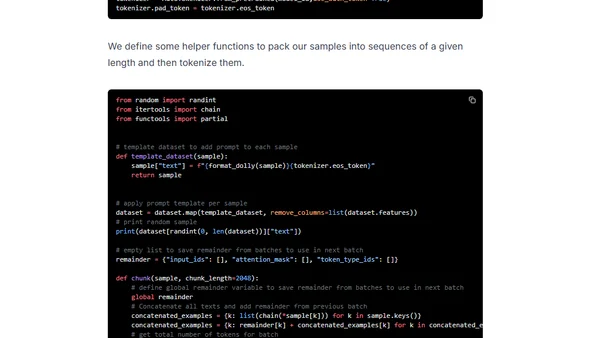

A technical guide on using QLoRA to efficiently fine-tune the Falcon 40B large language model on Amazon SageMaker.

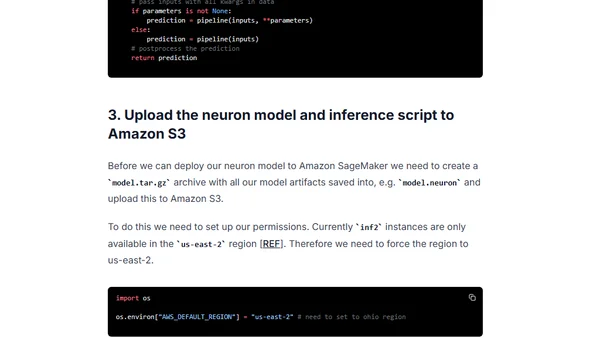

A tutorial on optimizing and deploying a BERT model for low-latency inference using AWS Inferentia2 accelerators and Amazon SageMaker.

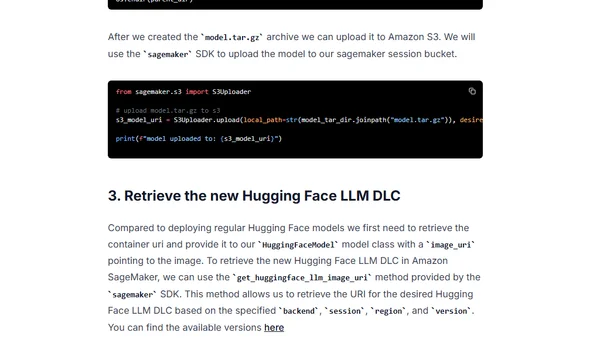

A technical guide on deploying open-source Large Language Models (LLMs) from Amazon S3 to Amazon SageMaker using Hugging Face's LLM Inference Container within a VPC.

A technical guide on deploying the open-source Falcon 7B and 40B large language models to Amazon SageMaker using the Hugging Face LLM Inference Container.

Guide to deploying open-source LLMs like BLOOM and Open Assistant to Amazon SageMaker using Hugging Face's new LLM Inference Container.

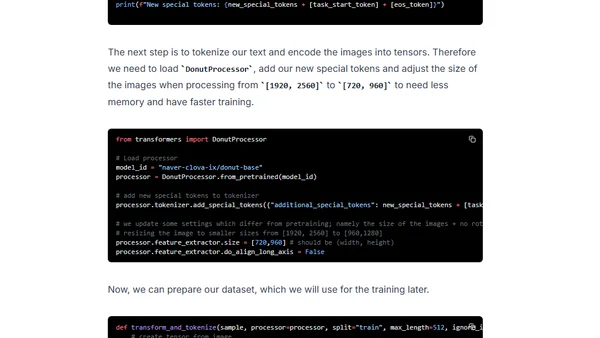

Tutorial on fine-tuning and deploying the Donut model for OCR-free document understanding using Hugging Face and Amazon SageMaker.

A technical tutorial on fine-tuning a 20B+ parameter LLM using PyTorch FSDP and Hugging Face on Amazon SageMaker's multi-GPU infrastructure.

A technical guide on fine-tuning the BLOOMZ language model using PEFT and LoRA techniques, then deploying it on Amazon SageMaker.

A technical guide on deploying Google's FLAN-UL2 20B large language model for real-time inference using Amazon SageMaker and Hugging Face.

Guide to fine-tuning the large FLAN-T5 XXL model using Amazon SageMaker managed training and DeepSpeed for optimization.

A technical guide on deploying the FLAN-T5-XXL large language model for real-time inference using Amazon SageMaker and Hugging Face.

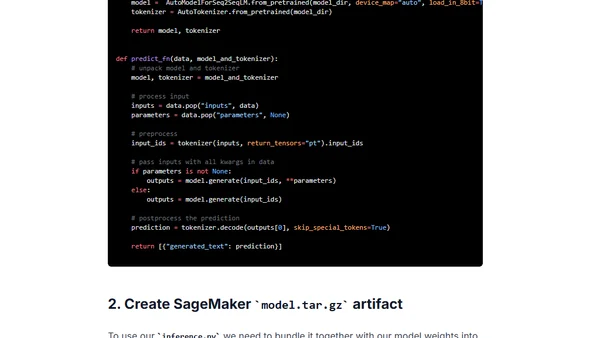

A technical guide on deploying the Stable Diffusion text-to-image model to Amazon SageMaker for real-time inference using the Hugging Face Diffusers library.

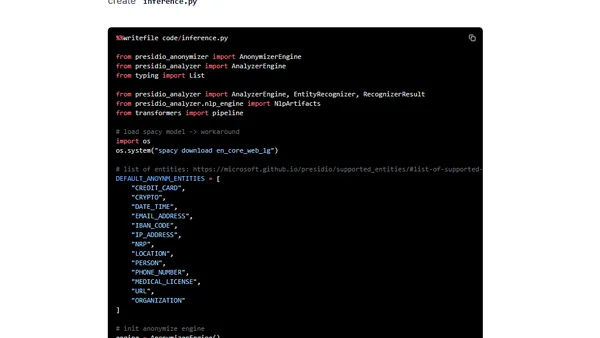

A technical guide on using Hugging Face Transformers and Amazon SageMaker to detect and anonymize Personally Identifiable Information (PII) in text.

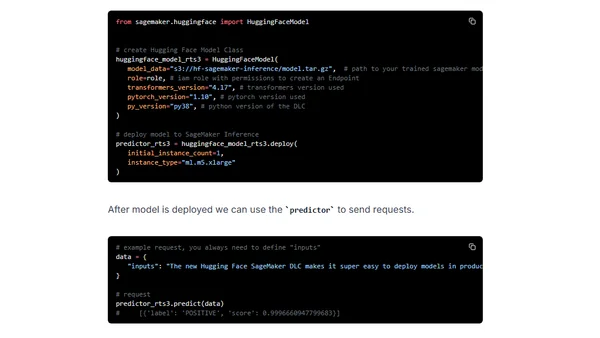

Compares Amazon SageMaker's four inference options for deploying Hugging Face Transformers models, covering latency, use cases, and pricing.

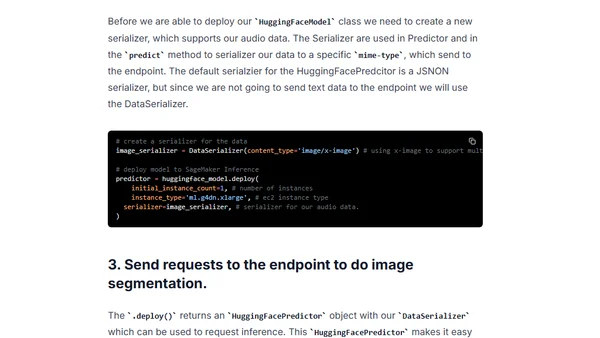

A technical guide on using Hugging Face's SegFormer model with Amazon SageMaker for semantic image segmentation tasks.