Introducing EasyLLM - streamline open LLMs

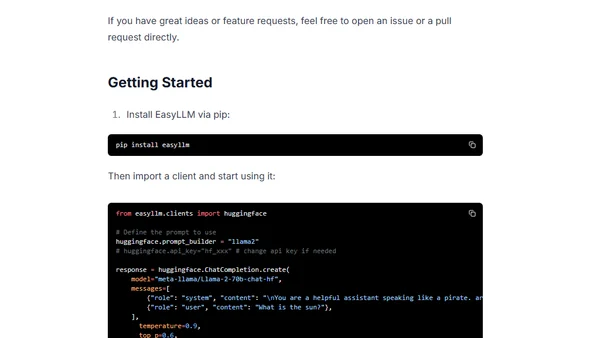

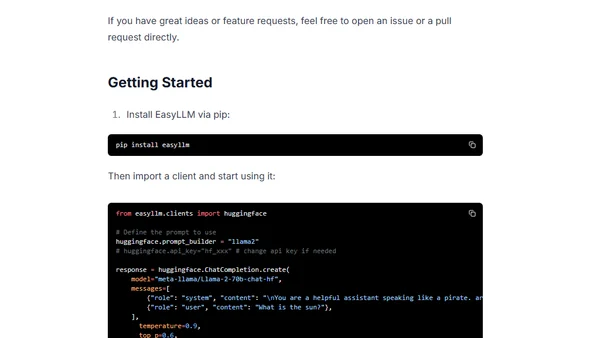

Introduces EasyLLM, an open-source Python package for streamlining work with open large language models via OpenAI-compatible clients.

Philipp Schmid is a Staff Engineer at Google DeepMind, building AI Developer Experience and DevRel initiatives. He specializes in LLMs, RLHF, and making advanced AI accessible to developers worldwide.

A technical guide on instruction-tuning Meta's Llama 2 model to generate instructions from inputs, enabling personalized LLM applications.

A comprehensive guide to Meta's Llama 2 open-source language model, covering resources, playgrounds, benchmarks, and technical details.

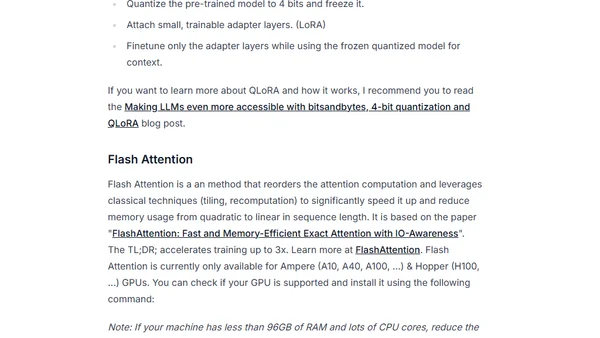

A technical guide on fine-tuning Llama 2 models (7B to 70B) using QLoRA and PEFT on Amazon SageMaker for efficient large language model adaptation.

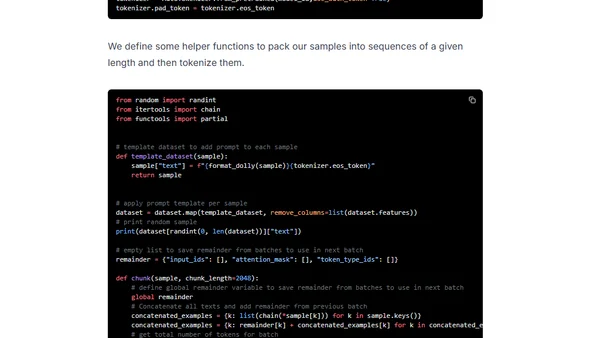

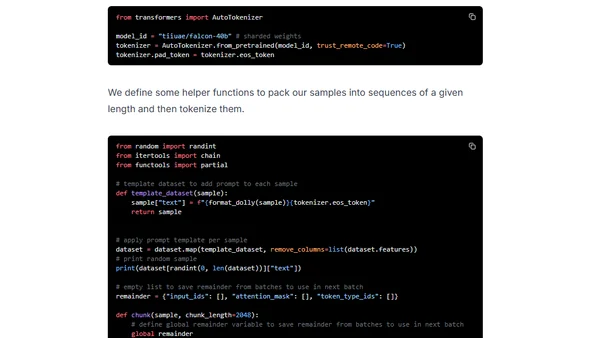

A technical guide on using QLoRA to efficiently fine-tune the Falcon 40B large language model on Amazon SageMaker.

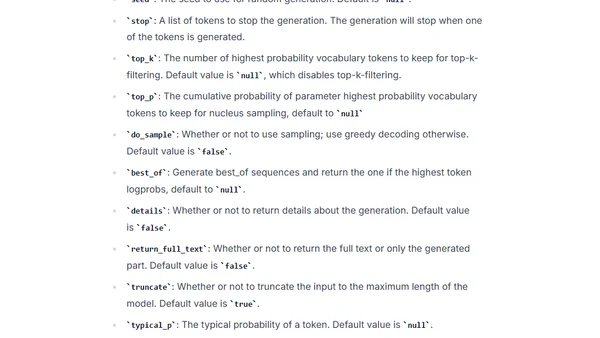

A guide to deploying open-source Large Language Models (LLMs) like Falcon using Hugging Face's managed Inference Endpoints service.

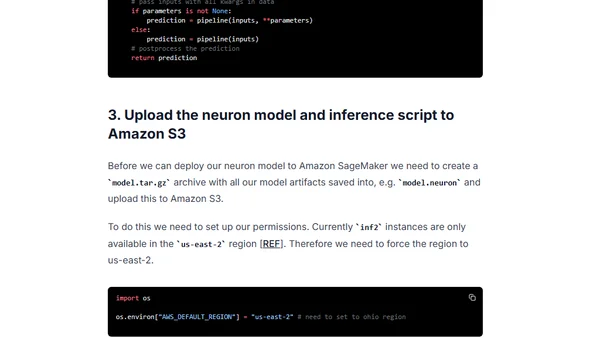

A tutorial on optimizing and deploying a BERT model for low-latency inference using AWS Inferentia2 accelerators and Amazon SageMaker.

A technical guide on deploying open-source Large Language Models (LLMs) from Amazon S3 to Amazon SageMaker using Hugging Face's LLM Inference Container within a VPC.

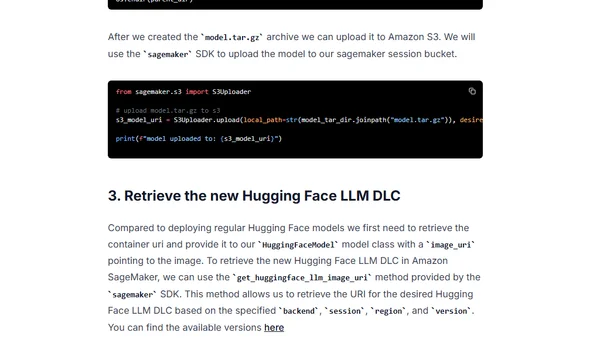

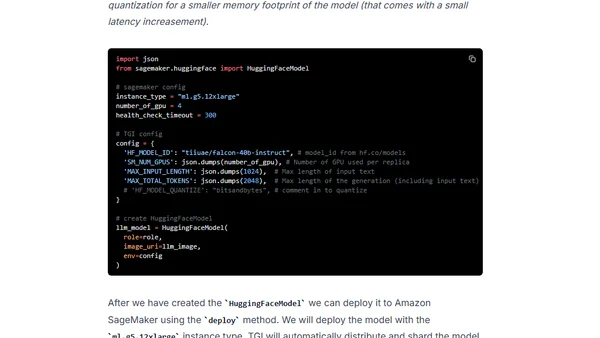

A technical guide on deploying the open-source Falcon 7B and 40B large language models to Amazon SageMaker using the Hugging Face LLM Inference Container.

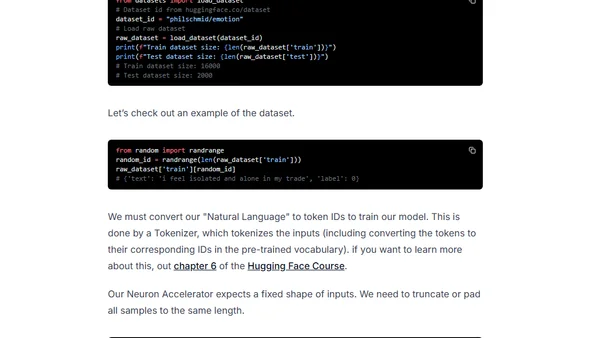

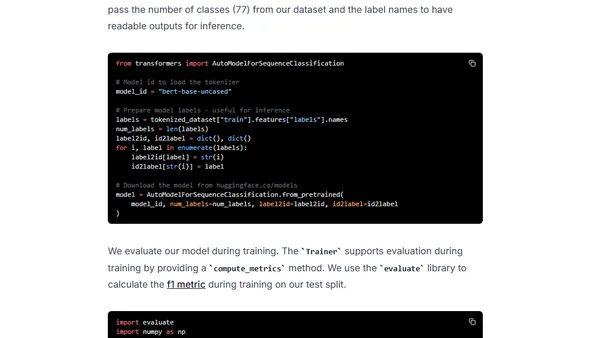

A tutorial on fine-tuning a BERT model for text classification using AWS Trainium instances and Hugging Face Transformers.

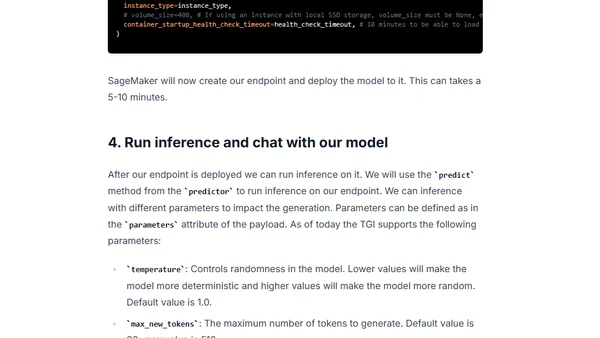

Guide to deploying open-source LLMs like BLOOM and Open Assistant to Amazon SageMaker using Hugging Face's new LLM Inference Container.

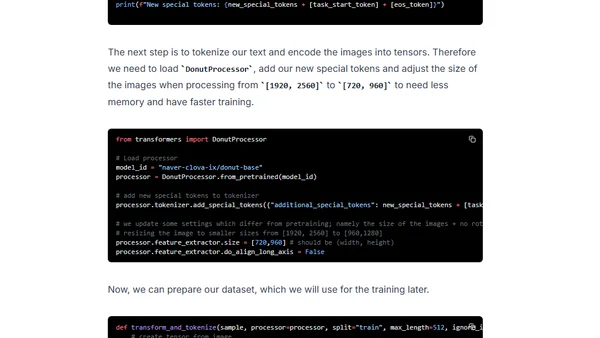

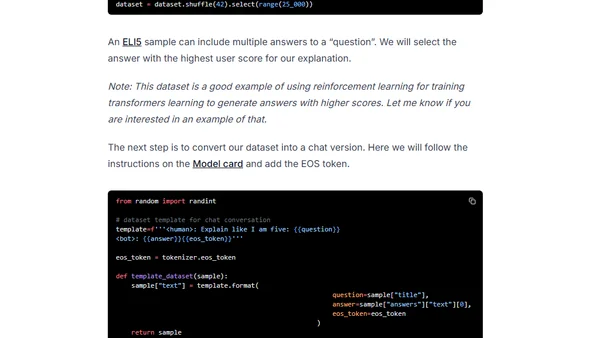

Tutorial on fine-tuning and deploying the Donut model for OCR-free document understanding using Hugging Face and Amazon SageMaker.

A technical tutorial on fine-tuning a 20B+ parameter LLM using PyTorch FSDP and Hugging Face on Amazon SageMaker's multi-GPU infrastructure.

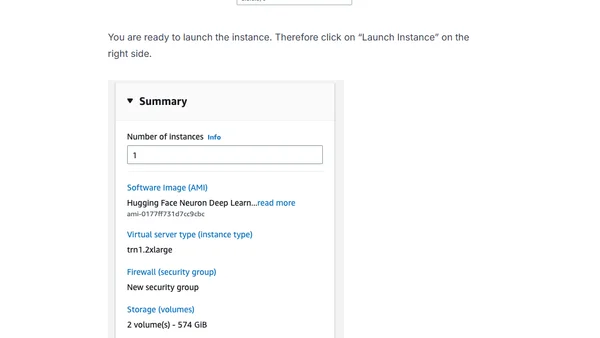

A guide to setting up an AWS Trainium instance using the Hugging Face Neuron Deep Learning AMI to fine-tune a BERT model for text classification.

A technical guide on fine-tuning the BLOOMZ language model using PEFT and LoRA techniques, then deploying it on Amazon SageMaker.

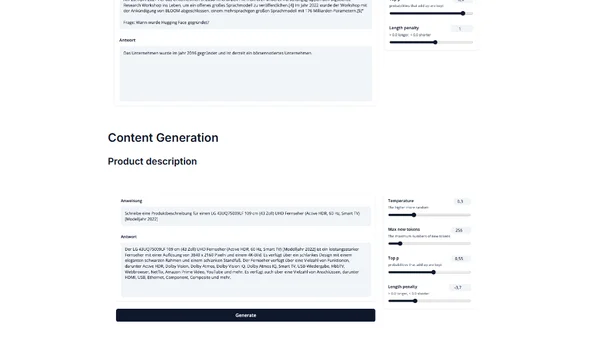

Introduces IGEL, an instruction-tuned German large language model based on BLOOM, for NLP tasks like translation and QA.

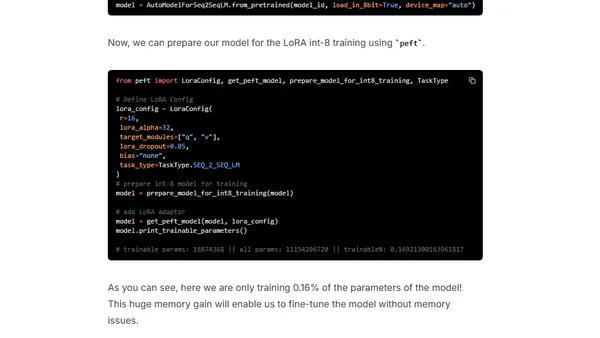

A technical guide on fine-tuning the large FLAN-T5 XXL model efficiently using LoRA and Hugging Face libraries on a single GPU.

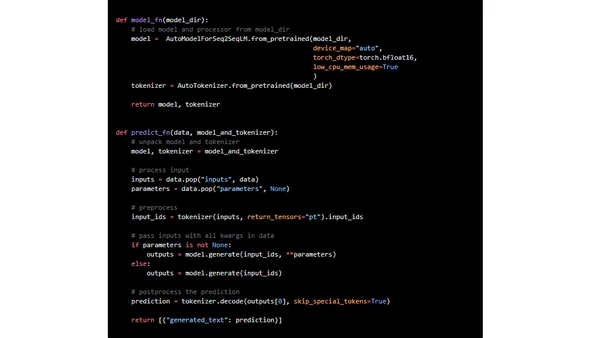

A technical guide on deploying Google's FLAN-UL2 20B large language model for real-time inference using Amazon SageMaker and Hugging Face.

A tutorial on fine-tuning a BERT model for text classification using the new PyTorch 2.0 framework and the Hugging Face Transformers library.

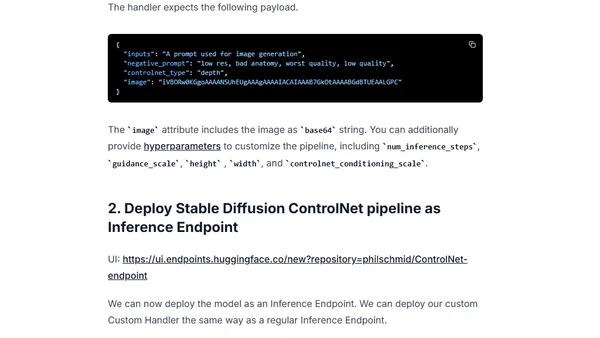

Learn how to deploy and use ControlNet for controlled text-to-image generation via Hugging Face Inference Endpoints as a scalable API.