How to Fine-Tune LLMs in 2024 with Hugging Face

A practical guide to fine-tuning open-source large language models (LLMs) using Hugging Face's TRL and Transformers libraries in 2024.

Philipp Schmid is a Staff Engineer at Google DeepMind, building AI Developer Experience and DevRel initiatives. He specializes in LLMs, RLHF, and making advanced AI accessible to developers worldwide.

183 articles from this blog

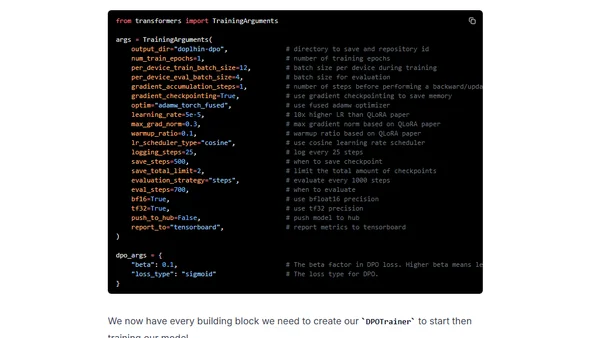

A technical guide on using Direct Preference Optimization (DPO) with Hugging Face's TRL library to align and improve open-source large language models in 2024.

Guide to scaling LLM inference on Amazon SageMaker using new multi-replica endpoints for improved throughput and cost efficiency.

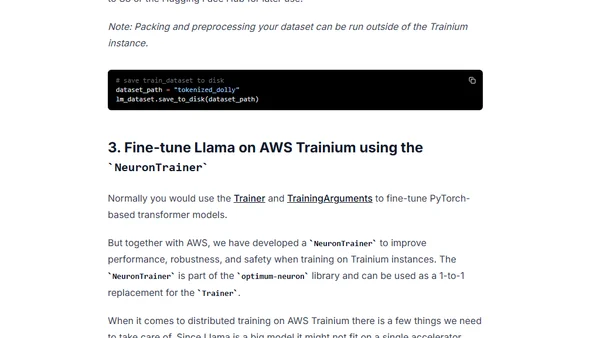

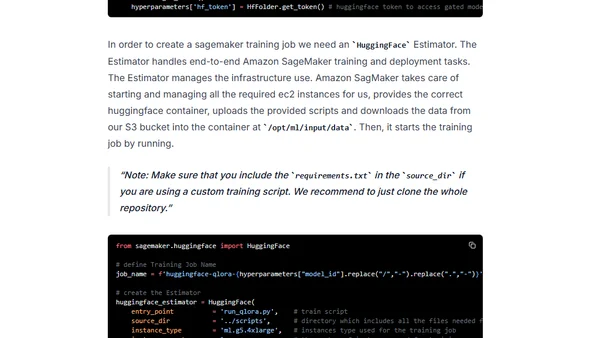

A technical tutorial on fine-tuning the Llama 2 7B large language model using AWS Trainium instances and Hugging Face libraries.

Learn to programmatically manage Hugging Face Inference Endpoints using the huggingface_hub Python library for automated model deployment.

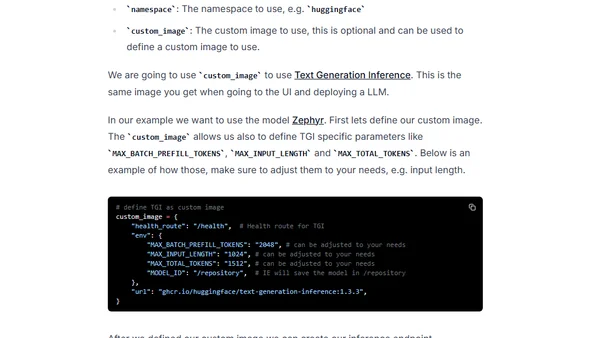

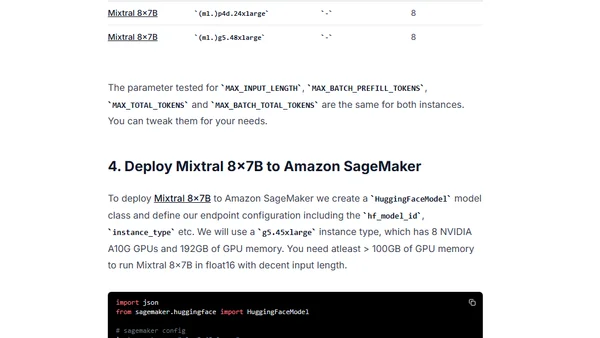

A technical guide on deploying the Mixtral 8x7B open-source LLM from Mistral AI to Amazon SageMaker using the Hugging Face LLM DLC.

Tutorial on deploying embedding models using AWS Inferentia2 and Amazon SageMaker for accelerated inference performance.

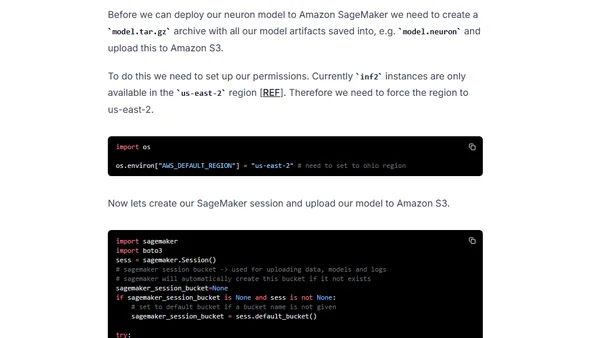

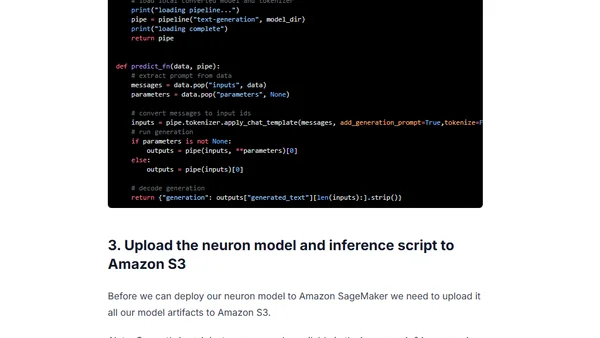

A tutorial on deploying Meta's Llama 2 7B model on AWS Inferentia2 using Amazon SageMaker and the optimum-neuron library.

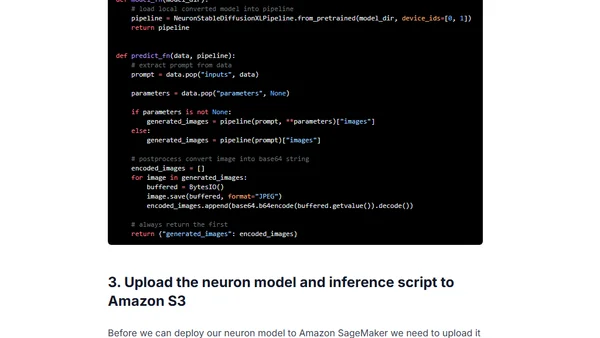

A tutorial on deploying Stable Diffusion XL for accelerated inference using AWS Inferentia2 and Amazon SageMaker.

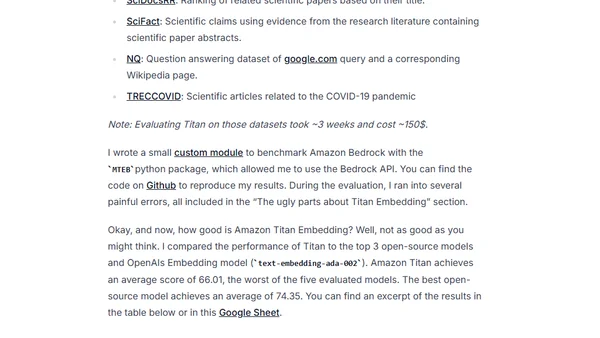

An evaluation of Amazon Titan Embeddings on the MTEB benchmark, analyzing its performance, use cases, and lack of transparency.

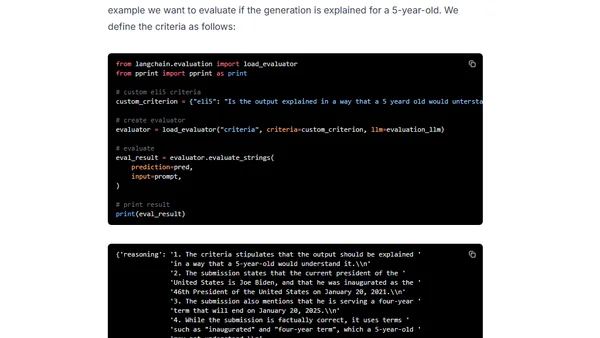

A hands-on guide to evaluating LLMs and RAG systems using LangChain and Hugging Face, covering criteria-based and pairwise evaluation methods.

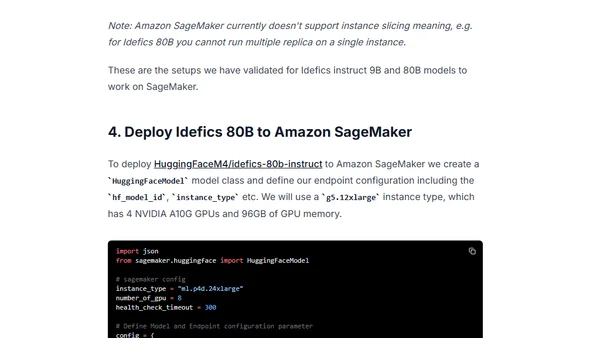

A technical guide on deploying Hugging Face's IDEFICS visual language models (9B & 80B parameters) to Amazon SageMaker using the LLM DLC.

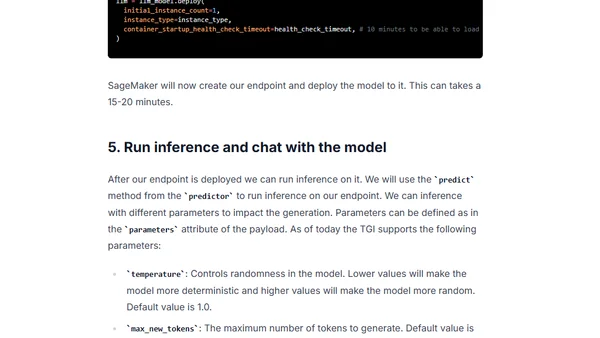

A technical guide on fine-tuning the Mistral 7B large language model using QLoRA and deploying it on Amazon SageMaker with Hugging Face tools.

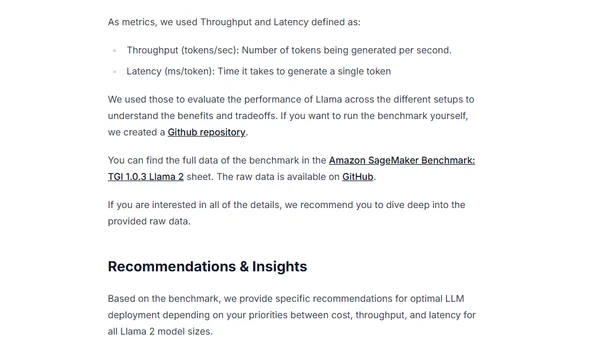

A benchmark analysis of deploying Meta's Llama 2 models on Amazon SageMaker using Hugging Face's LLM Inference Container, evaluating cost, latency, and throughput.

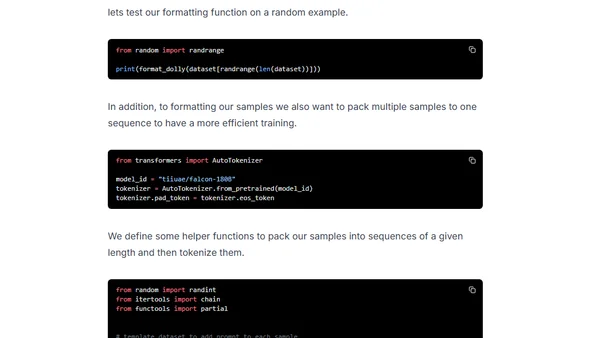

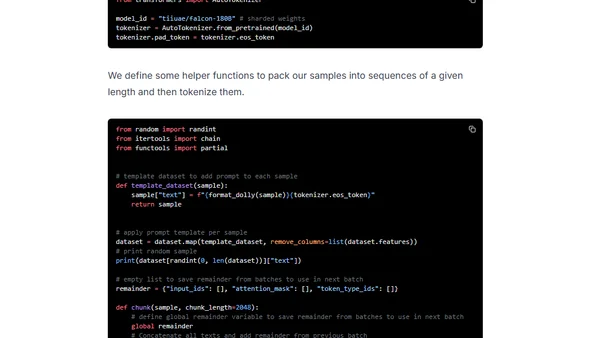

A technical guide on fine-tuning the massive Falcon 180B language model using DeepSpeed ZeRO, LoRA, and Flash Attention for efficient training.

A technical guide on fine-tuning the massive Falcon 180B language model using QLoRA and Flash Attention on Amazon SageMaker.

A technical guide on deploying the Falcon 180B open-source large language model to Amazon SageMaker using the Hugging Face LLM DLC.

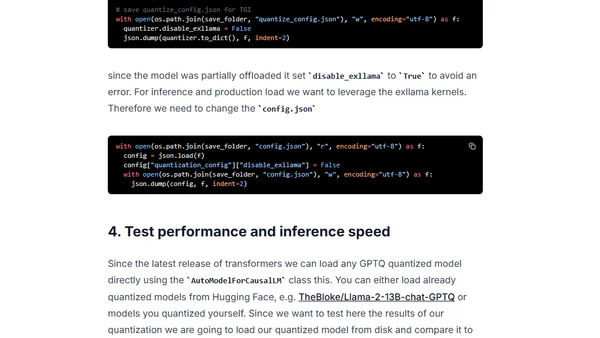

A guide to using GPTQ quantization with Hugging Face Optimum to compress open-source LLMs for efficient deployment on smaller hardware.

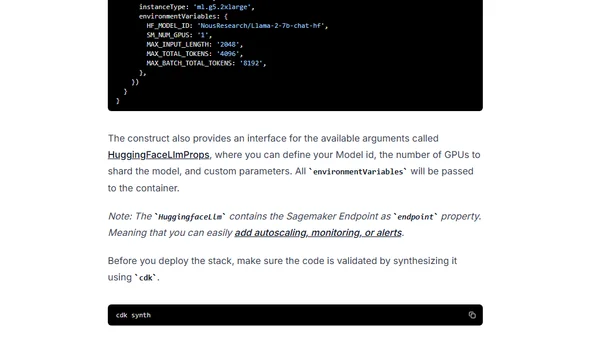

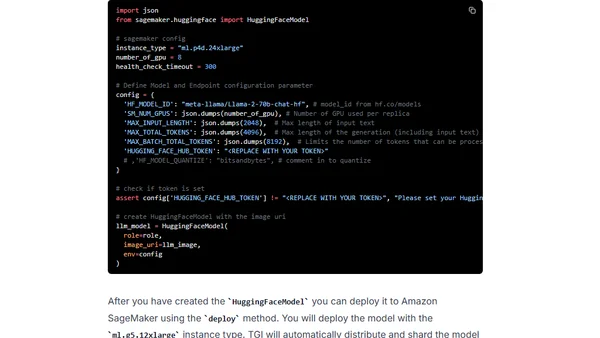

A technical guide on deploying open-source LLMs like Llama 2 using Infrastructure as Code with AWS CDK and the Hugging Face LLM construct.

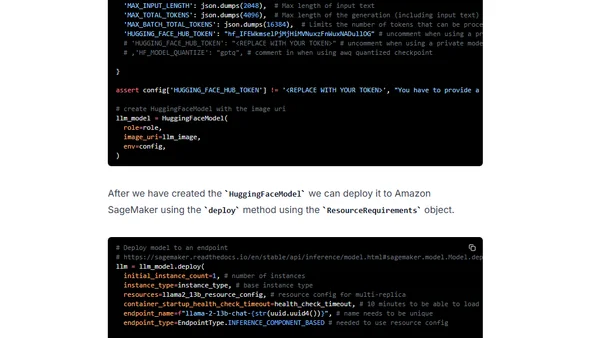

A technical guide on deploying Meta's Llama 2 large language models (7B, 13B, 70B) on Amazon SageMaker using the Hugging Face LLM DLC.