RLHF in 2024 with DPO and Hugging Face

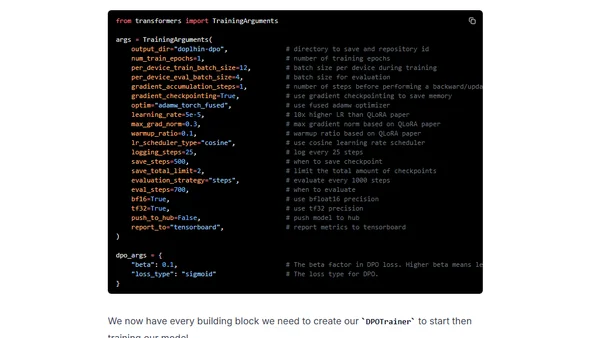

This article is a step-by-step tutorial on implementing Reinforcement Learning from Human Feedback (RLHF) with the Direct Preference Optimization (DPO) method. It covers setting up the development environment with PyTorch and the Hugging Face libraries, preparing a preference dataset, and aligning a fine-tuned Mistral 7B model with the DPOTrainer from TRL. It also discusses considerations for single-GPU setups and evaluation.
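To make the preference-dataset and DPO steps more concrete, here is a minimal sketch of the per-example DPO objective in plain Python. It is not code from the article: the example record, the log-probability values, and the `beta` default are illustrative assumptions. The `prompt`/`chosen`/`rejected` field names follow the layout commonly used for preference data with TRL, and in a real run the trainer computes the log-probabilities from the policy and reference models.

```python
import math

# Hypothetical preference record: one prompt with a preferred ("chosen")
# and a dispreferred ("rejected") completion.
example = {
    "prompt": "Explain what a GPU is in one sentence.",
    "chosen": "A GPU is a processor built for highly parallel computation.",
    "rejected": "It is a computer thing.",
}


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """Per-example DPO loss from summed sequence log-probabilities.

    loss = -log(sigmoid(beta * ((pi_c - ref_c) - (pi_r - ref_r))))
    """
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_logratio - rejected_logratio)
    # -log(sigmoid(margin)) equals softplus(-margin); this form avoids overflow.
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))


# Illustrative (made-up) log-probabilities for the record above.
# The policy favors the chosen answer more than the reference does,
# so the loss falls below log(2), its value when the margin is zero.
loss = dpo_loss(-5.0, -15.0, -10.0, -10.0, beta=0.1)
print(f"DPO loss: {loss:.4f}")
```

The loss shrinks as the policy raises the likelihood of the chosen completion relative to the rejected one, measured against the frozen reference model; `beta` controls how strongly the policy is allowed to drift from that reference.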
