Integrating Long-Term Memory with Gemini 2.5

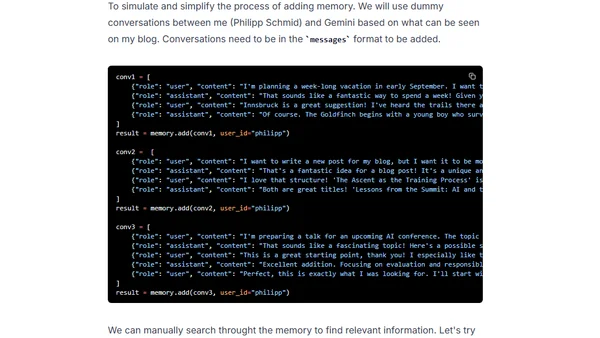

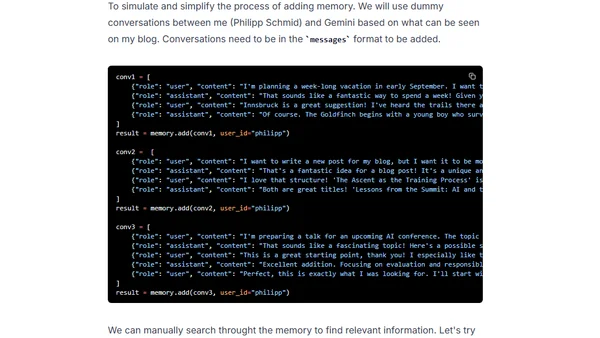

A guide to adding long-term memory to a Gemini 2.5 chatbot using the Mem0 library and vector databases for personalized AI interactions.

Philipp Schmid is a Staff Engineer at Google DeepMind, building AI Developer Experience and DevRel initiatives. He specializes in LLMs, RLHF, and making advanced AI accessible to developers worldwide.

183 articles from this blog

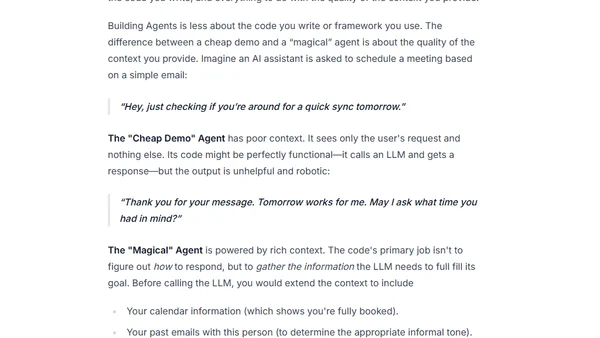

Explains why Context Engineering, not just prompt crafting, is the key skill for building effective AI agents and systems.

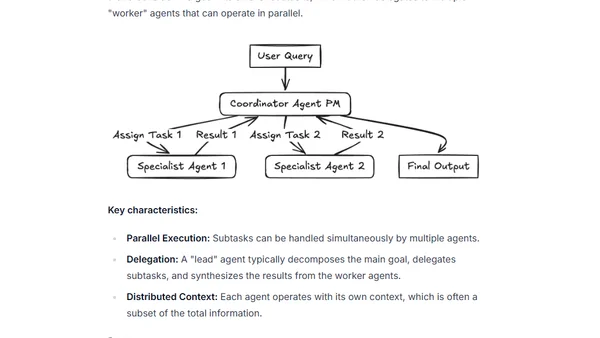

Explores the trade-offs between single-agent and multi-agent AI systems, discussing their characteristics, pros, and cons for different tasks.

Explores common design patterns for building AI agents and workflows, discussing when to use them and how to implement core concepts.

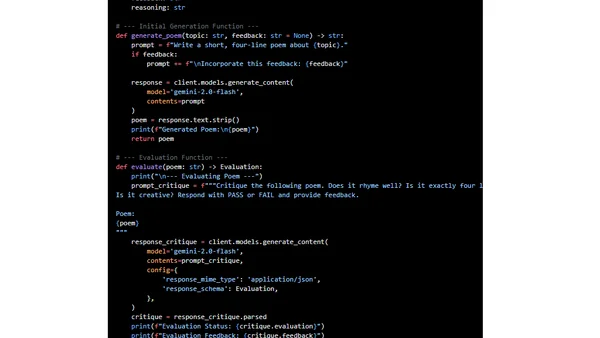

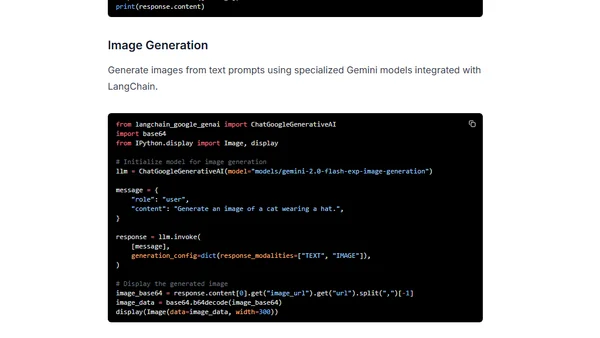

A technical cheatsheet for using Google's Gemini AI models with the LangChain framework, covering setup, chat models, prompt templates, and image inputs.

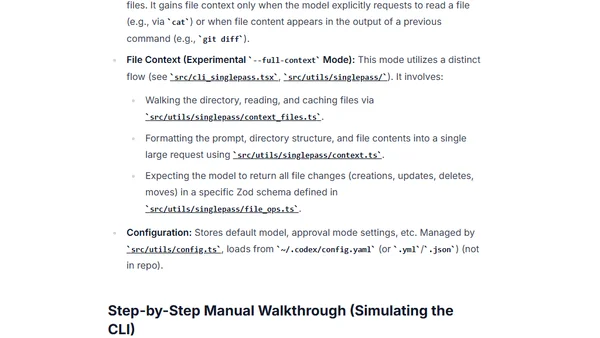

Explains the architecture and workflow of OpenAI's Codex CLI, a terminal-based AI tool for chat-driven software development.

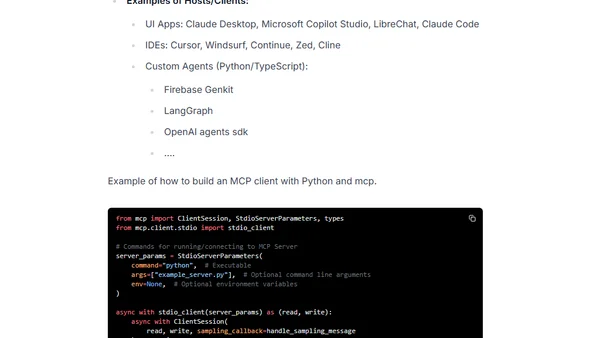

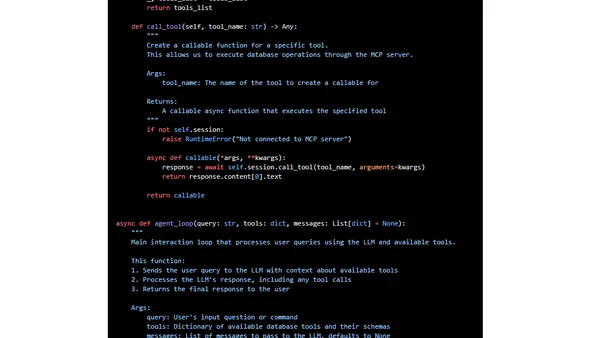

An overview of the Model Context Protocol (MCP), an open standard for connecting AI applications to external tools and data sources.

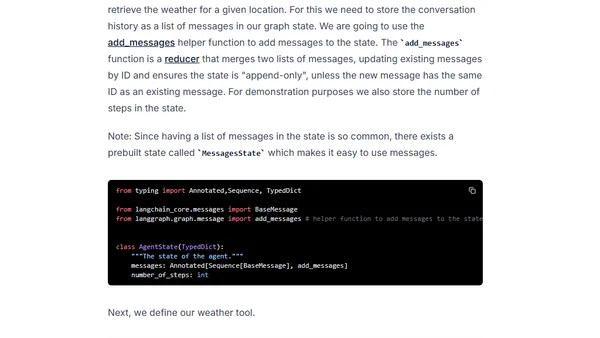

A tutorial on building a ReAct AI agent from scratch using Google's Gemini 2.5 Pro/Flash and the LangGraph framework for complex reasoning and tool use.

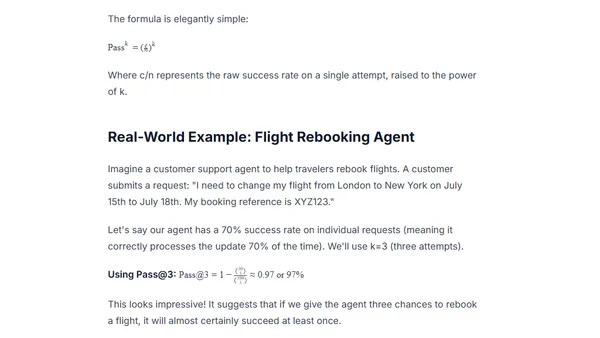

Explains the difference between Pass@k and Pass^k metrics for evaluating AI agent reliability, highlighting why consistency matters in production.

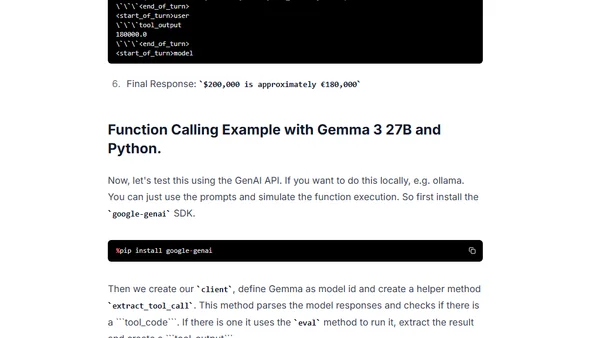

A tutorial on implementing function calling with Google's Gemma 3 27B LLM, showing how to connect it to external tools and APIs.

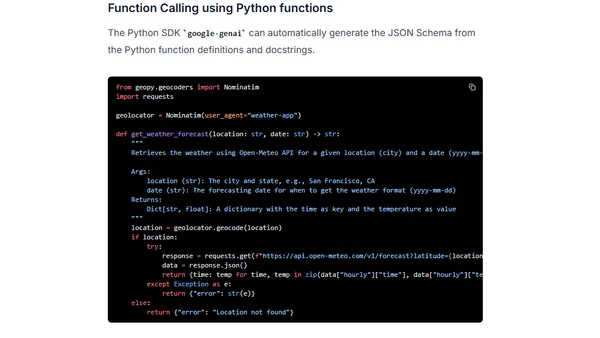

A practical guide to implementing function calling with Google's Gemini 2.0 Flash model, enabling LLMs to interact with external tools and APIs.

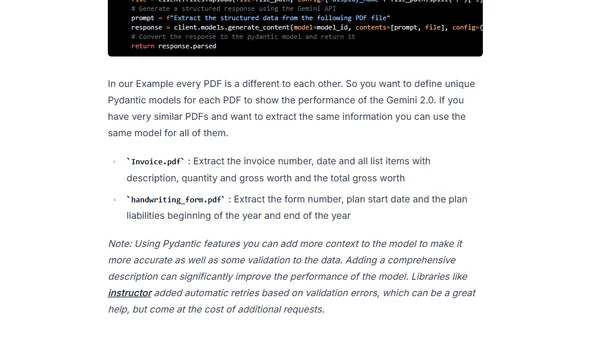

A tutorial on using Google's Gemini 2.0 AI models to extract structured data like invoice numbers and dates from PDF documents.

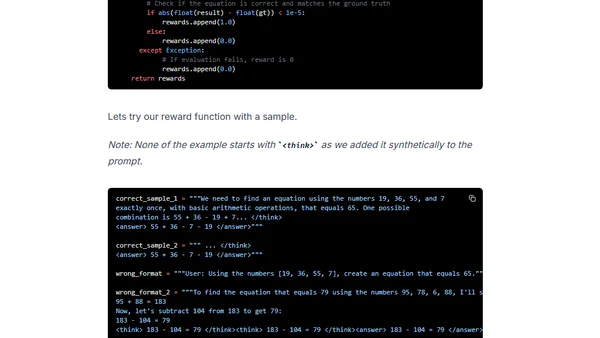

A tutorial on reproducing DeepSeek R1's RL 'aha moment' using Group Relative Policy Optimization (GRPO) to train a model on the Countdown numbers game.

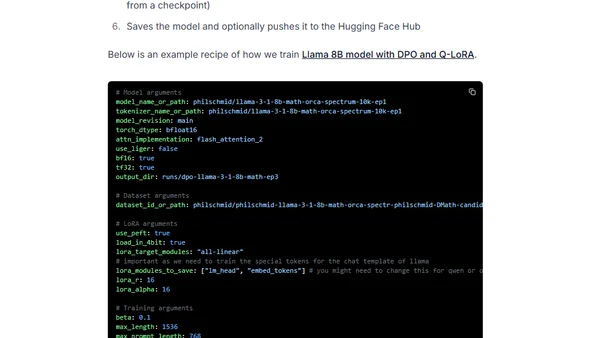

A technical guide on aligning open-source large language models (LLMs) in 2025 using Direct Preference Optimization (DPO) and synthetic data.

A guide on using Anthropic's Model Context Protocol (MCP) to connect AI agents with tools and data sources, using LLMs from various providers such as OpenAI or Google's Gemini.

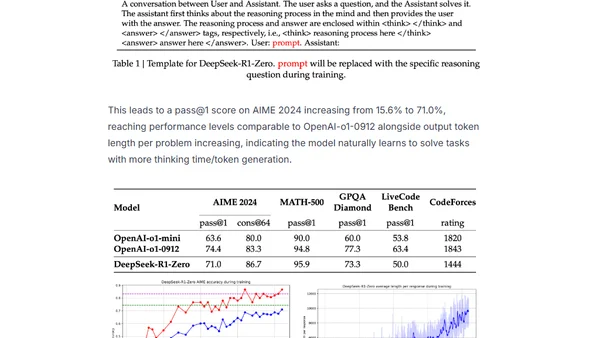

Explains the training of DeepSeek-R1, focusing on the Group Relative Policy Optimization (GRPO) reinforcement learning method.

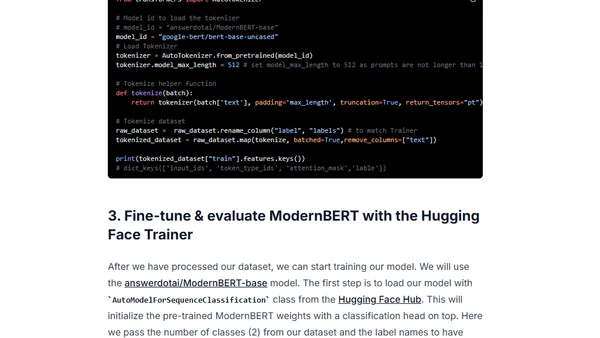

A tutorial on fine-tuning the ModernBERT model for classification tasks to build an efficient LLM router, covering setup, training, and evaluation.

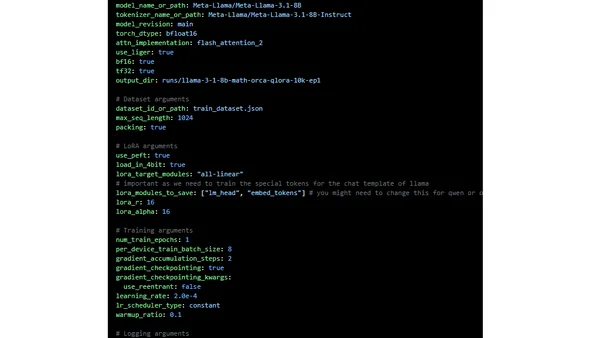

A technical guide on optimizing and scaling the fine-tuning of open-source large language models using Hugging Face tools in 2025.

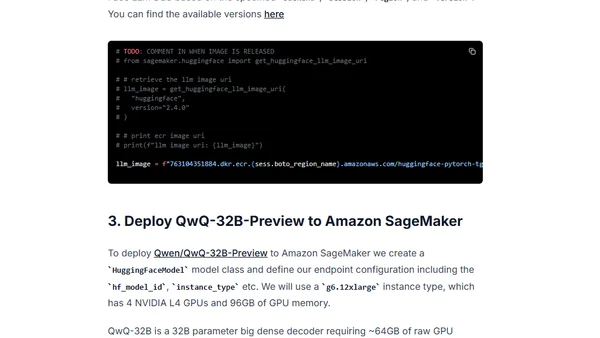

A technical guide on deploying the QwQ-32B-Preview open-source reasoning model on AWS SageMaker using Hugging Face's tools.

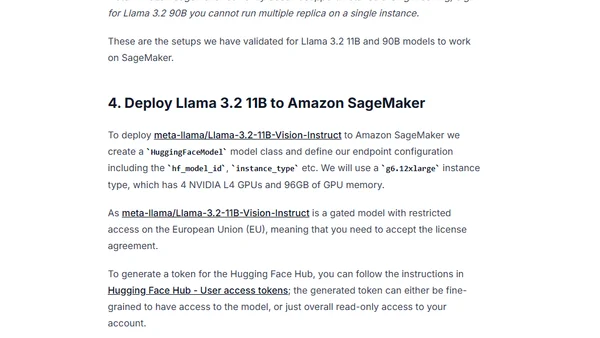

A technical guide on deploying Meta's Llama 3.2 Vision model on Amazon SageMaker using the Hugging Face LLM DLC.