Representation Theory for Robotics

Explores efficient state representations for robots to accelerate Reinforcement Learning training, comparing pixel-based and model-based approaches.

A professor reflects on teaching new Machine Learning and Deep Learning courses at UW-Madison and showcases student projects from those classes.

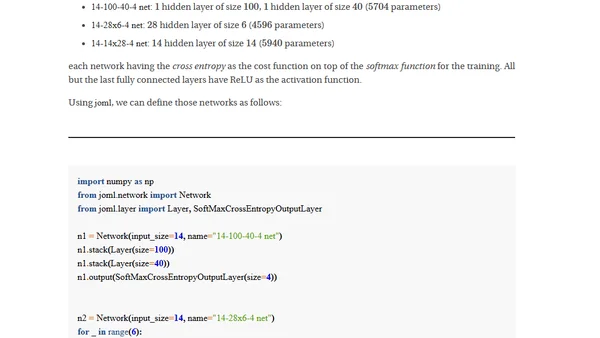

A deep dive into designing and implementing a Multilayer Perceptron from scratch, exploring the core concepts of neural network architecture and training.

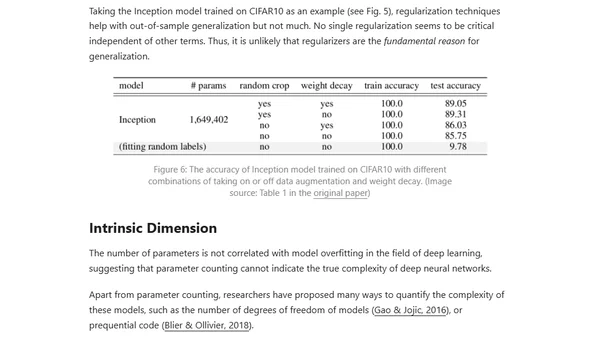

Explores the paradox of why deep neural networks generalize well despite having many parameters, discussing theories like Occam's Razor and the Lottery Ticket Hypothesis.

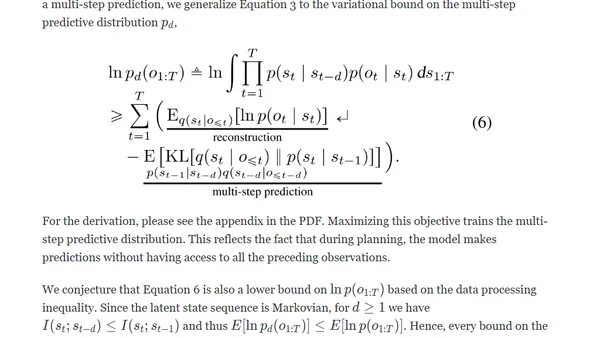

Introduces PlaNet, a model-based AI agent that learns environment dynamics from pixels and plans actions in latent space for efficient control tasks.

Explores handling Out-of-Vocabulary (OOV) values in machine learning, using deep learning for dynamic data in recommender systems as an example.

Explains how Graph Neural Networks and node2vec use graph structure and random walks to generate embeddings for machine learning tasks.

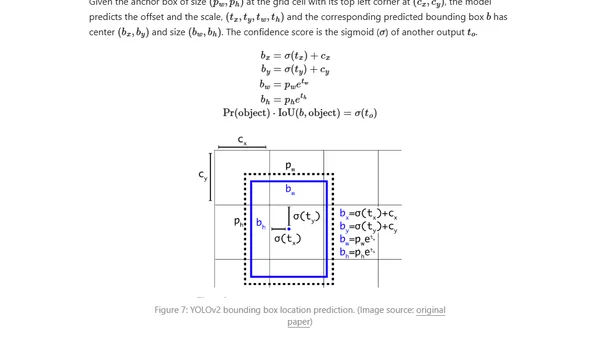

Explores fast, one-stage object detection models like YOLO, SSD, and RetinaNet, comparing them to slower two-stage R-CNN models.

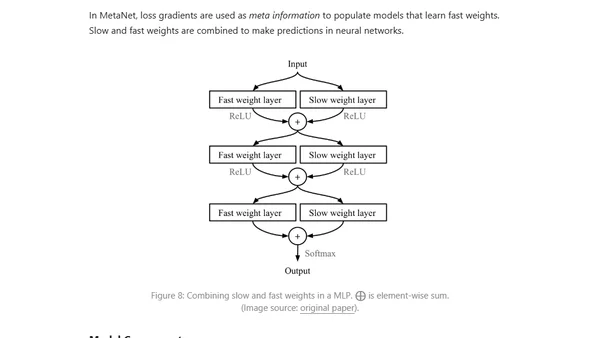

An introduction to meta-learning, often described as "learning to learn": a machine learning approach in which models learn to adapt quickly to new tasks with minimal data.

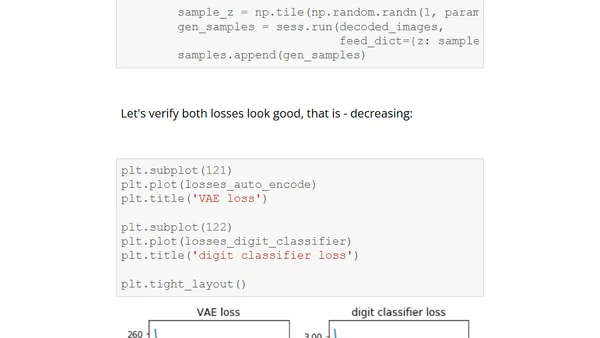

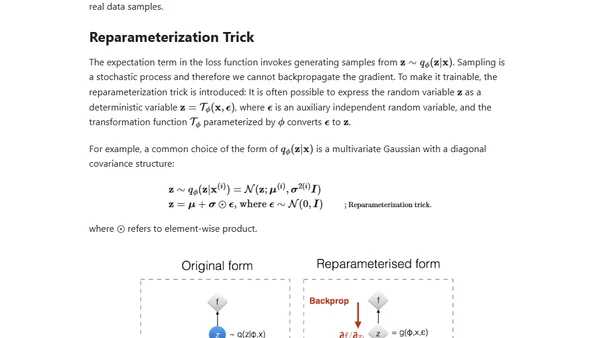

A detailed technical tutorial on implementing a Variational Autoencoder (VAE) with TensorFlow, including code and conditioning on digit types.

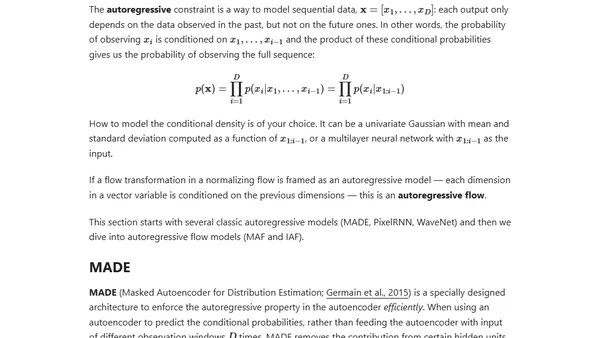

An introduction to flow-based deep generative models, explaining how they explicitly learn data distributions using normalizing flows, compared to GANs and VAEs.

An overview of tools and techniques for creating clear and insightful diagrams to visualize complex neural network architectures.

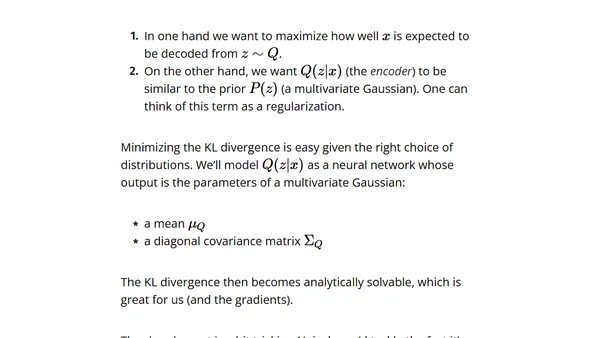

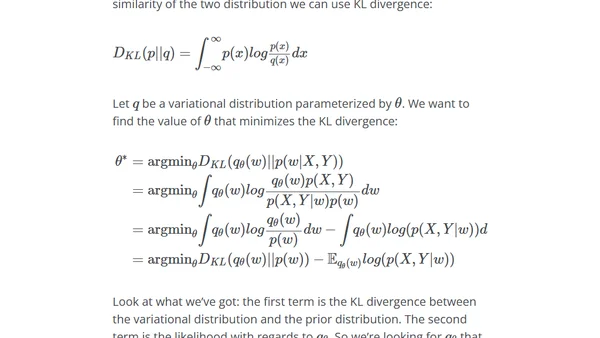

A technical explanation of Variational Autoencoders (VAEs), covering their theory, latent space, and how they generate new data.

Explores the evolution from basic Autoencoders to Beta-VAE, covering their architecture, mathematical notation, and applications in dimensionality reduction.

Explores Bayesian methods for quantifying uncertainty in deep neural networks, moving beyond single-point weight estimates.

A review and tips for Georgia Tech's OMSCS CS7642 Reinforcement Learning course, covering workload, projects, and key learnings.

Explains the attention mechanism in deep learning, its motivation from human perception, and its role in improving seq2seq models and in architectures such as the Transformer.

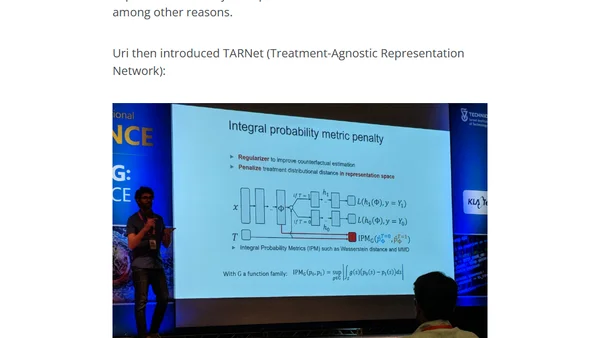

Highlights from a deep learning conference covering optimization algorithms' impact on generalization and human-in-the-loop efficiency.

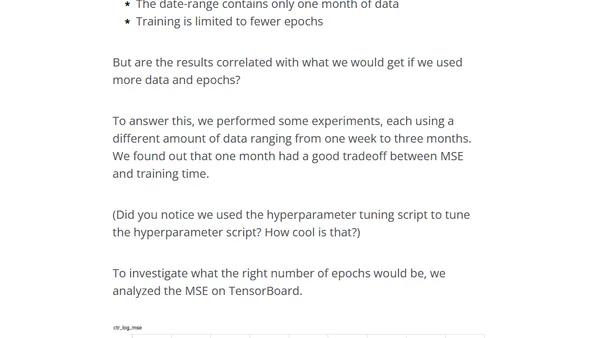

A practical guide to implementing a hyperparameter tuning script for machine learning models, based on real-world experience from Taboola's engineering team.