Mixture of Variational Autoencoders - a Fusion Between MoE and VAE

Explores an unsupervised approach combining Mixture of Experts (MoE) with Variational Autoencoders (VAE) for conditional data generation without labels.

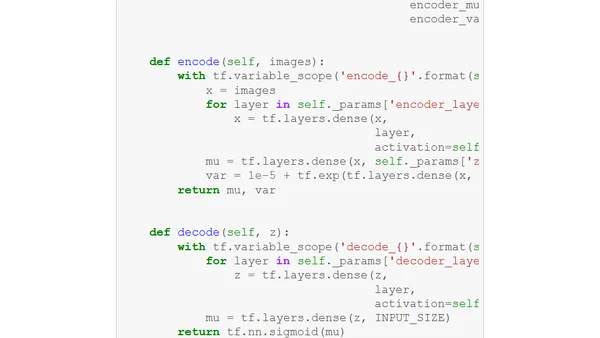

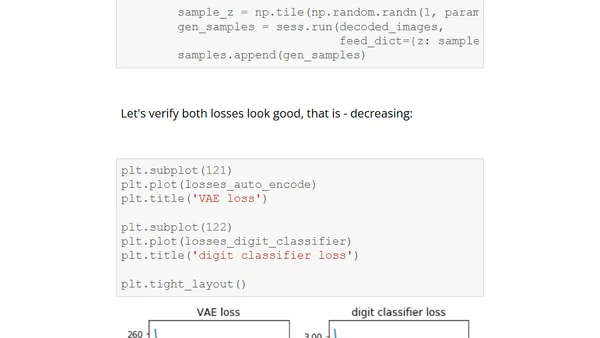

A detailed technical tutorial on implementing a Variational Autoencoder (VAE) with TensorFlow, including code and conditioning on digit types.

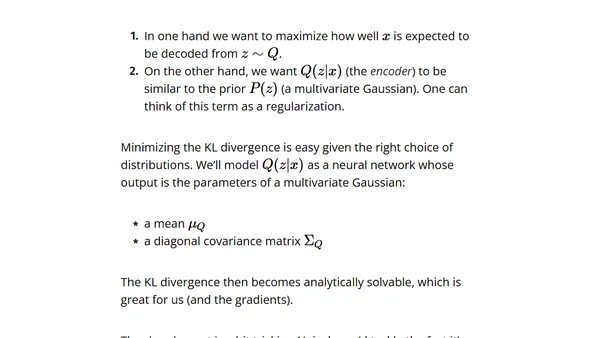

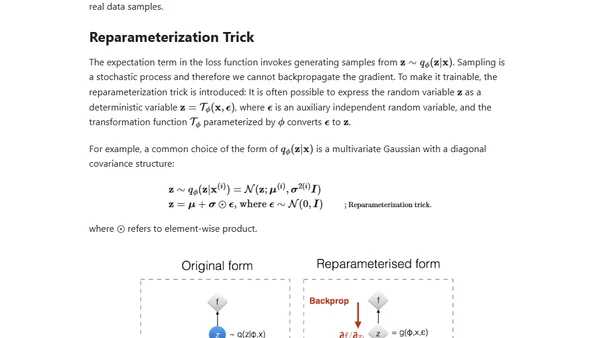

A technical explanation of Variational Autoencoders (VAEs), covering their theory, latent space, and how they generate new data.

Explores the evolution from basic Autoencoders to Beta-VAE, covering their architecture, mathematical notation, and applications in dimensionality reduction.

Explains the Gumbel-Softmax trick, a method for training neural networks that need to sample from discrete distributions, enabling gradient flow.
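
As a rough illustration of the trick summarized above, here is a minimal sketch in plain Python (standard library only, no autodiff). It perturbs the log-probabilities with Gumbel(0, 1) noise and applies a temperature-scaled softmax, which yields a "soft" one-hot vector that is a smooth function of the logits. The function names, the `eps` constant, and the example probabilities are assumptions made for this illustration; they are not taken from the linked article.

```python
import math
import random


def sample_gumbel(rng, eps=1e-20):
    """Draw one Gumbel(0, 1) sample via inverse transform sampling."""
    u = rng.random()  # uniform in [0, 1)
    # eps guards against log(0); its value is an illustrative assumption.
    return -math.log(-math.log(u + eps) + eps)


def gumbel_softmax_sample(logits, temperature, rng):
    """Return a relaxed one-hot sample from the categorical defined by logits."""
    # Add independent Gumbel noise to each logit, then scale by temperature.
    perturbed = [(logit + sample_gumbel(rng)) / temperature for logit in logits]
    # Softmax, subtracting the max for numerical stability.
    m = max(perturbed)
    exps = [math.exp(p - m) for p in perturbed]
    total = sum(exps)
    return [e / total for e in exps]


rng = random.Random(0)
# Hypothetical class probabilities 0.1, 0.6, 0.3, expressed as logits.
logits = [math.log(0.1), math.log(0.6), math.log(0.3)]

soft = gumbel_softmax_sample(logits, temperature=1.0, rng=rng)
sharp = gumbel_softmax_sample(logits, temperature=0.05, rng=rng)
print("temperature 1.0 :", soft)
print("temperature 0.05:", sharp)
```

Lower temperatures push the output toward a hard one-hot vector, while higher temperatures make it smoother. In a framework with automatic differentiation, the same computation would let gradients flow from the sample back to the logits, since the randomness enters only through the added noise.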