Do AIs reason or recite?

Explores whether large language models like ChatGPT truly reason or merely recite memorized text from their training data, examining their logical capabilities.

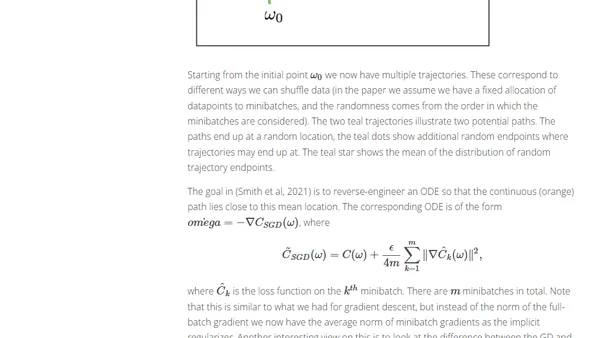

Explores how Stochastic Gradient Descent (SGD) inherently prefers certain minima, leading to better generalization in deep learning, beyond classical theory.

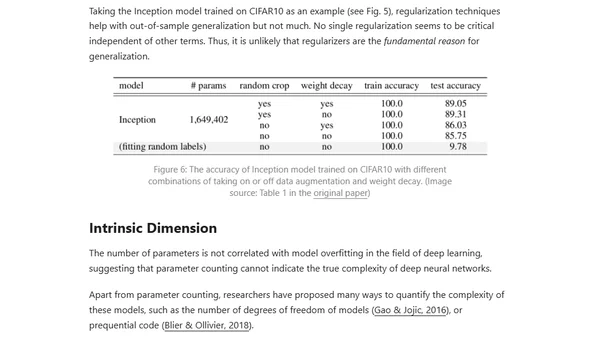

Explores the paradox of why deep neural networks generalize well despite having many parameters, discussing theories like Occam's Razor and the Lottery Ticket Hypothesis.

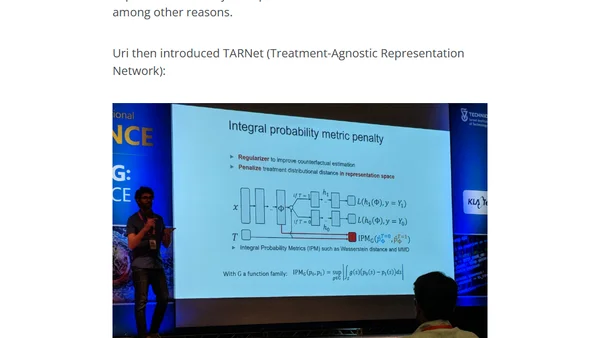

Highlights from a deep learning conference covering optimization algorithms' impact on generalization and human-in-the-loop efficiency.