American Wind Farms

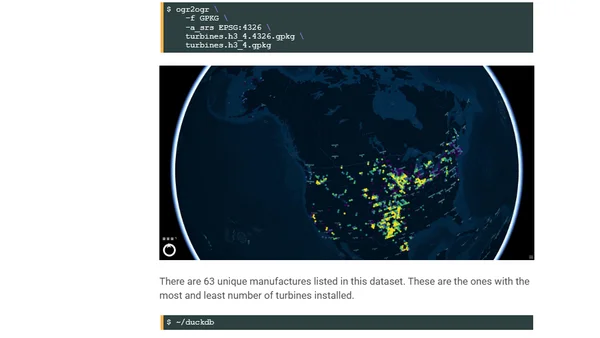

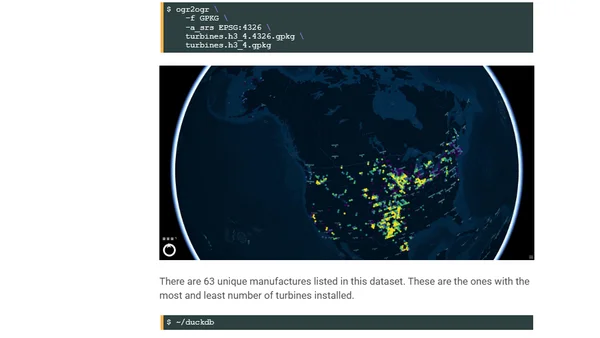

A technical walkthrough of converting the US Wind Turbine Database to Parquet format and analyzing it using tools like GDAL, DuckDB, and QGIS.

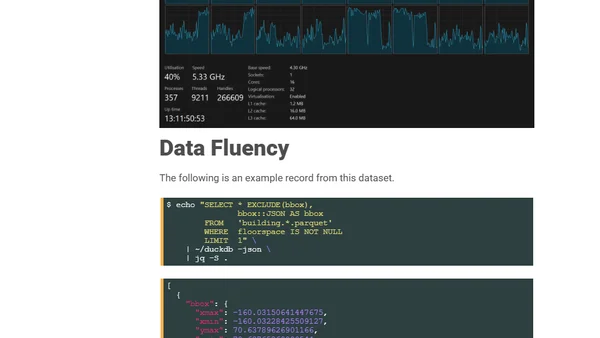

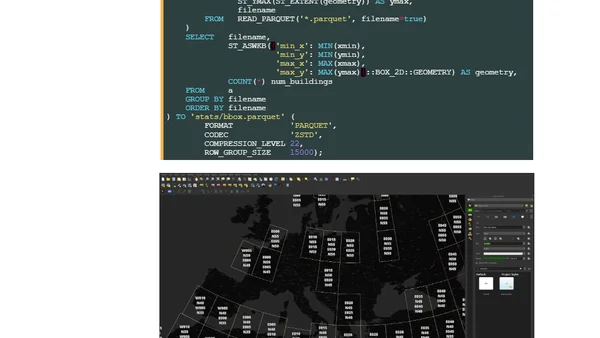

A technical walkthrough of converting the massive OpenBuildingMap dataset (2.7B buildings) into a columnar Parquet format for efficient cloud analysis.

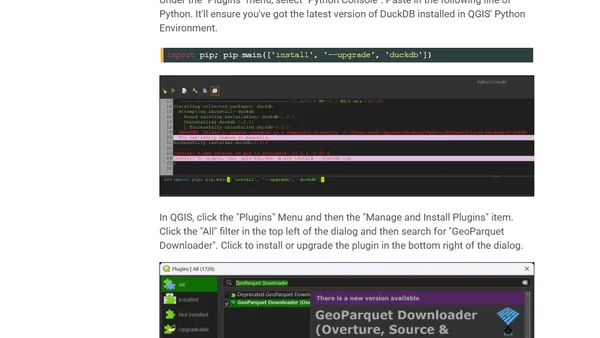

Exploring the Layercake project's analysis-ready OpenStreetMap data in Parquet format, including setup and performance on a high-end workstation.

Analysis of a new global building dataset (2.75B structures), detailing the data processing, technical setup, and tools used for exploration.

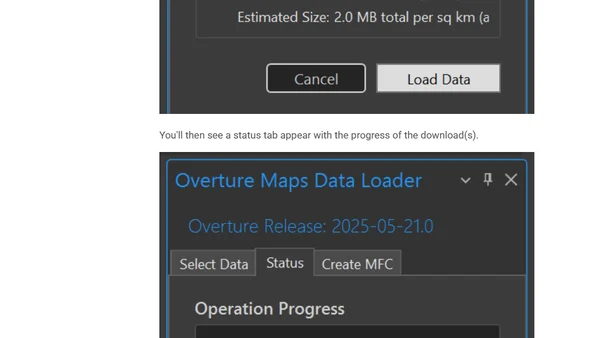

A guide on using the new ArcGIS Pro add-in to download and work with Overture Maps Foundation's global geospatial datasets via Parquet files and DuckDB.

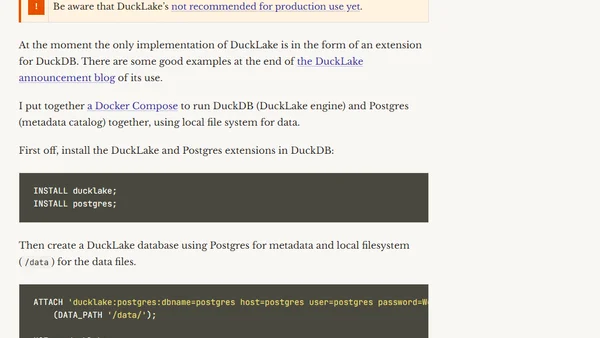

An analysis of DuckLake, a new open table format and catalog specification for data engineering, comparing it to existing solutions like Iceberg and Delta Lake.

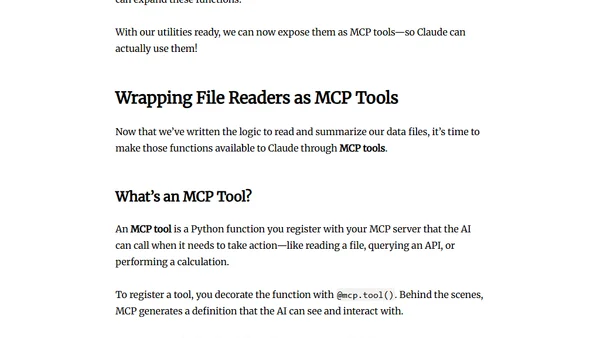

A tutorial on building a beginner-friendly Model Context Protocol (MCP) server in Python to connect Claude AI with local CSV and Parquet files.

Microsoft updates SqlPackage with preview support for Parquet files in Azure Blob Storage, enhancing data management and provisioning capabilities.

Explains encoding techniques in Parquet files, including dictionary, RLE, bit-packing, and delta encoding, to optimize storage and performance.

Explores compression algorithms in Parquet files, comparing Snappy, Gzip, Brotli, Zstandard, and LZO for storage and performance.

Explains how Parquet handles schema evolution, including adding/removing columns and changing data types, for data engineers.

Explains the hierarchical structure of Parquet files, detailing how pages, row groups, and columns optimize storage and query performance.

Explains Parquet's columnar storage model, detailing its efficiency for big data analytics through faster queries, better compression, and optimized aggregation.

An introduction to Apache Parquet, a columnar storage file format for efficient data processing and analytics.

Explores why Parquet is the ideal columnar file format for optimizing storage and query performance in modern data lake and lakehouse architectures.

Explores how metadata in Parquet files improves data efficiency and query performance, covering file, row group, and column-level metadata.

A practical guide to reading and writing Parquet files in Python using the PyArrow and fastparquet libraries.

The final guide in a series, covering performance tuning and best practices for Apache Parquet files in big data workflows.

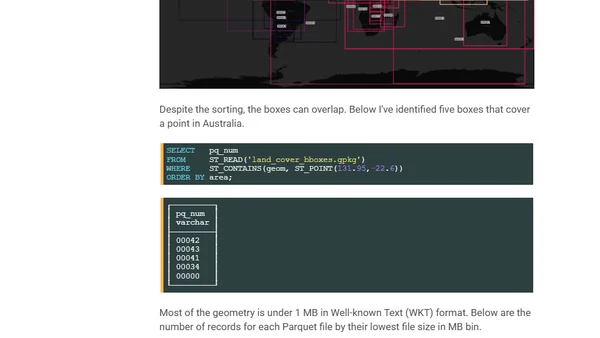

A technical guide on processing Overture Maps' global land cover dataset, focusing on extracting and analyzing Australia's data using DuckDB and QGIS.

A tutorial on using PyArrow for data analytics in Python, covering core concepts, file I/O, and analytical operations.