Connect AWS Glue Data Catalog to Dremio Cloud: Query and Manage Your AWS Iceberg Tables

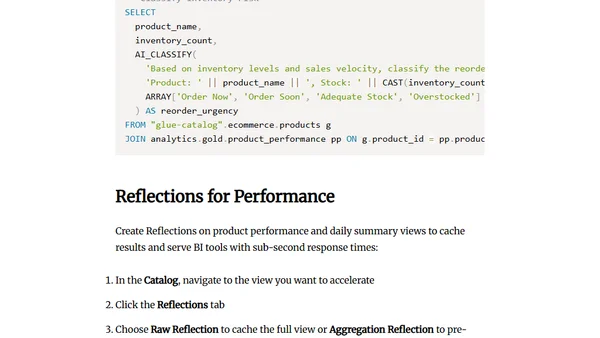

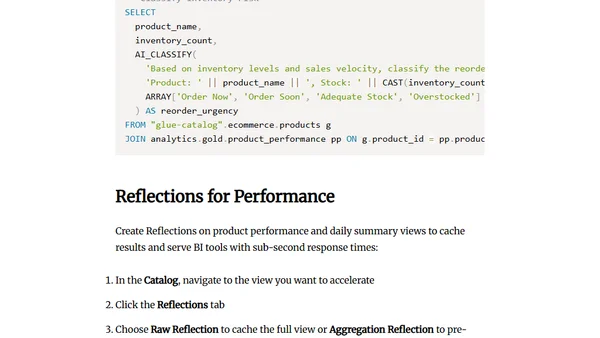

A guide to connecting AWS Glue Data Catalog to Dremio Cloud for querying and managing AWS Iceberg tables with full DML support and federation.

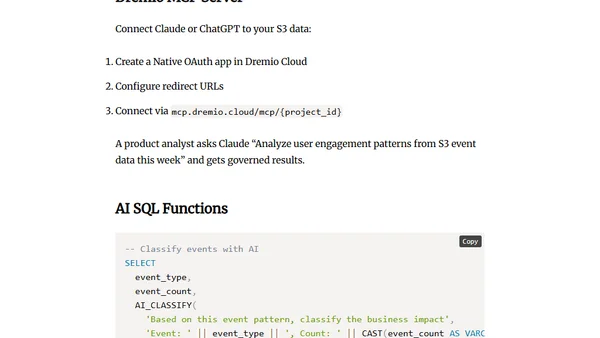

A guide to connecting Amazon S3 to Dremio Cloud to query data lakes with SQL, federation, and AI-powered analytics.

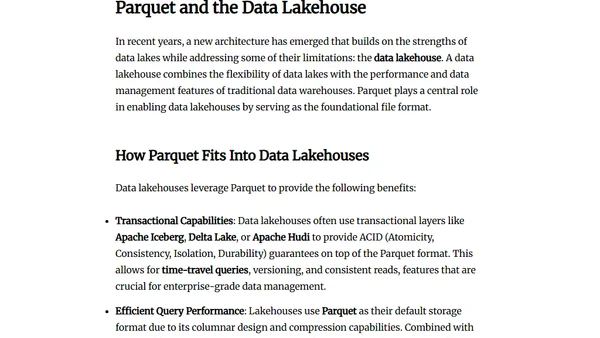

Explores why Parquet is the ideal columnar file format for optimizing storage and query performance in modern data lake and lakehouse architectures.

Final guide in a series covering performance tuning and best practices for optimizing Apache Parquet files in big data workflows.

Explains how Apache Iceberg brings ACID transaction guarantees to data lakes, enabling reliable data operations on open storage.

Explains the data lakehouse architecture, its layers (storage, table format, catalog, processing), and its advantages over traditional data warehouses.

Explores Apache Iceberg's advanced partitioning features, including hidden partitioning and transformations, for optimizing query performance in data lakes.

Explains three key Apache Iceberg features for data engineers: hidden partitioning, partition evolution, and tool compatibility.

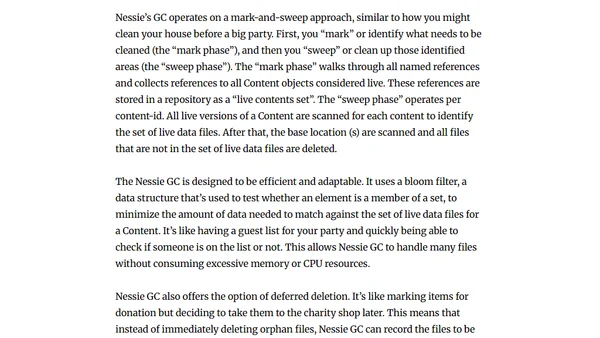

Project Nessie is a version control system for data lakes, bringing Git-like operations to manage and track changes in data assets.

Explains the data lakehouse concept, Dremio's role as a platform, and Apache Iceberg's function as a table format for modern data architectures.

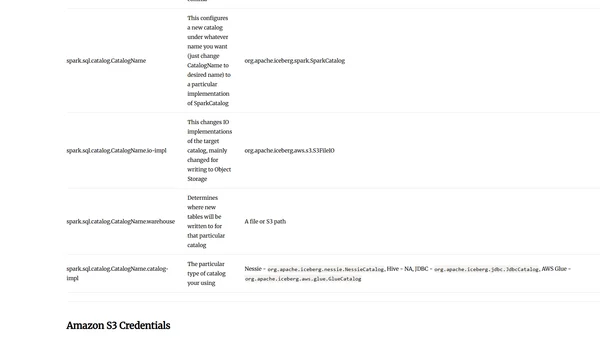

A guide to configuring Apache Spark for use with the Apache Iceberg table format, covering packages, flags, and programmatic setup.

A curated list of essential resources for data engineering, including articles, newsletters, podcasts, and tools.

A guide explaining key data engineering terms like data warehouses, data lakes, data mesh, and data pipelines, with definitions and comparisons.

A guide to building a prototype IoT analytics architecture on Azure, covering IoT Hub, Stream Analytics, and Power BI integration.