6/17/2025

Understanding and Coding the KV Cache in LLMs from Scratch

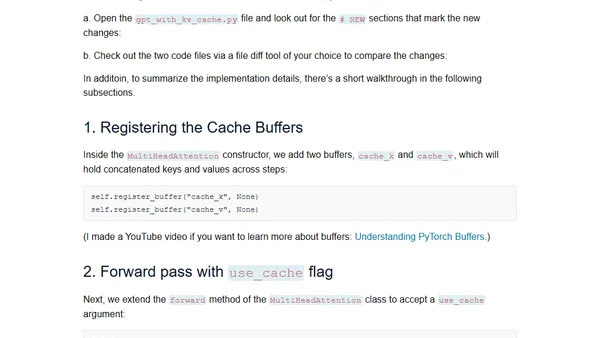

A technical tutorial explaining the concept and implementation of KV caches for efficient inference in Large Language Models (LLMs).
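To make the core idea concrete before the full walkthrough, here is a minimal pure-Python sketch of a KV cache for a single attention head. It is not the tutorial's own implementation. The names `KVCache`, `decode_with_cache`, and `decode_without_cache` are hypothetical, and the sketch assumes one head, no batching, and plain lists instead of tensors. During token-by-token decoding, each new token's key and value vectors are projected once and appended to the cache. Later steps reuse the cached entries instead of recomputing them for the whole prefix.

```python
import math
import random

def matvec(W, x):
    # Multiply a (d_out x d_in) matrix, stored as a list of rows, by a vector of length d_in.
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def attend(q, keys, values):
    # Scaled dot-product attention for one query over the given keys/values.
    d = len(q)
    scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
    m = max(scores)  # subtract the max for a numerically stable softmax
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    weights = [w / total for w in weights]
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]

class KVCache:
    """Hypothetical minimal cache: holds one key and one value vector per past token."""
    def __init__(self):
        self.keys = []
        self.values = []

    def append(self, k, v):
        self.keys.append(k)
        self.values.append(v)

def decode_with_cache(xs, Wq, Wk, Wv):
    # Each step projects only the newest token; earlier keys/values come from the cache.
    # Causal masking is implicit: at step t the cache holds tokens 0..t only.
    cache = KVCache()
    outputs = []
    for x in xs:
        cache.append(matvec(Wk, x), matvec(Wv, x))
        q = matvec(Wq, x)
        outputs.append(attend(q, cache.keys, cache.values))
    return outputs

def decode_without_cache(xs, Wq, Wk, Wv):
    # Baseline: recompute keys and values for the entire prefix at every step.
    outputs = []
    for t in range(len(xs)):
        prefix = xs[: t + 1]
        keys = [matvec(Wk, x) for x in prefix]
        values = [matvec(Wv, x) for x in prefix]
        q = matvec(Wq, xs[t])
        outputs.append(attend(q, keys, values))
    return outputs
```

Both functions produce the same attention outputs. The cached version avoids re-projecting the prefix at every step, at the cost of memory that grows linearly with sequence length.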