Running DeepSeek open reasoning models on GKE

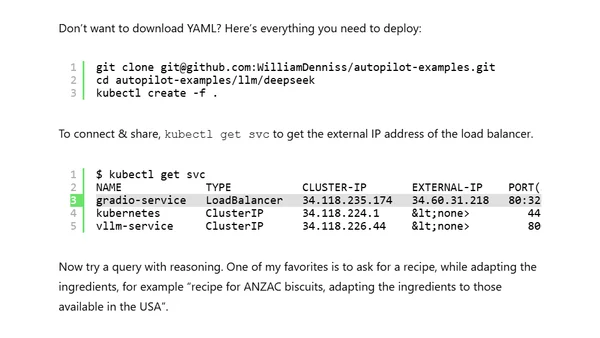

This article provides a step-by-step tutorial for running DeepSeek's R1 open reasoning models, such as the 8B Llama distilled model, on Google Kubernetes Engine (GKE). It covers creating a GKE Autopilot cluster, setting up secrets for Hugging Face, deploying vLLM for model serving, and creating a custom Gradio application that streams responses and handles the model's unique thinking blocks. The guide includes specific YAML configurations and resource recommendations for GPUs such as the NVIDIA L4 or A100.
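To make the "thinking blocks" point concrete, here is a minimal sketch of how a client might separate the reasoning from the final answer. It is not the tutorial's Gradio code. It assumes the model wraps its reasoning in `<think>...</think>` tags, as the R1 models do by default, and the helper name `split_thinking` is hypothetical.

```python
import re

# Assumption: reasoning is delimited by <think>...</think> in the raw output.
THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_thinking(text: str) -> tuple[str, str]:
    """Return (thinking, answer) for a complete or partially streamed response."""
    match = THINK_RE.search(text)
    if match:
        # Closed block: everything outside the tags is the answer.
        thinking = match.group(1).strip()
        answer = (text[:match.start()] + text[match.end():]).strip()
        return thinking, answer

    # Unclosed block: the stream is still inside the reasoning phase.
    start = text.find("<think>")
    if start != -1:
        return text[start + len("<think>"):].strip(), text[:start].strip()

    # No thinking block at all.
    return "", text.strip()
```

When streaming, a UI could call this helper on the accumulated text after each chunk and render the two parts separately, for example showing the reasoning in a collapsed panel.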
