Neural Networks gone wild! They can sample from discrete distributions now!

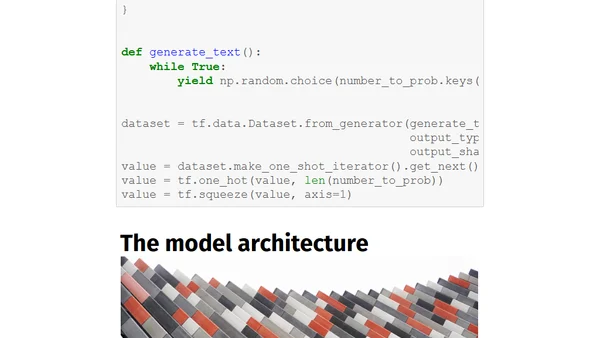

This technical article addresses the challenge of training neural networks with stochastic nodes that sample from discrete distributions, where gradients cannot normally propagate through the sampling step. It introduces the Gumbel-Max trick and its differentiable relaxation, the Gumbel-Softmax (or Concrete) distribution, which makes gradient-based optimization possible. The piece includes a breakdown of the Gumbel distribution and a practical, coded toy example.
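To make the two techniques named above concrete, here is a minimal NumPy sketch. It is not the original article's toy example. The function names (`sample_gumbel`, `gumbel_max_sample`, `gumbel_softmax_sample`), the example probabilities, and the temperature value are all assumptions chosen for illustration. The Gumbel-Max trick adds Gumbel(0, 1) noise to the logits and takes the argmax, which yields an exact categorical sample. The Gumbel-Softmax relaxation replaces the argmax with a temperature-scaled softmax so the output is a smooth function of the logits.

```python
import numpy as np

def sample_gumbel(shape, rng, eps=1e-20):
    """Draw Gumbel(0, 1) noise via the inverse CDF: g = -log(-log(u))."""
    u = rng.uniform(0.0, 1.0, size=shape)
    # eps guards against log(0); its value is an illustrative choice.
    return -np.log(-np.log(u + eps) + eps)

def gumbel_max_sample(logits, rng):
    """Gumbel-Max trick: argmax(logits + noise) is a categorical sample."""
    g = sample_gumbel(logits.shape, rng)
    return int(np.argmax(logits + g))

def gumbel_softmax_sample(logits, temperature, rng):
    """Gumbel-Softmax: softmax((logits + noise) / temperature)."""
    g = sample_gumbel(logits.shape, rng)
    y = (logits + g) / temperature
    y = y - y.max()  # subtract the max for numerical stability
    e = np.exp(y)
    return e / e.sum()

rng = np.random.default_rng(0)
probs = np.array([0.1, 0.6, 0.3])  # hypothetical target distribution
logits = np.log(probs)

# Empirical frequencies of Gumbel-Max draws should approach probs.
draws = [gumbel_max_sample(logits, rng) for _ in range(20000)]
freqs = np.bincount(draws, minlength=3) / len(draws)

# A relaxed sample: a point on the probability simplex, not a one-hot vector.
relaxed = gumbel_softmax_sample(logits, temperature=0.5, rng=rng)
```

As the temperature approaches zero the relaxed sample approaches a one-hot vector, while larger temperatures push it toward uniform. In an autodiff framework the same softmax expression lets gradients flow back to the logits, which plain NumPy does not demonstrate here.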
