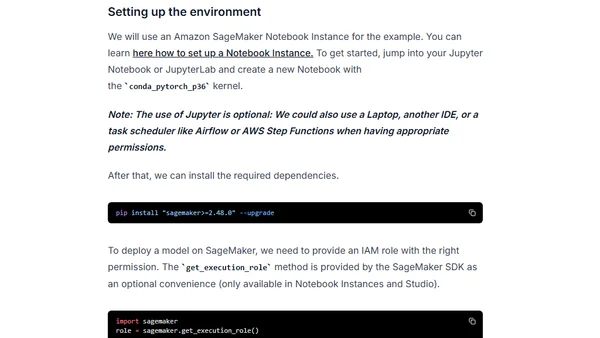

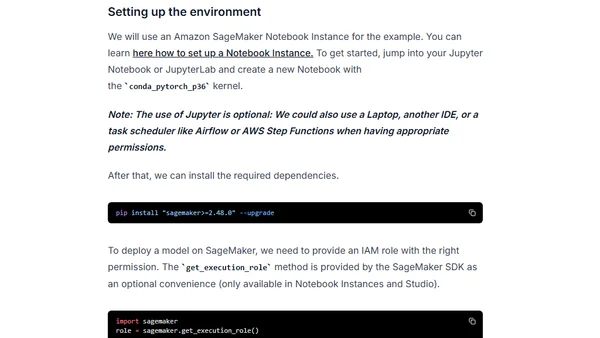

Deploy BigScience T0_3B to AWS and Amazon SageMaker

A tutorial on deploying the BigScience T0_3B language model to AWS and Amazon SageMaker for production use.

Philipp Schmid is a Staff Engineer at Google DeepMind, building AI Developer Experience and DevRel initiatives. He specializes in LLMs, RLHF, and making advanced AI accessible to developers worldwide.

183 articles from this blog

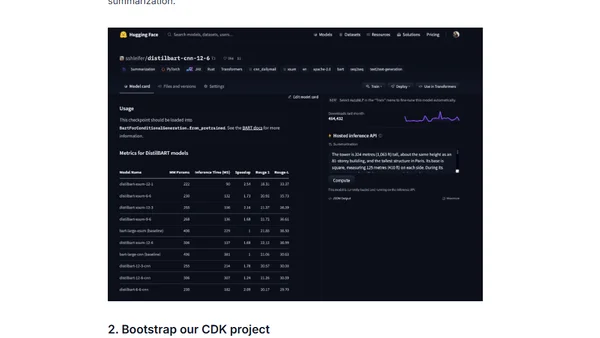

A tutorial on deploying Hugging Face Transformer models to production using Amazon SageMaker, AWS Lambda, and the AWS CDK for scalable, secure inference endpoints.

A guide to implementing few-shot learning using the GPT-Neo language model and Hugging Face's inference API for NLP tasks.

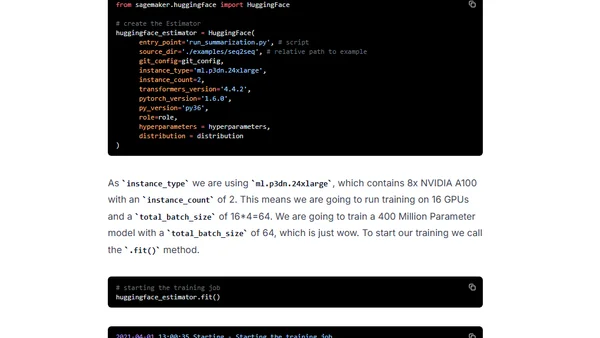

A tutorial on using Hugging Face Transformers and Amazon SageMaker for distributed training of BART/T5 models on a text summarization task.

A tutorial on building a multilingual question-answering API using XLM-RoBERTa, Hugging Face, and AWS Lambda with container support.

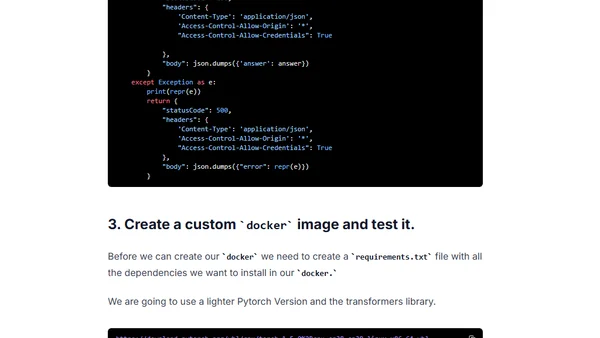

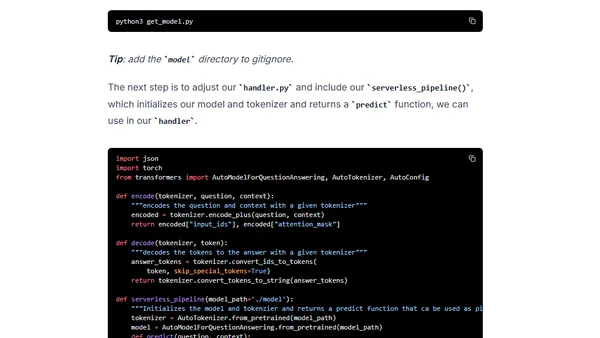

A tutorial on deploying a BERT question-answering API using Hugging Face Transformers, AWS Lambda with Docker container support, and the Serverless Framework.

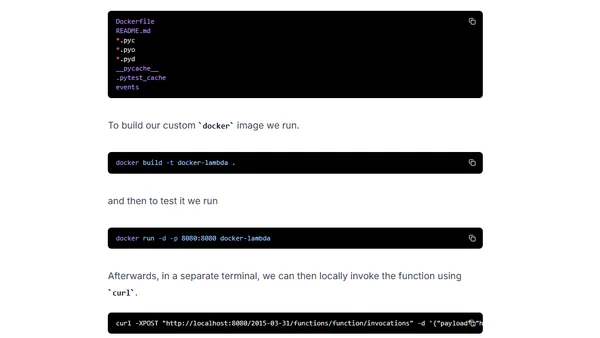

A guide to using custom Docker images as runtimes for AWS Lambda, including setup with AWS SAM and ECR.

A tutorial on building a serverless question-answering API using BERT, Hugging Face, AWS Lambda, and EFS to overcome dependency and model load limitations.

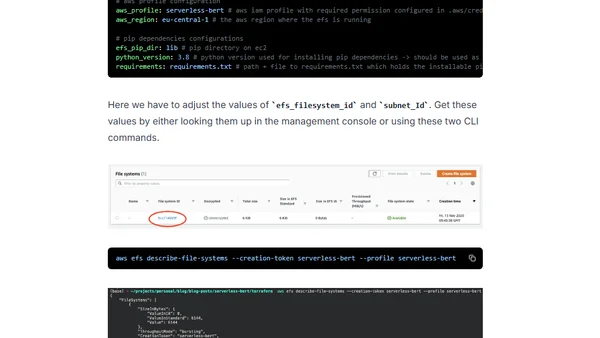

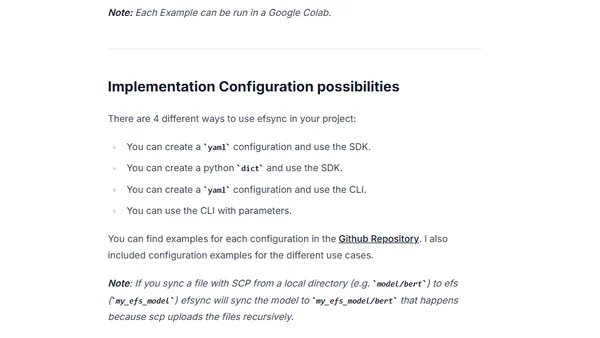

Introducing efsync, an open-source MLOps toolkit for syncing dependencies and model files to AWS EFS for serverless machine learning.

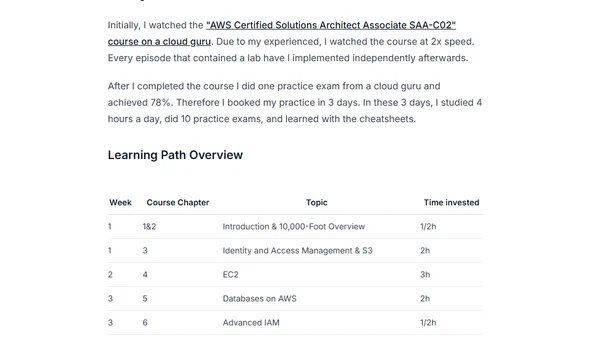

A machine learning engineer shares his 7-week journey and study plan to pass the AWS Certified Solutions Architect - Associate exam.

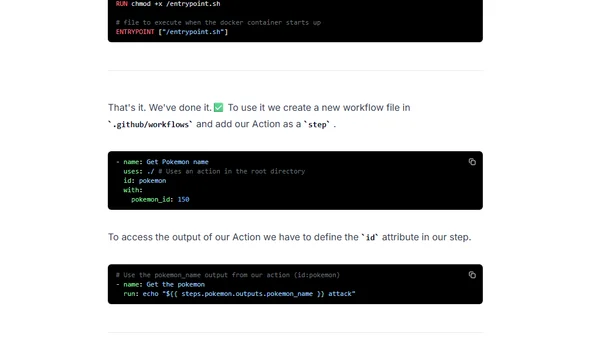

A tutorial on creating a custom GitHub Action in four steps, including defining inputs/outputs and writing a bash script.

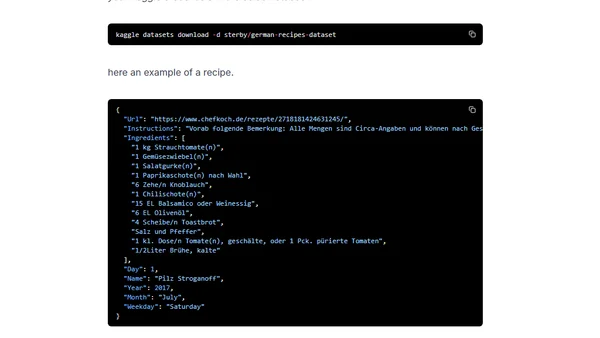

A tutorial on fine-tuning a German GPT-2 language model for text generation using Hugging Face's Transformers library and a dataset of recipes.

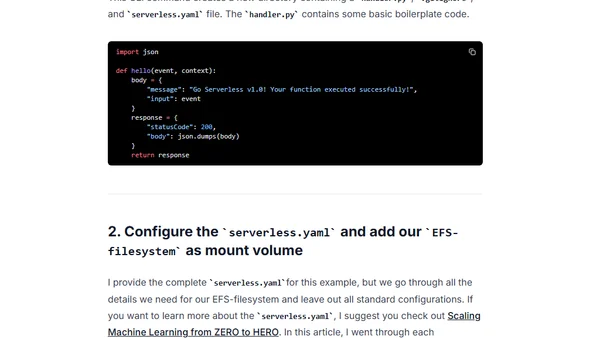

A tutorial on integrating AWS EFS storage with AWS Lambda functions using the Serverless Framework, focusing on overcoming storage limits for serverless applications.

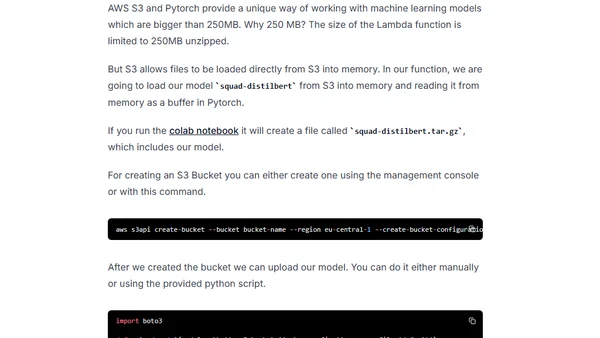

A tutorial on deploying a BERT question-answering model in a serverless environment using Hugging Face Transformers and AWS Lambda.

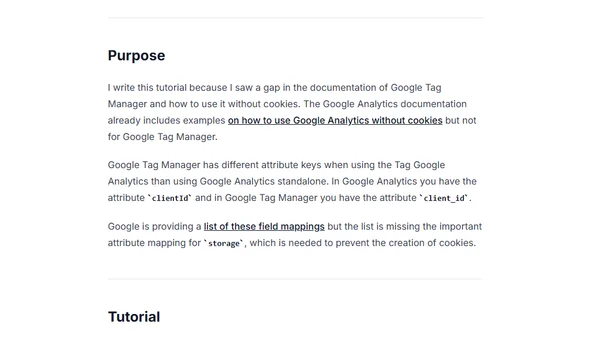

A tutorial on configuring Google Tag Manager and Google Analytics to operate without using cookies, addressing GDPR compliance concerns.

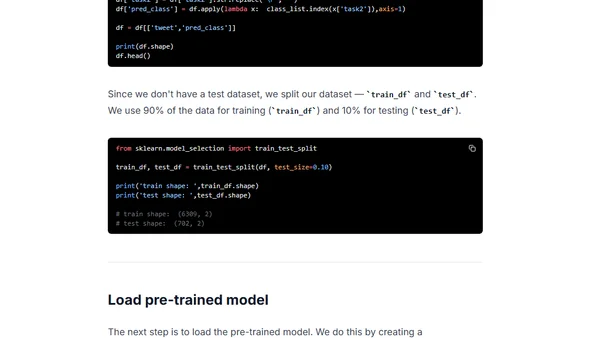

A tutorial on building a non-English text classification model using BERT and Simple Transformers, demonstrated with German tweets.

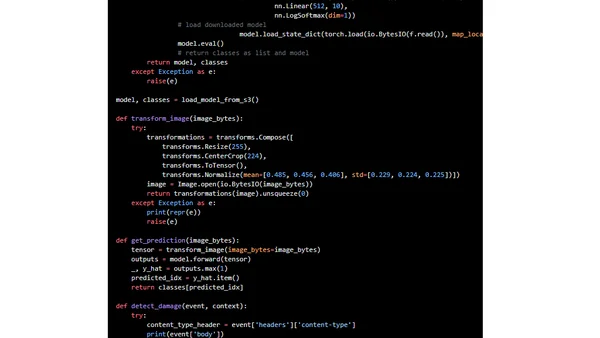

A step-by-step tutorial on deploying a custom PyTorch machine learning model to production using AWS Lambda and the Serverless Framework.

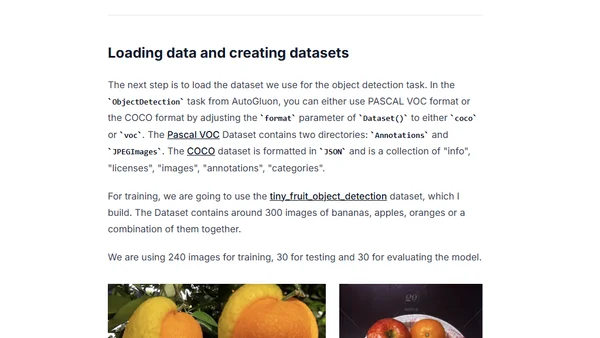

A tutorial on using AWS AutoGluon, an AutoML library, to build an object detection model with minimal code.

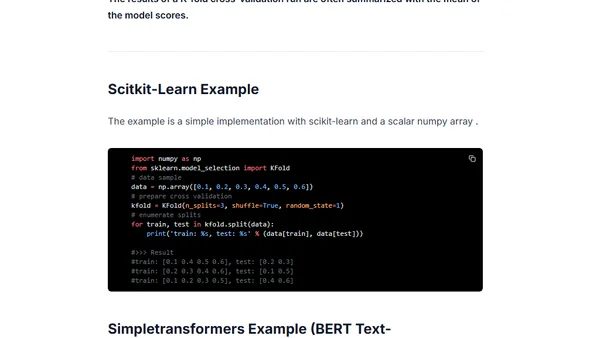

An explanation of K-Fold cross-validation for ML models, with a practical example using BERT for text classification.

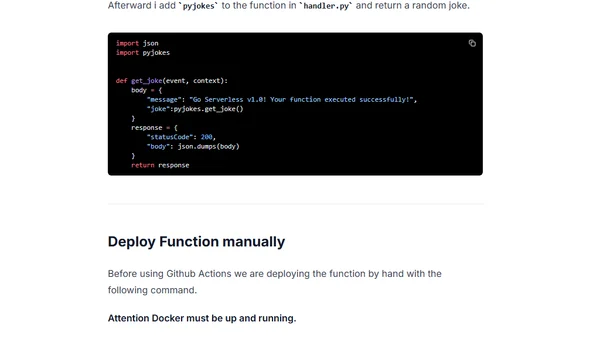

A step-by-step tutorial on setting up a CI/CD pipeline for AWS Lambda using GitHub Actions and the Serverless Framework.