AI Engineer 2025 - Improving RecSys & Search with LLM techniques

A presentation on using Large Language Model (LLM) techniques to enhance Recommendation Systems (RecSys) and Search, from the AI Engineer World's Fair 2025.

A summary of a panel discussion at NVIDIA GTC 2025 on lessons learned from building real-world LLM-powered applications.

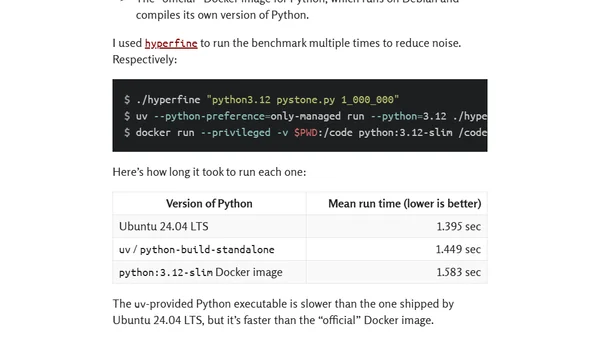

Analyzes the viability of using uv's managed Python in production, covering portability, performance, and security implications.

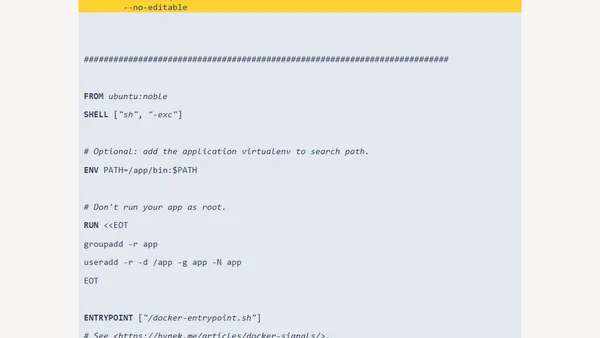

A guide to building fast, production-ready Docker containers for Python applications using the uv tool, focusing on multi-stage builds and caching strategies.

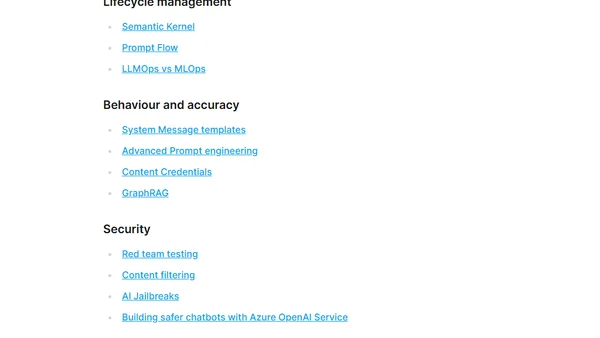

A guide to taking generative AI applications from proof of concept to production, covering architecture, security, and operations.

Reflections on delivering the closing keynote at the AI Engineer World's Fair 2024, sharing lessons from a year of building with LLMs.

A summary of a keynote talk on essential building blocks for production LLM systems, covering evaluations, RAG, and guardrails.

A developer describes how they diagnosed and fixed a persistent memory leak in their production Node.js application following a database migration.

![Ultimate Guide: NestJS Dockerfile For Production [2022]](https://alldevblogs.blob.core.windows.net/thumbs/article-5c56d5379647-full-92350107.webp)

A step-by-step tutorial on creating a production-optimized Dockerfile for NestJS applications, covering local testing and deployment.

A talk on system design for recommendation and search systems, covering architecture and production considerations.

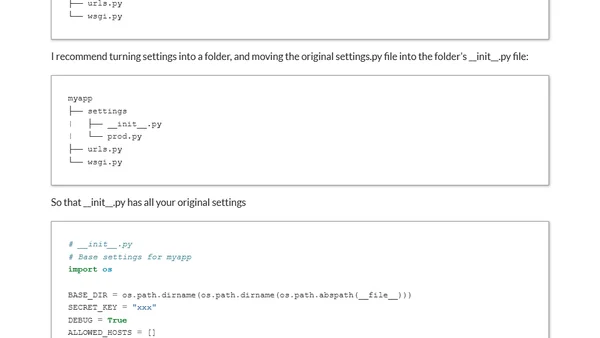

A guide to setting up a new Django project with a focus on organization, environment separation, and production readiness.

A guide to deploying a Django site live, including choosing a production-ready Python application server such as Gunicorn.

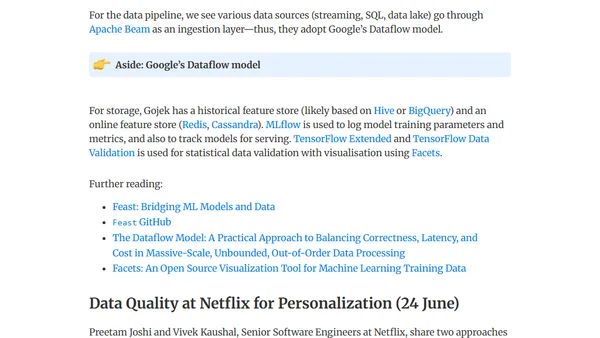

Notes from Spark+AI Summit 2020 covering application-specific talks on ML frameworks, data engineering, feature stores, and data quality from companies like Airbnb and Netflix.

A guide to common Django server setups, from simple local development to professional production deployments with Gunicorn and PostgreSQL.

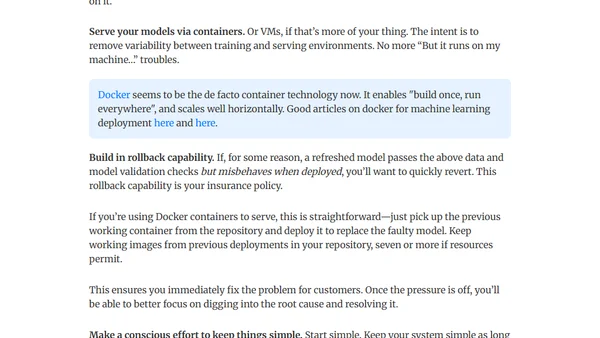

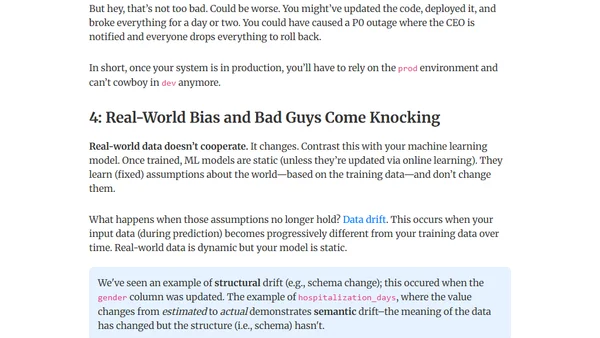

A guide to best practices for monitoring, maintaining, and managing machine learning models and data pipelines in a production environment.

Explores six unexpected challenges that arise after deploying machine learning models in production, from data schema changes to organizational issues.

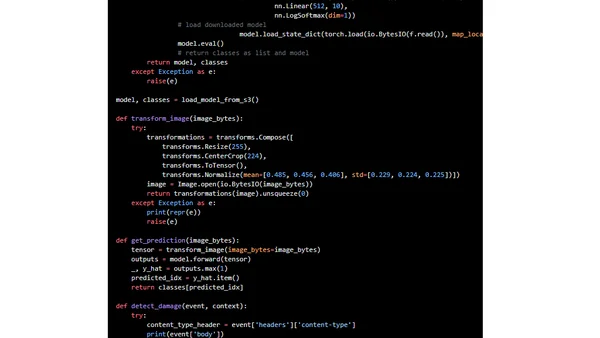

A step-by-step tutorial on deploying a custom PyTorch machine learning model to production using AWS Lambda and the Serverless Framework.

A guide on configuring Django to write application logs to a file in a production environment for easier debugging.

A guide on using React's Profiler API to monitor and track component render performance in production applications.

A call for more public discussion about running Python in production, sharing real-world experiences and lessons learned from web services.