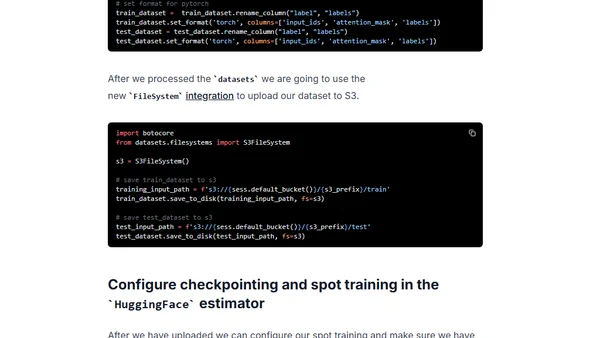

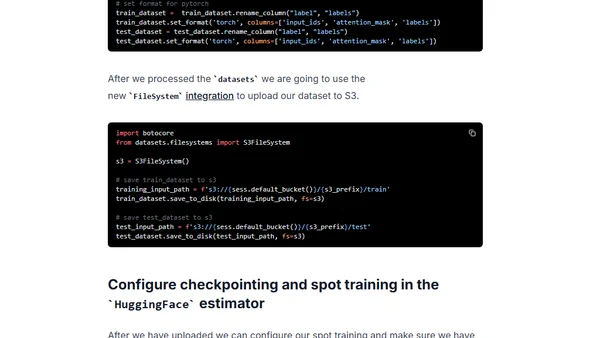

Save up to 90% training cost with AWS Spot Instances and Hugging Face Transformers

A technical guide on using AWS Spot Instances with Hugging Face Transformers on Amazon SageMaker to reduce machine learning training costs by up to 90%.

Philipp Schmid is a Staff Engineer at Google DeepMind, building AI Developer Experience and DevRel initiatives. He specializes in LLMs, RLHF, and making advanced AI accessible to developers worldwide.

183 articles from this blog

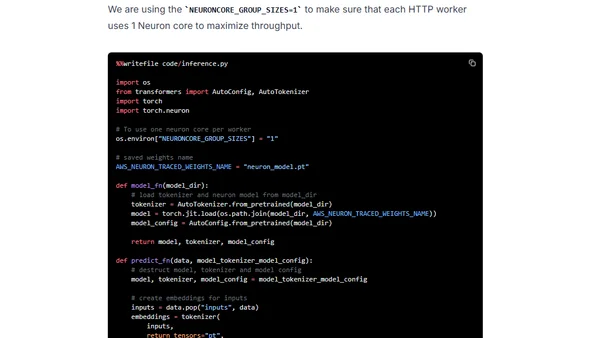

A tutorial on accelerating BERT model inference using Hugging Face Transformers and AWS Inferentia chips for cost-effective, high-performance deployment.

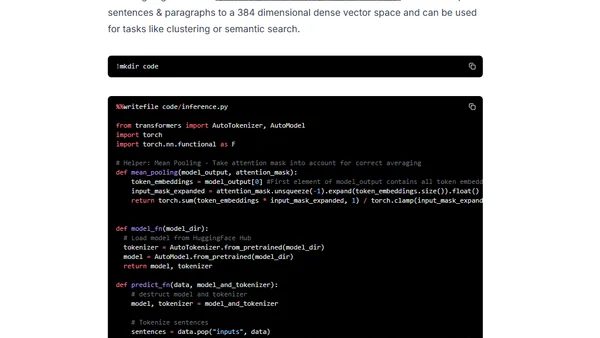

Guide to deploying a Sentence Transformers model on Amazon SageMaker for generating document embeddings using Hugging Face's Inference Toolkit.

A guide to deploying autoscaling Hugging Face Transformers (like BERT) on Amazon SageMaker using a Terraform module for real-time and asynchronous inference.

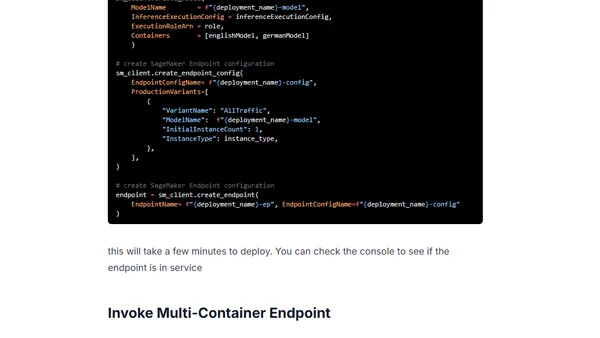

Guide to deploying multiple Hugging Face Transformer models as a cost-optimized Multi-Container Endpoint using Amazon SageMaker.

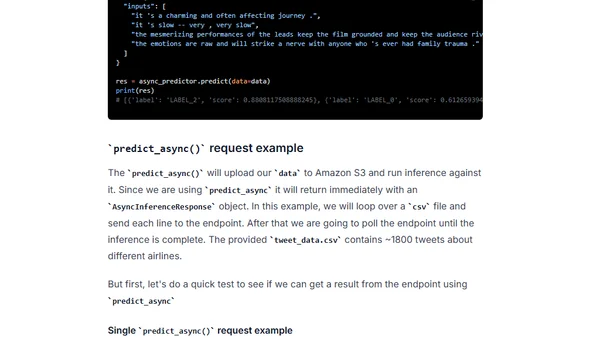

Guide to deploying Hugging Face Transformers models for asynchronous inference using Amazon SageMaker, including setup and configuration.

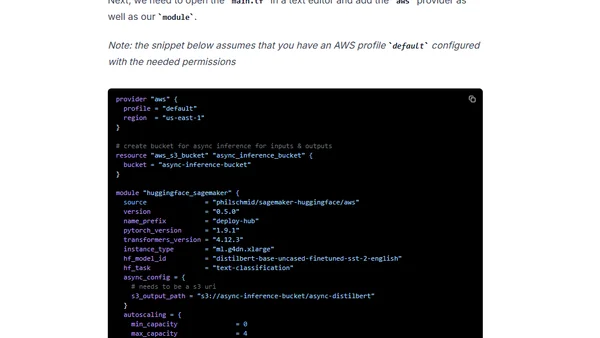

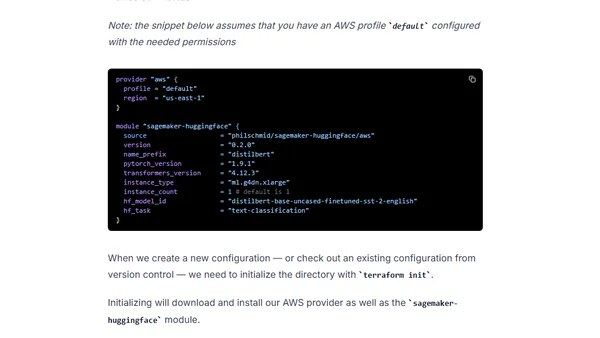

A technical guide on deploying a DistilBERT model to production using Hugging Face Transformers, Amazon SageMaker, and Infrastructure as Code with Terraform.

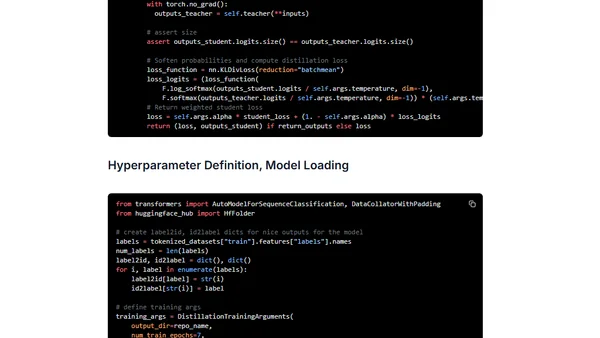

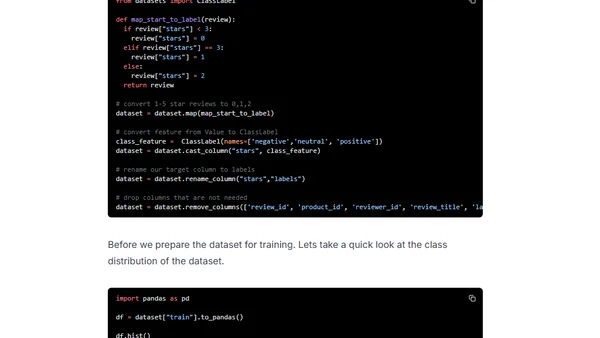

A tutorial on using task-specific knowledge distillation to compress a BERT model for text classification with Transformers and Amazon SageMaker.

A guide to accelerating multilingual BERT fine-tuning using Hugging Face Transformers with distributed training on Amazon SageMaker.

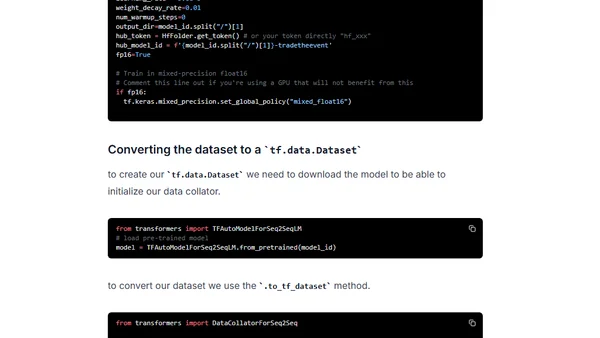

A tutorial on fine-tuning a Hugging Face Transformer model for financial text summarization using Keras and Amazon SageMaker.

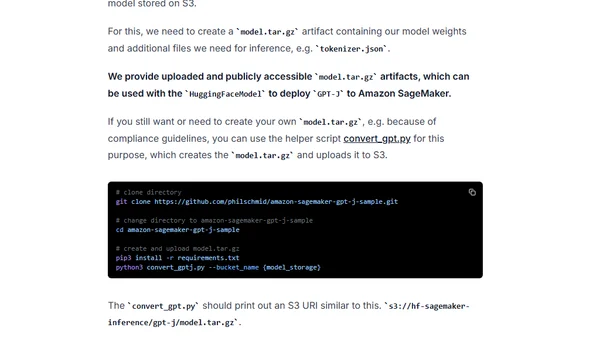

A guide to deploying the GPT-J 6B language model for production inference using Hugging Face Transformers and Amazon SageMaker.

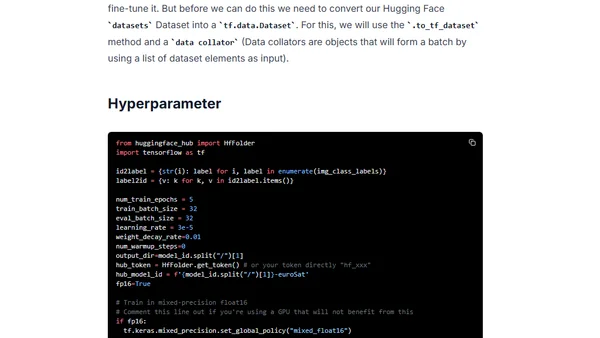

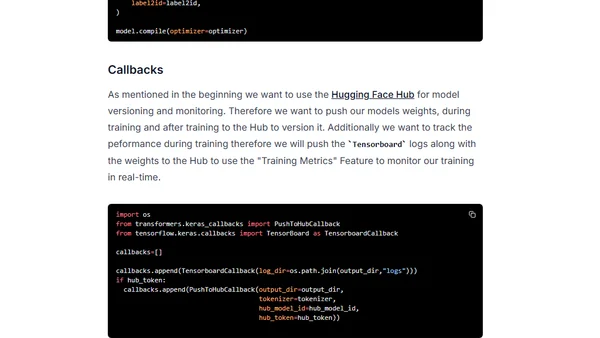

A tutorial on fine-tuning a Vision Transformer (ViT) model for satellite image classification using Hugging Face Transformers and Keras.

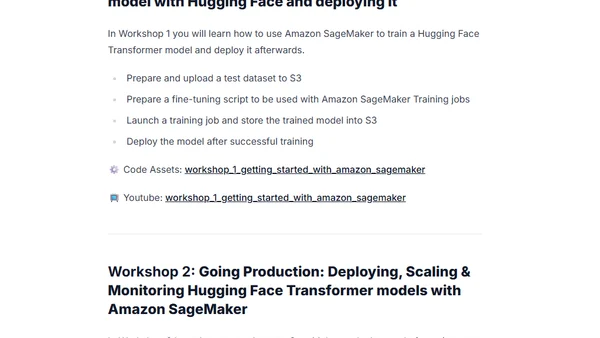

A workshop series on using Hugging Face Transformers with Amazon SageMaker for enterprise-scale NLP, covering training, deployment, and MLOps.

A tutorial on fine-tuning a non-English BERT model using Hugging Face Transformers and Keras for Named Entity Recognition tasks.

Guide to deploying Hugging Face Transformer models using Amazon SageMaker Serverless Inference for cost-effective ML prototypes.

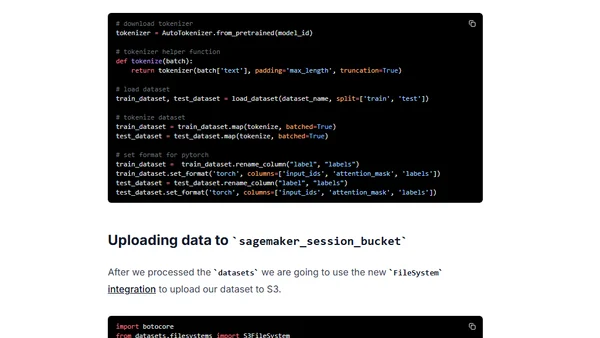

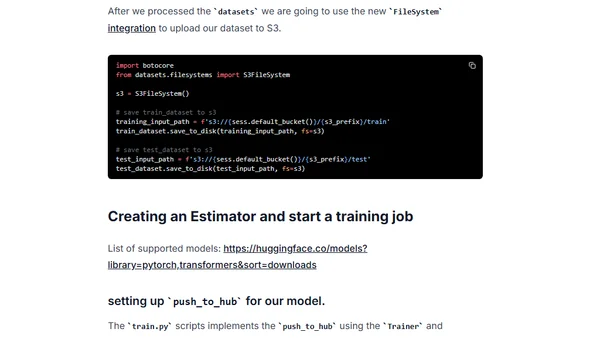

Guide to fine-tuning a Hugging Face BERT model for text classification using Amazon SageMaker and the new Training Compiler to accelerate training.

Learn how to integrate the Hugging Face Hub as a model registry with Amazon SageMaker for MLOps, including training and deployment.

A guide to attending AWS re:Invent 2021 machine learning and NLP sessions remotely, featuring keynotes and top session recommendations.

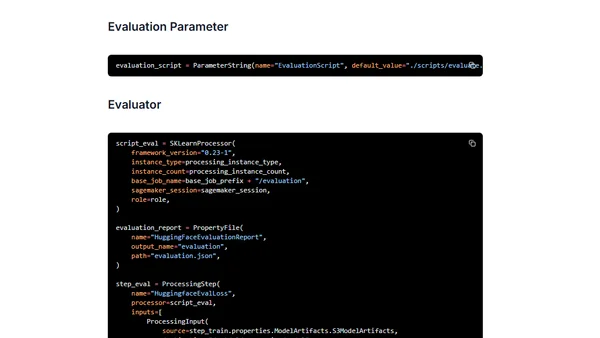

Guide to building an end-to-end MLOps pipeline for Hugging Face Transformers using Amazon SageMaker Pipelines, from training to deployment.

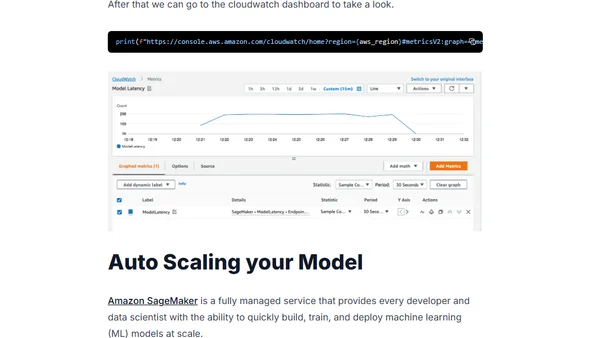

A guide to deploying and auto-scaling Hugging Face Transformer models for real-time inference using Amazon SageMaker.