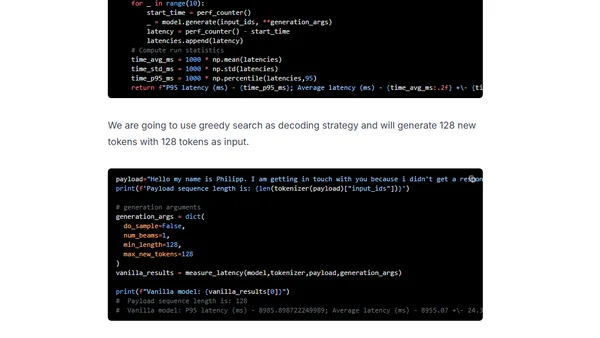

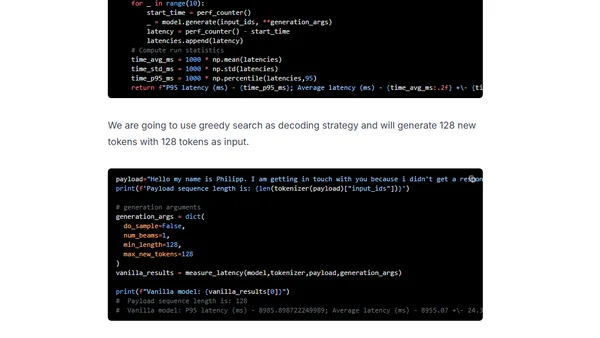

Accelerate GPT-J inference with DeepSpeed-Inference on GPUs

Learn to optimize GPT-J inference using DeepSpeed-Inference and Hugging Face Transformers for faster GPU performance.

Philipp Schmid is a Staff Engineer at Google DeepMind, building AI Developer Experience and DevRel initiatives. He specializes in LLMs, RLHF, and making advanced AI accessible to developers worldwide.

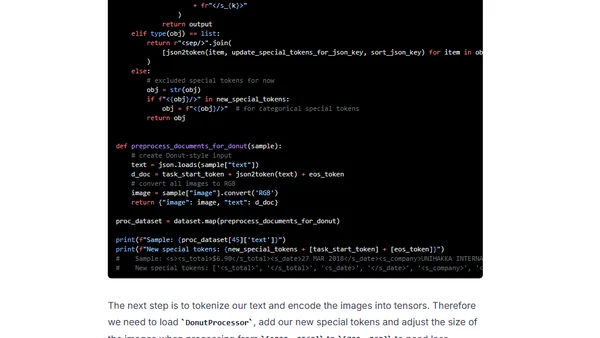

A tutorial on fine-tuning the Donut model for document parsing using Hugging Face Transformers and the SROIE dataset.

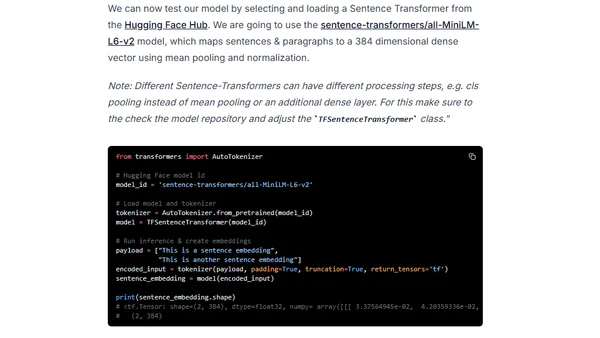

A tutorial on using Sentence Transformers models with TensorFlow and Keras to create text embeddings for semantic search and similarity tasks.

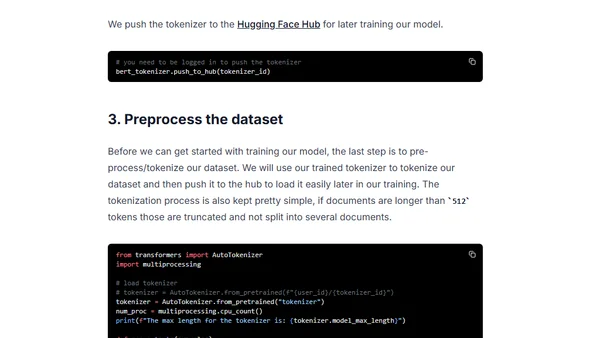

A tutorial on pre-training a BERT model from scratch using Hugging Face Transformers and Habana Gaudi accelerators on AWS.

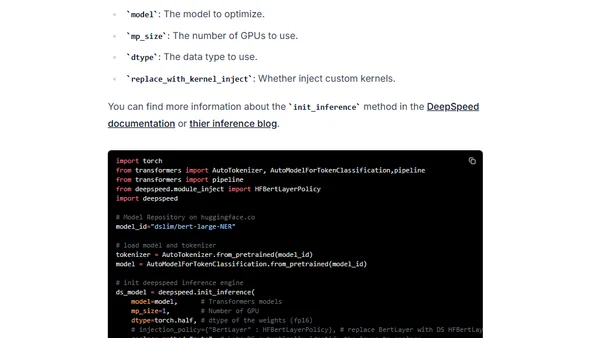

Learn to optimize BERT and RoBERTa models for faster GPU inference using DeepSpeed-Inference, reducing latency from 30ms to 10ms.

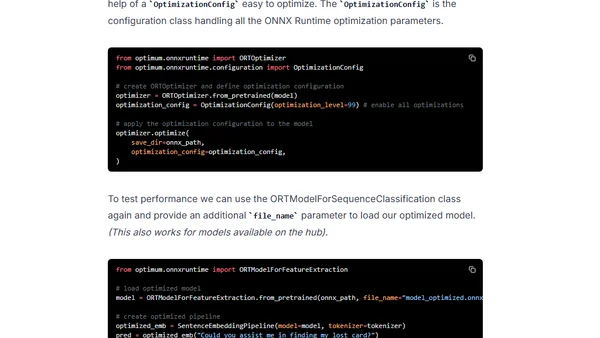

Learn to optimize Sentence Transformers models for faster inference using Hugging Face Optimum, ONNX Runtime, and dynamic quantization.

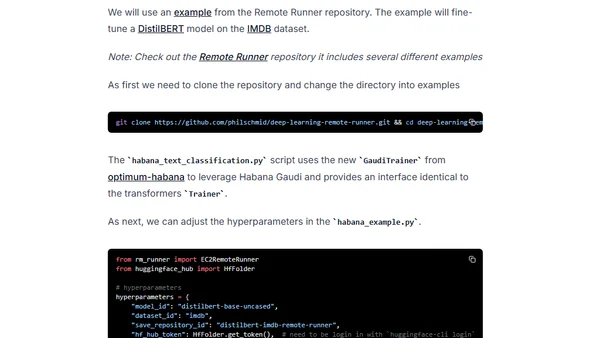

A guide to simplifying deep learning workflows using AWS EC2 Remote Runner and Habana Gaudi processors for efficient, cost-effective model training.

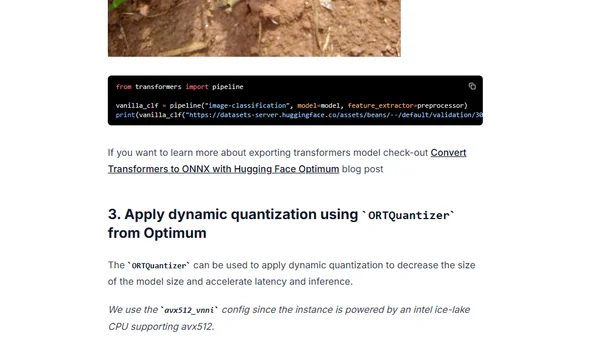

Learn to accelerate Vision Transformer (ViT) models using quantization with Hugging Face Optimum and ONNX Runtime for improved latency.

Learn to optimize Hugging Face Transformers models for GPU inference using Optimum and ONNX Runtime to reduce latency.

Learn how to fine-tune the XLM-RoBERTa model for multilingual text classification using Hugging Face libraries on cost-efficient Habana Gaudi AWS instances.

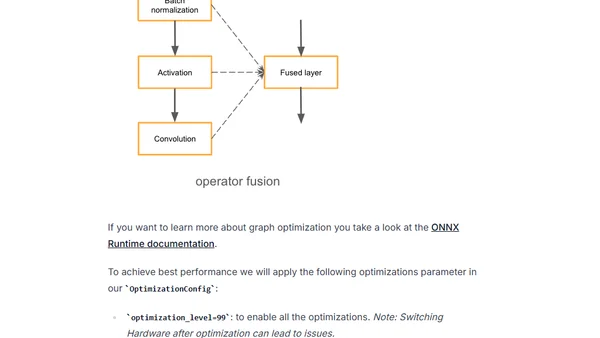

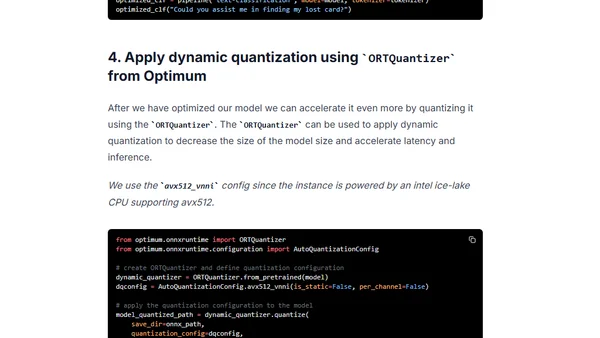

Learn to optimize Hugging Face Transformers models using Optimum and ONNX Runtime for faster inference with dynamic quantization.

A guide on converting Hugging Face Transformers models to the ONNX format using the Optimum library for optimized deployment.

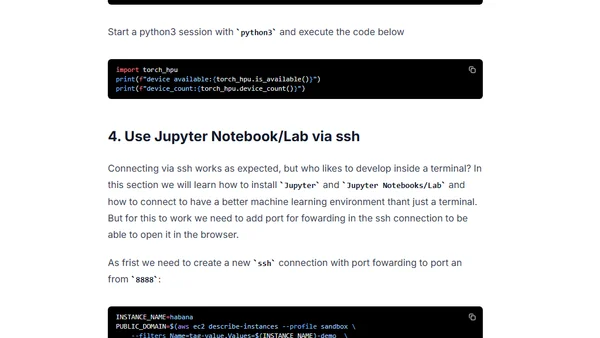

Guide to setting up a deep learning environment on AWS using Habana Gaudi accelerators and Hugging Face libraries for transformer models.

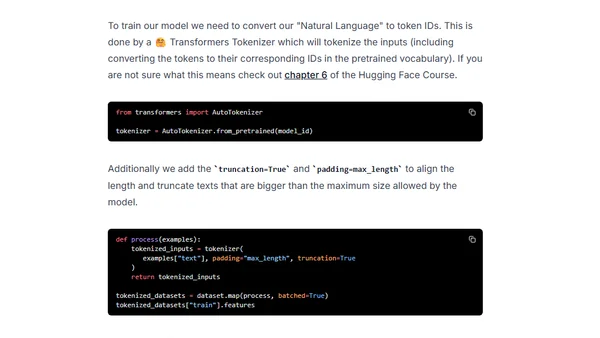

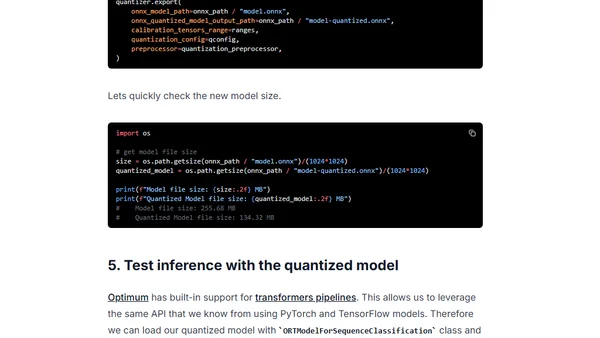

Learn how to use Hugging Face Optimum and ONNX Runtime to apply static quantization to a DistilBERT model, achieving a ~3x latency improvement.

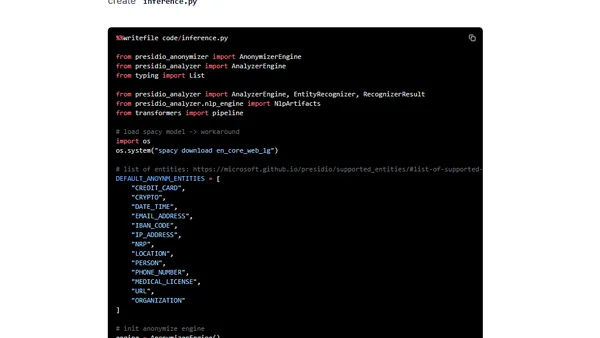

A technical guide on using Hugging Face Transformers and Amazon SageMaker to detect and anonymize Personally Identifiable Information (PII) in text.

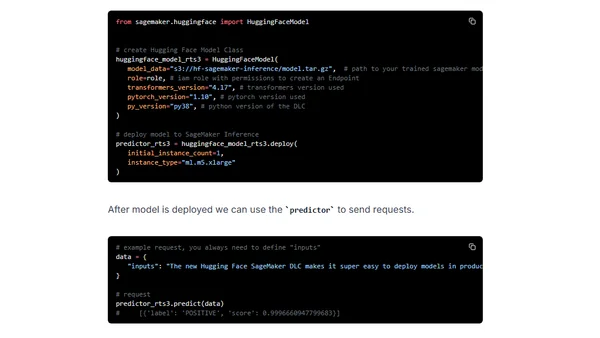

Compares Amazon SageMaker's four inference options for deploying Hugging Face Transformers models, covering latency, use cases, and pricing.

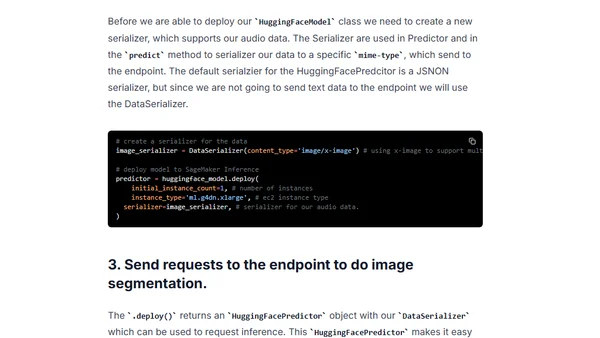

A technical guide on using Hugging Face's SegFormer model with Amazon SageMaker for semantic image segmentation tasks.

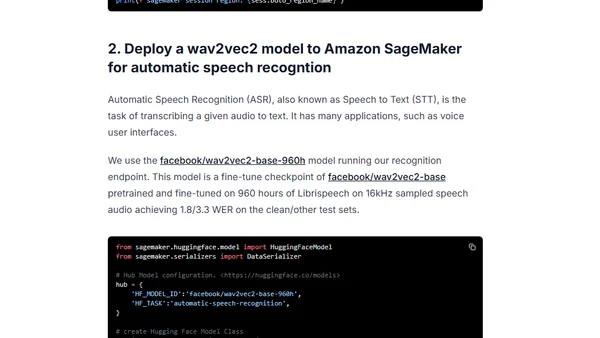

A tutorial on deploying Hugging Face's wav2vec2 model on Amazon SageMaker for automatic speech recognition using the updated SageMaker SDK.

A guide to deploying Hugging Face's DistilBERT model for serverless inference using Amazon SageMaker, including setup and deployment steps.

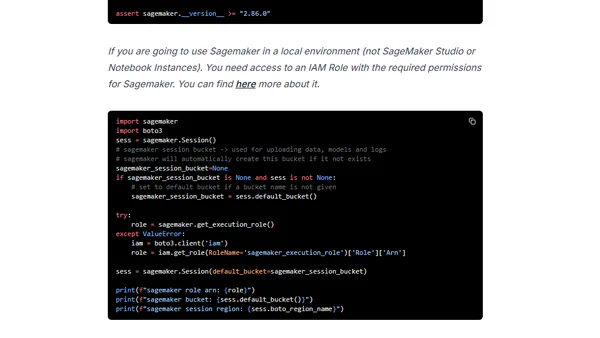

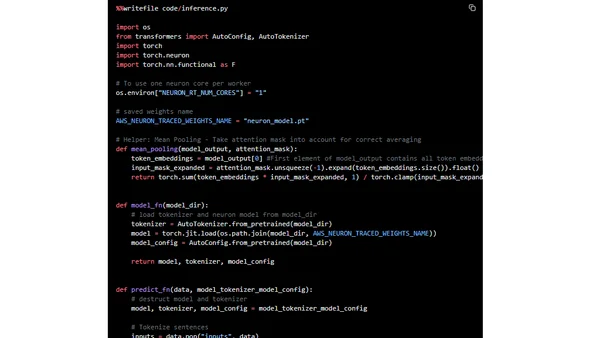

A tutorial on accelerating sentence embeddings using Hugging Face Transformers and AWS Inferentia chips for high-performance semantic search.