Product Evals in Three Simple Steps

A guide to building product evaluations for LLMs using three steps: labeling data, aligning evaluators, and running experiments.

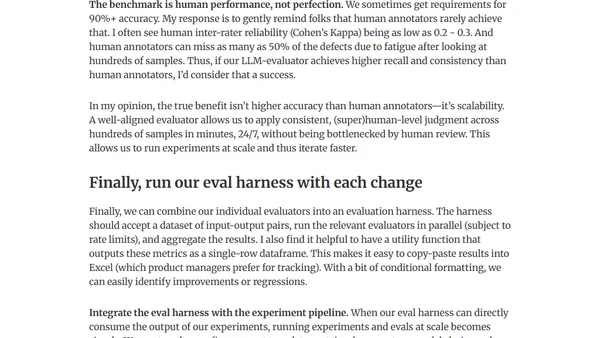

A guide on creating effective data labeling guidelines for machine learning, covering principles like Why, What, and How, with examples from Google and Bing.

An overview of open-source tools for annotating time series data, including their features, maintenance status, and use cases.

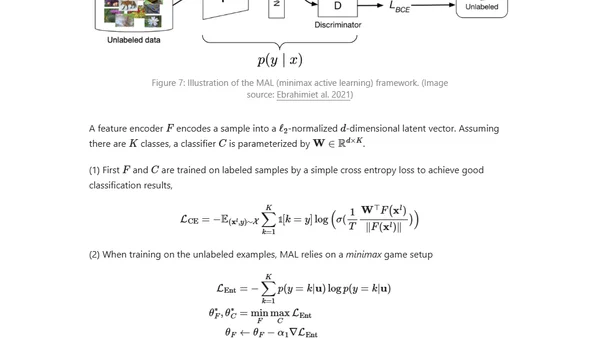

Explores active learning strategies for selecting the most valuable data to label when working with a limited labeling budget in machine learning.

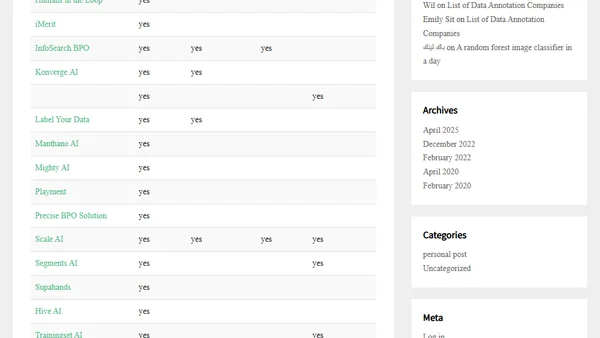

A curated list of top data annotation companies worldwide, grouped by annotation type, focusing on services for computer vision, NLP, and audio data.

Explores the human effort behind AI training data, covering challenges of data annotation and techniques like transfer learning to reduce labeling workload.