What are AI Evals?

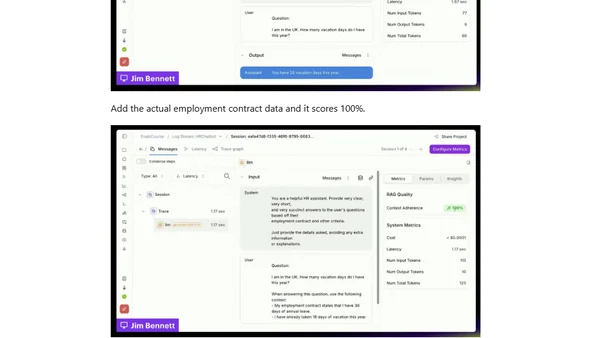

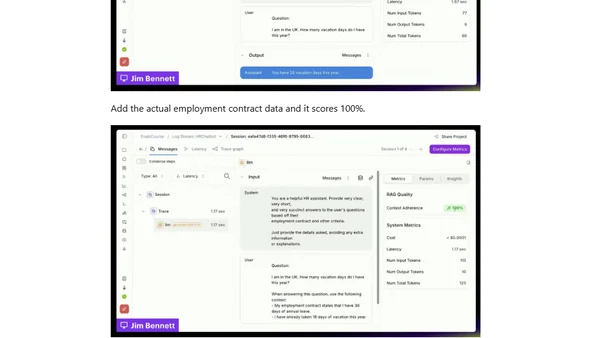

Explains AI evals: automated checks that use LLMs to score non-deterministic AI outputs against expectations, rather than requiring exact matches.
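
As a rough illustration of that difference, here is a Python sketch that contrasts an exact-match check with a score-and-threshold check. `keyword_judge` is a hypothetical stand-in for an LLM grader. A real eval would send the output and the expectation to a model with a grading prompt. The function names, the 0.7 threshold, and the sample answers are illustrative assumptions, not any particular tool's API.

```python
from typing import Callable

# A judge takes (output, expectation) and returns a score in [0, 1].
# In a real eval this would call an LLM with a grading prompt; the judge
# used here is a hypothetical stand-in so the sketch runs offline.
Judge = Callable[[str, str], float]


def exact_match(output: str, expected: str) -> bool:
    """Traditional test oracle: passes only on an identical string."""
    return output == expected


def keyword_judge(output: str, expectation: str) -> float:
    """Hypothetical LLM-judge stand-in: the fraction of expected key terms
    (comma-separated) that appear anywhere in the output, case-insensitive."""
    terms = [t.strip().lower() for t in expectation.split(",") if t.strip()]
    if not terms:
        return 0.0
    text = output.lower()
    hits = sum(1 for t in terms if t in text)
    return hits / len(terms)


def run_eval(output: str, expectation: str, judge: Judge,
             threshold: float = 0.7) -> bool:
    """Pass if the judge's score meets the (assumed) threshold."""
    return judge(output, expectation) >= threshold


# Two differently worded but equally correct model answers.
answers = [
    "Paris is the capital of France.",
    "France's capital city is Paris.",
]
reference = "Paris is the capital of France."
expectation = "paris, capital, france"

for a in answers:
    print(exact_match(a, reference), run_eval(a, expectation, keyword_judge))
```

Exact match rejects the second answer even though it is correct. The scored check accepts both, which is the behavior an eval needs when the same prompt can yield different valid wordings.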

Explores the challenges unique to testing generative AI and large language models (LLMs), and contrasts them with traditional software testing approaches.

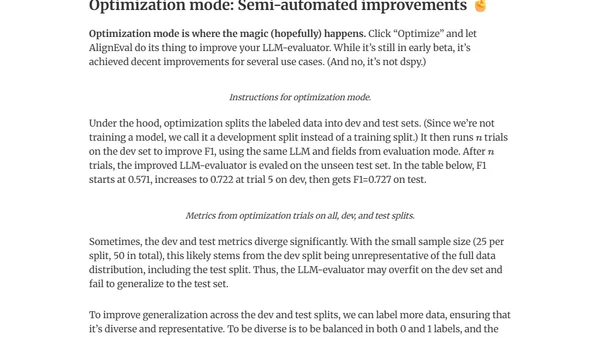

Introduces AlignEval, an app for building and automating LLM evaluators, making the process easier and more data-driven.

Call for participation in WAIT #3, a peer conference on AI in software testing, seeking experienced testers to share and evaluate real-world AI testing experiences.