A Journey from AI to LLMs and MCP - 10 - Sampling and Prompts in MCP — Making Agent Workflows Smarter and Safer

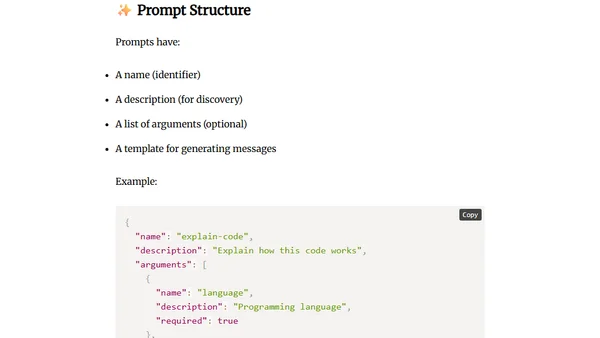

Explains how Sampling and Prompts in the Model Context Protocol (MCP) enable smarter, safer, and more controlled AI agent workflows.

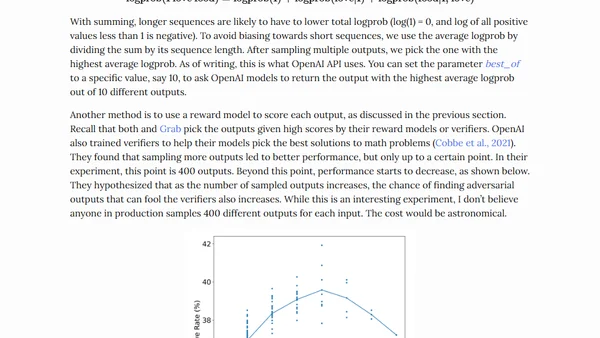

Explains key AI model generation parameters like temperature, top-k, and top-p, and how they control output creativity and consistency.

Explores optimal study design for raking/AIPW estimation, comparing it to IPW methods and analyzing efficiency trade-offs.

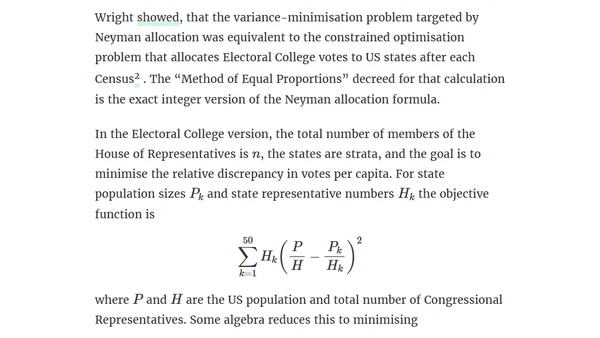

Explains Neyman allocation for optimal stratified sampling and its exact integer solution, linking it to US Electoral College apportionment.

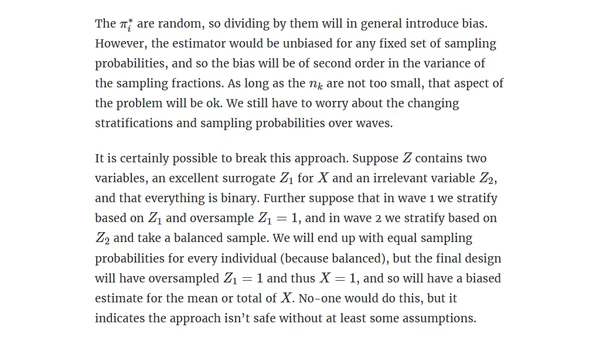

Explores the statistical challenges and potential bias when adjusting stratification variables during multi-wave sampling for population estimation.

A critique of the Oxford-Munich Code of Conduct for Data Scientists, focusing on its technical recommendations on sampling and data retention.

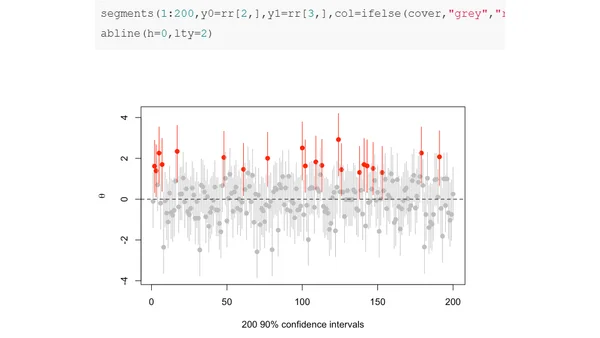

A statistical analysis discussing the limitations of confidence intervals, using examples from small-area sampling to illustrate their weak properties.

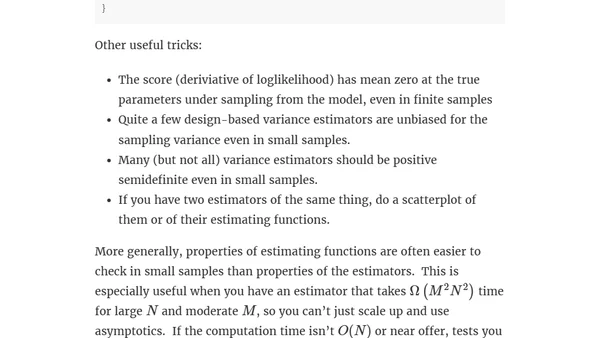

A technical article discussing debugging tricks for complex statistical models with symmetries, focusing on verification and small-sample testing.

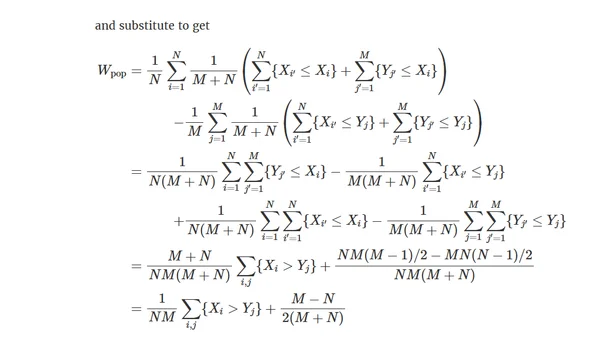

Explores statistical estimation for complex samples, focusing on design-weighted U-statistics and their Hoeffding projections for pair-based analyses.

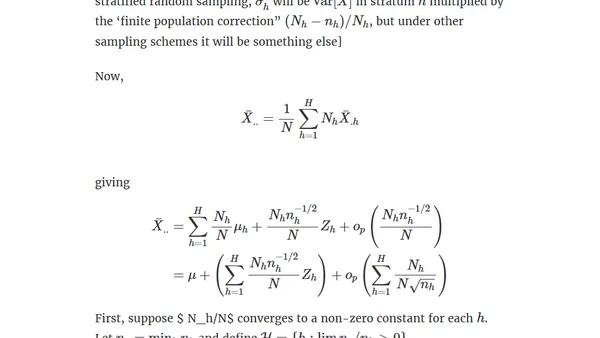

A technical discussion on asymptotic approximations in stratified sampling when sampling probabilities approach zero, relevant for rare disease studies.

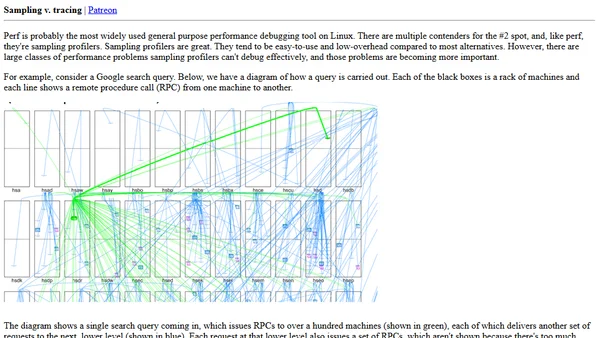

Explains why sampling profilers fail at debugging tail latency and introduces Google's event tracing framework as a solution.