A Journey from AI to LLMs and MCP - 10 - Sampling and Prompts in MCP — Making Agent Workflows Smarter and Safer

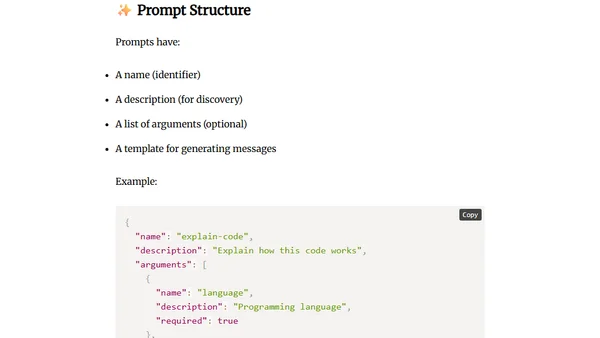

This article, part of a series on AI and LLMs, covers the Sampling and Prompts features of the Model Context Protocol (MCP). It explains how Sampling lets an MCP server request LLM completions from the client to support decision-making, and how Prompts provide reusable templates for guided AI interactions. The post walks through their technical implementation, best practices, and security benefits, and shows how the two combine to create dynamic, user-controlled agent workflows.
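To make the two features concrete, here is a minimal sketch of the JSON-RPC messages involved, built as plain Python dictionaries. It assumes the method names `sampling/createMessage` and `prompts/get` from the MCP specification; the helper functions, the prompt name `summarize_ticket`, and its argument are hypothetical and chosen only for illustration. Check the exact field names against the version of the spec you target.

```python
import json


def build_sampling_request(request_id, user_text, max_tokens=200):
    """Sketch of a server-to-client request asking the client's LLM for a completion."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "sampling/createMessage",  # method name per the MCP spec (assumed current)
        "params": {
            "messages": [
                {"role": "user", "content": {"type": "text", "text": user_text}}
            ],
            "maxTokens": max_tokens,
        },
    }


def build_prompt_get_request(request_id, prompt_name, arguments):
    """Sketch of a client-to-server request that fetches a reusable prompt template."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "prompts/get",  # method name per the MCP spec (assumed current)
        "params": {"name": prompt_name, "arguments": arguments},
    }


if __name__ == "__main__":
    # Hypothetical usage: the prompt name and argument are illustrative only.
    sampling = build_sampling_request(1, "Should this ticket be escalated?")
    prompt = build_prompt_get_request(2, "summarize_ticket", {"ticket_id": "123"})
    print(json.dumps(sampling, indent=2))
    print(json.dumps(prompt, indent=2))
```

Note the direction of each message: the sampling request travels from server to client, so the client (and its user) can review or reject it before any model is called, while the prompt request travels from client to server.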


