Combine Amazon SageMaker and DeepSpeed to fine-tune FLAN-T5 XXL

Guide to fine-tuning the large FLAN-T5 XXL model using Amazon SageMaker managed training and DeepSpeed for optimization.

Philipp Schmid is a Staff Engineer at Google DeepMind, building AI Developer Experience and DevRel initiatives. He specializes in LLMs, RLHF, and making advanced AI accessible to developers worldwide.

A technical guide on fine-tuning large FLAN-T5 models (XL/XXL) using DeepSpeed ZeRO and Hugging Face Transformers for efficient multi-GPU training.

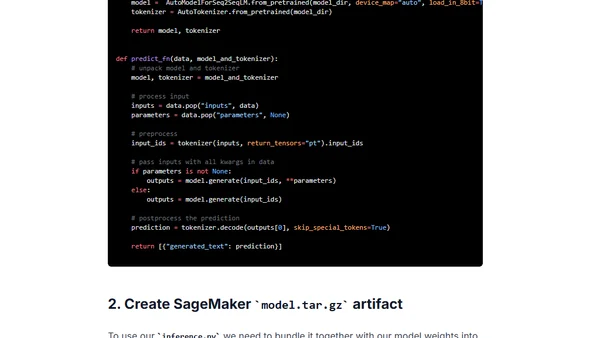

A technical guide on deploying the FLAN-T5-XXL large language model for real-time inference using Amazon SageMaker and Hugging Face.

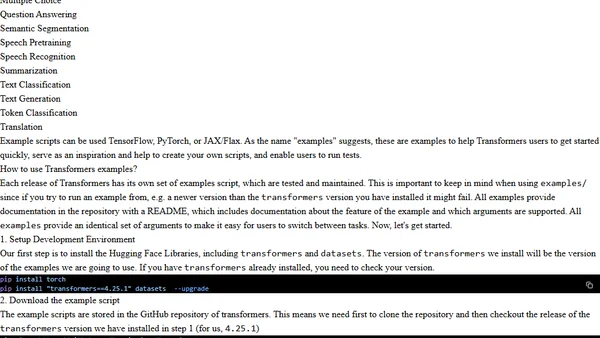

A guide to using the Hugging Face Transformers library, with examples for fine-tuning models like BERT and BART for NLP and computer vision tasks.

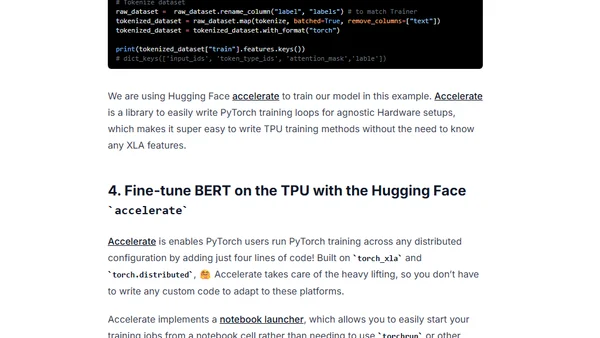

A tutorial on fine-tuning a BERT model for text classification using Hugging Face Transformers and Google Cloud TPUs with PyTorch.

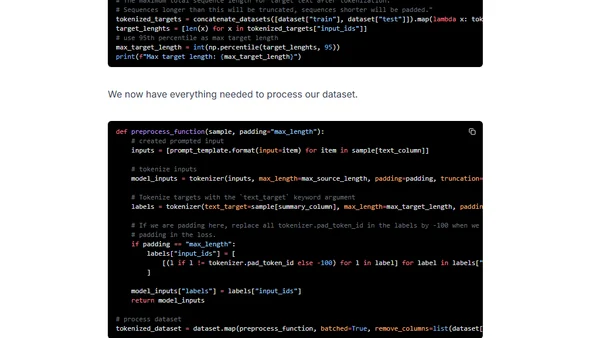

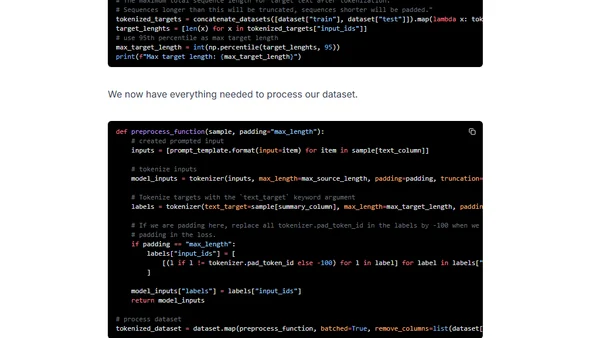

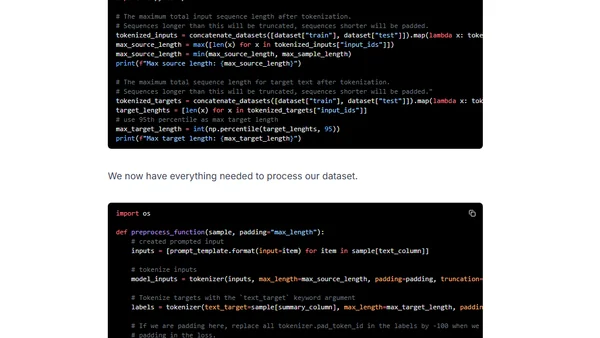

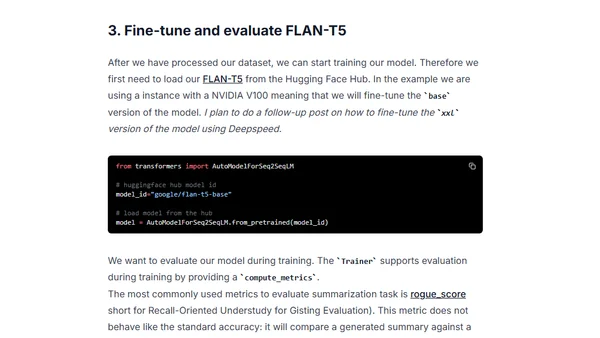

A tutorial on fine-tuning Google's FLAN-T5 model for summarizing chat and dialogue using the samsum dataset and Hugging Face Transformers.

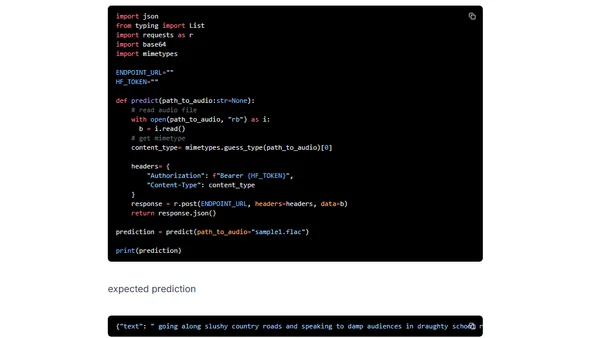

A tutorial on deploying OpenAI's Whisper speech recognition model using Hugging Face Inference Endpoints for scalable transcription APIs.

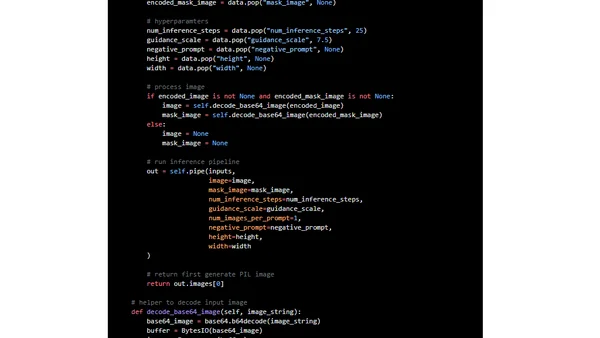

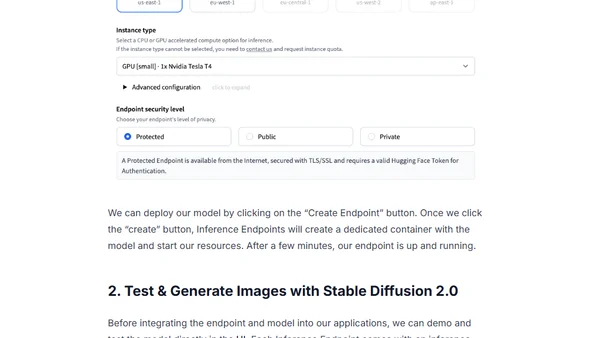

A tutorial on using Hugging Face Inference Endpoints to deploy and run Stable Diffusion 2 for AI image inpainting via a custom API.

A tutorial on deploying Stable Diffusion 2.0 for image generation using Hugging Face Inference Endpoints and integrating it via an API.

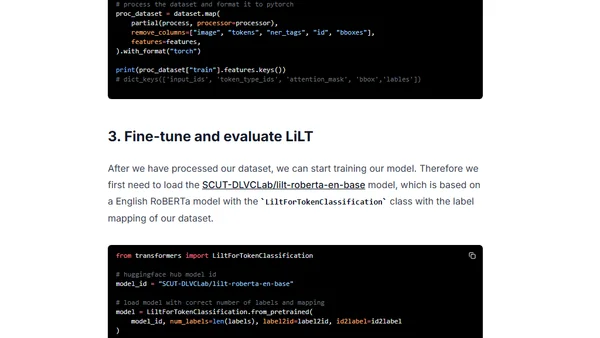

A tutorial on fine-tuning the LiLT model for language-agnostic document understanding and information extraction using Hugging Face Transformers.

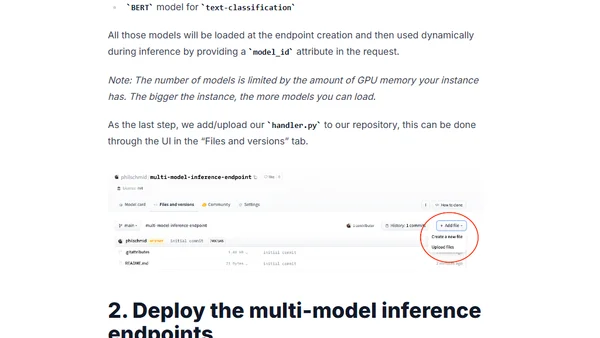

Learn how to deploy multiple ML models on a single GPU using Hugging Face Inference Endpoints for scalable, cost-effective inference.

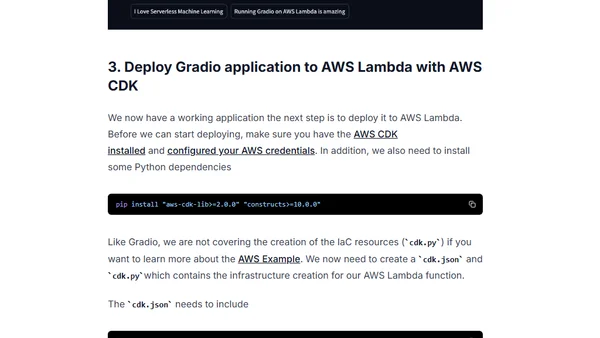

A tutorial on deploying a Hugging Face Gradio machine learning app for sentiment analysis to AWS Lambda using a serverless architecture.

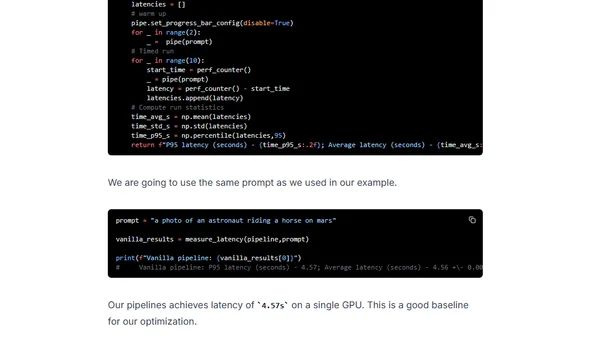

Learn to optimize Stable Diffusion for faster GPU inference using DeepSpeed-Inference and Hugging Face Diffusers.

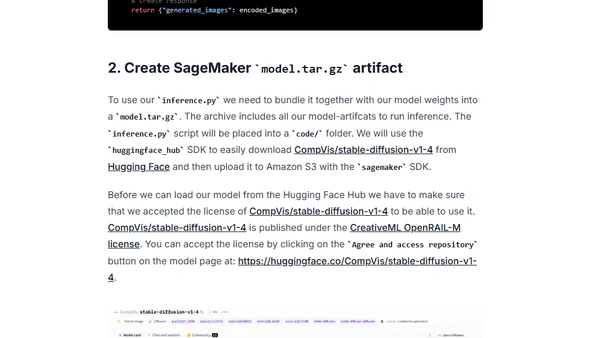

A technical guide on deploying the Stable Diffusion text-to-image model to Amazon SageMaker for real-time inference using the Hugging Face Diffusers library.

A tutorial on deploying the T5 11B language model for inference using Hugging Face Inference Endpoints on a budget.

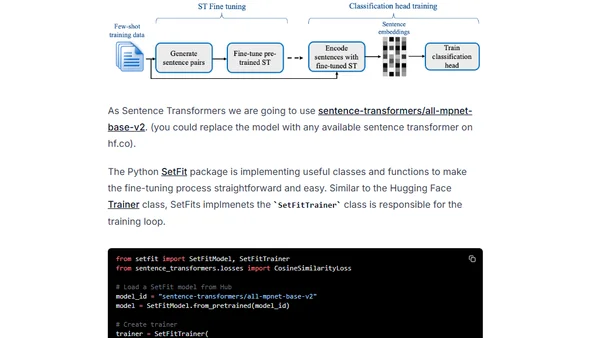

Learn how SetFit, a new approach from Intel Labs and Hugging Face, outperforms GPT-3 for text classification with minimal labeled data.

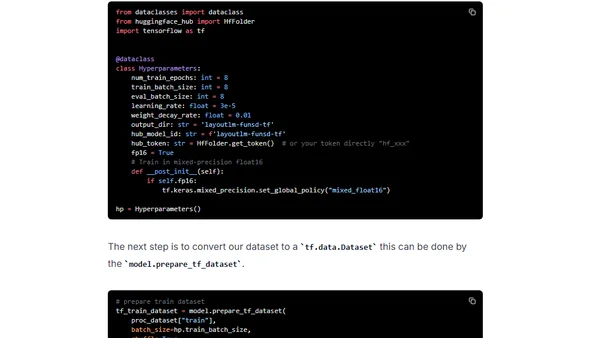

A tutorial on fine-tuning Microsoft's LayoutLM model for document understanding using TensorFlow, Keras, and the FUNSD dataset.

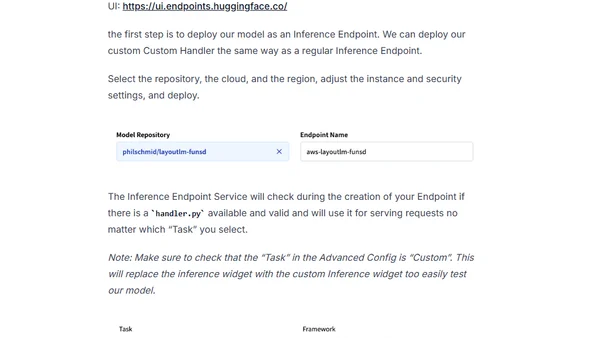

A tutorial on deploying the LayoutLM document understanding model using Hugging Face Inference Endpoints for production API integration.

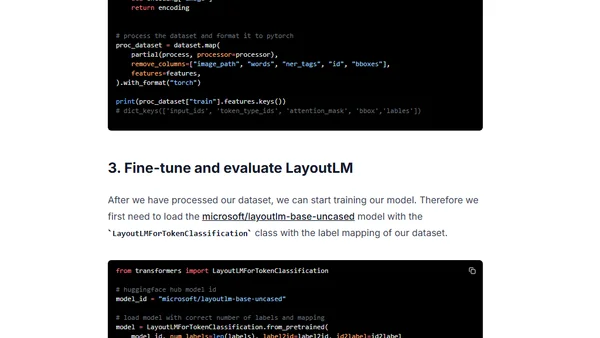

A tutorial on fine-tuning Microsoft's LayoutLM model for document understanding and information extraction using the Hugging Face Transformers library.

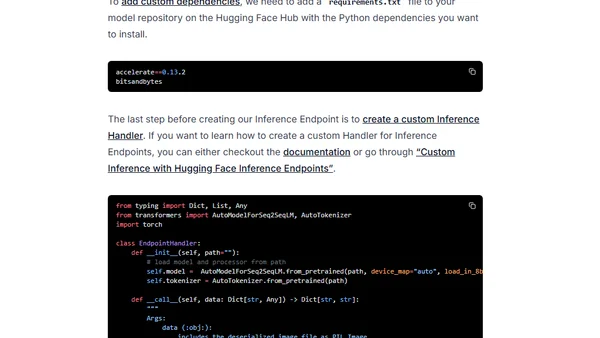

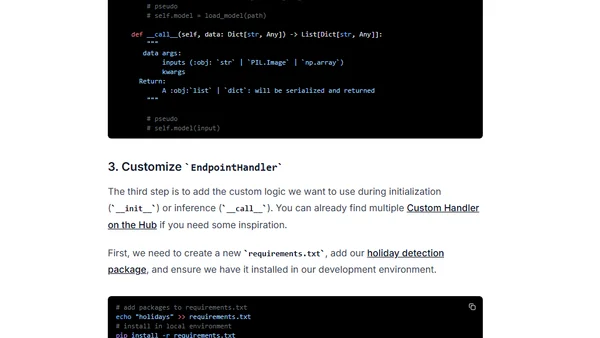

A tutorial on creating custom inference handlers for Hugging Face Inference Endpoints to add business logic and dependencies.