Prompting Fundamentals and How to Apply them Effectively

Explains core prompting fundamentals for effective LLM use, including mental models, role assignment, and practical workflow with examples.

Eugene Yan is a Principal Applied Scientist at Amazon, building AI-powered recommendation systems and experiences. He shares insights on RecSys, LLMs, and applied machine learning, while mentoring and investing in ML startups.

A practical guide sharing lessons learned from a year of building real-world applications with Large Language Models (LLMs).

A guide to effective and ineffective evaluation methods for LLMs on tasks like classification, summarization, and translation, including practical metrics.

Explains why mocking ML models in unit tests is problematic and offers guidelines for effectively testing machine learning code.

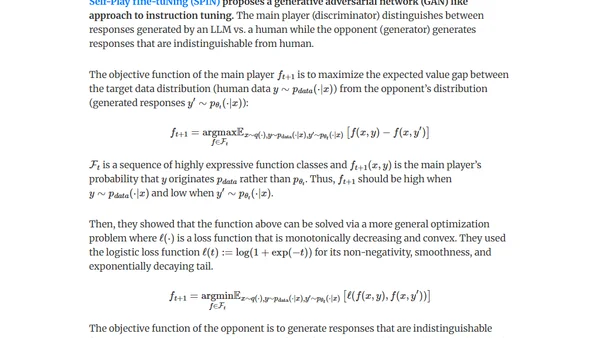

Explores methods for generating synthetic data (distillation and self-improvement) for training LLMs across pretraining, instruction-tuning, and preference-tuning.

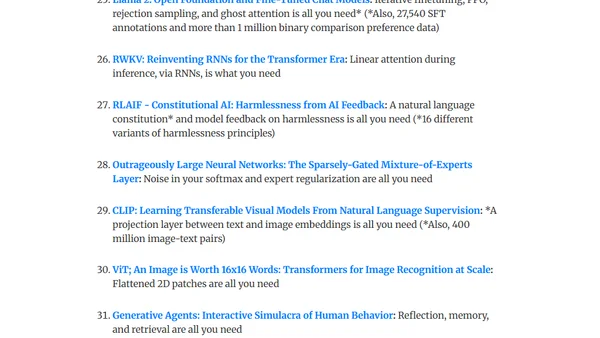

A curated reading list of fundamental language modeling papers with summaries, designed to help start a weekly paper club for learning and discussion.

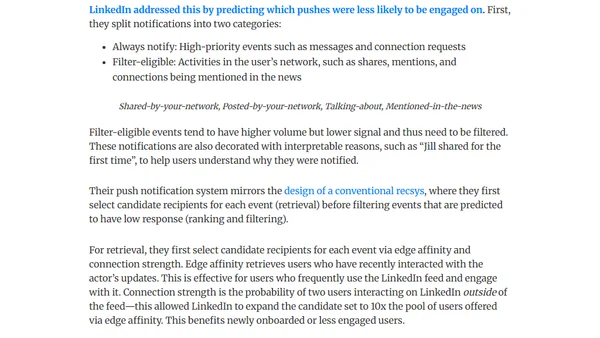

Analyzes push notifications as a recommender system, discussing intent, personalization, timeliness, and user engagement challenges.

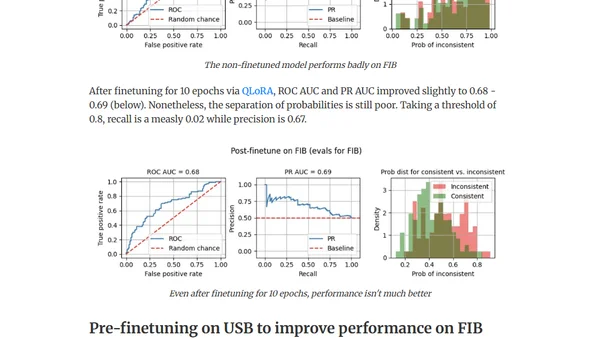

Explores using out-of-domain data to improve LLM fine-tuning for detecting factual inconsistencies (hallucinations) in text summaries.

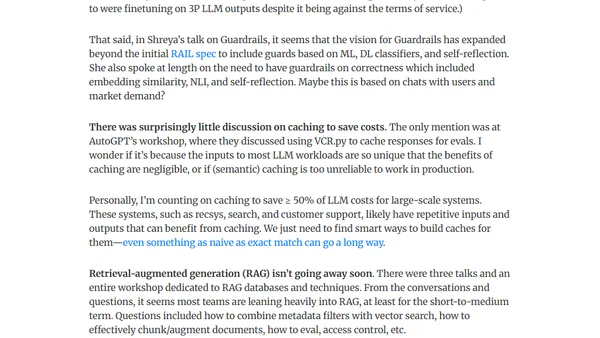

Key takeaways from the AI Engineer Summit 2023, focusing on challenges in LLM deployment like evaluation methods and serving costs.

A summary of a keynote talk on essential building blocks for production LLM systems, covering evaluations, RAG, and guardrails.

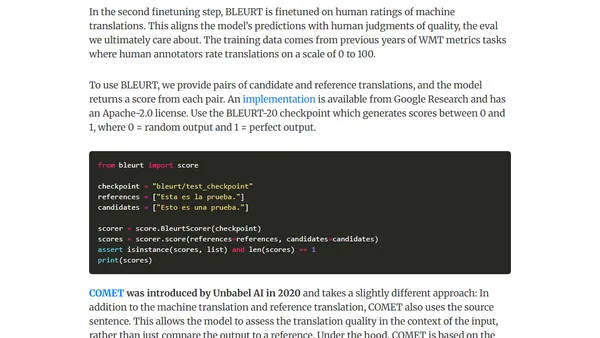

Explores methods for evaluating abstractive text summaries and detecting hallucinations, covering key evaluation dimensions and NLI- and QA-based metrics.

A guide to selecting the right LLM architectural patterns (like RAG, fine-tuning, caching) to solve common production challenges such as performance metrics and data constraints.

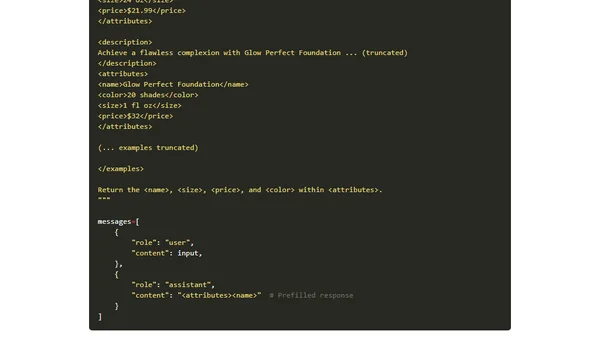

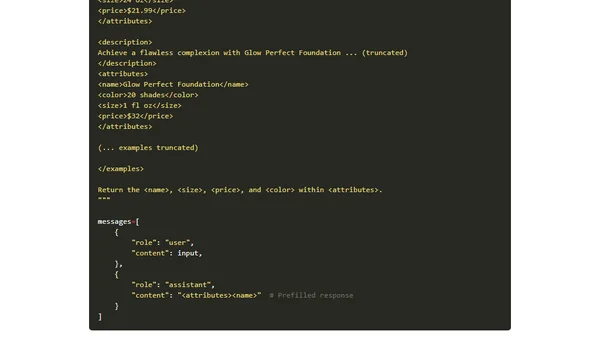

A practical guide outlining seven key patterns for integrating Large Language Models (LLMs) into robust, production-ready systems and products.

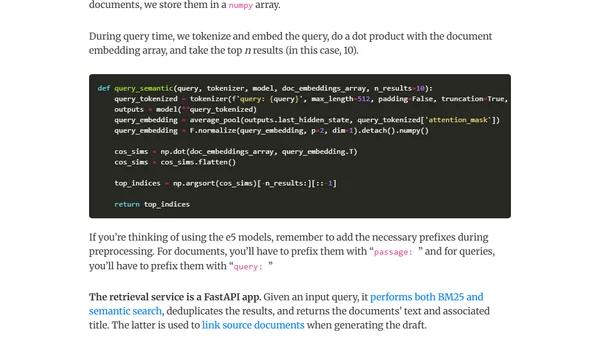

A technical overview of Obsidian-Copilot, a prototype AI assistant for drafting and reflecting within the Obsidian note-taking app using retrieval-augmented generation.

Explains the intuition behind the attention mechanism and the Transformer architecture, focusing on how they address problems in machine translation and language modeling.

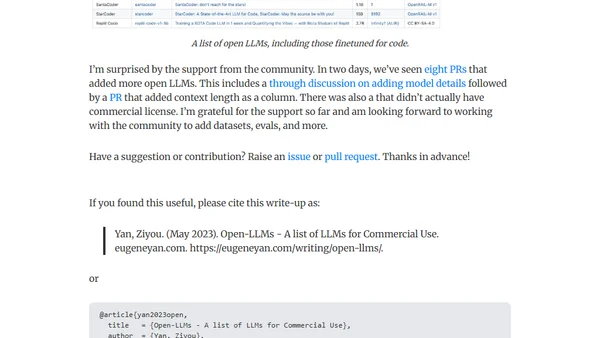

A curated list of open-source Large Language Models (LLMs) available for commercial use, including community-contributed updates and details.

Explores user interfaces for LLMs that minimize text chat, using clicks and user context for more intuitive interactions.

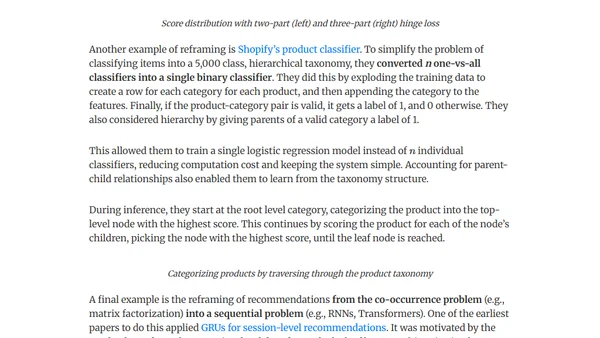

Explores essential design patterns for building efficient and maintainable machine learning systems in production, focusing on data pipelines and best practices.

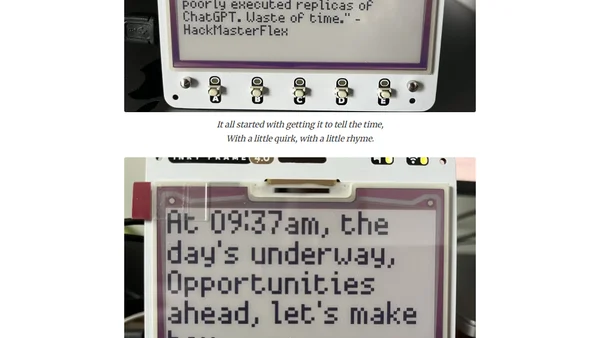

A developer explores using LLMs with a memory-constrained Raspberry Pi Pico to build a witty e-ink display that generates content from news feeds.

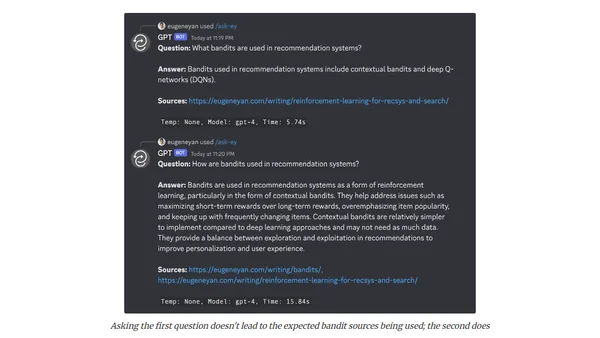

A developer shares experiments building LLM-powered tools for research, reflection, and planning, including URL summarizers, SQL agents, and advisory boards.