Train and Deploy open Embedding Models on Amazon SageMaker

A guide to fine-tuning and deploying custom embedding models for RAG applications on Amazon SageMaker using Sentence Transformers v3.

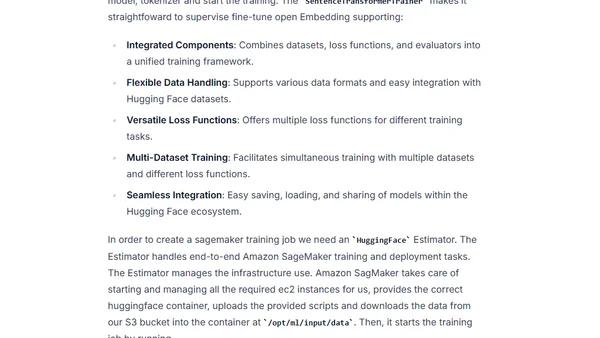

A tutorial on implementing a local RAG system using Phi-3, Semantic Kernel, and TextMemory in a C# console application.

Explains the limitations of Large Language Models (LLMs) and introduces Retrieval Augmented Generation (RAG) as a solution for incorporating proprietary data.

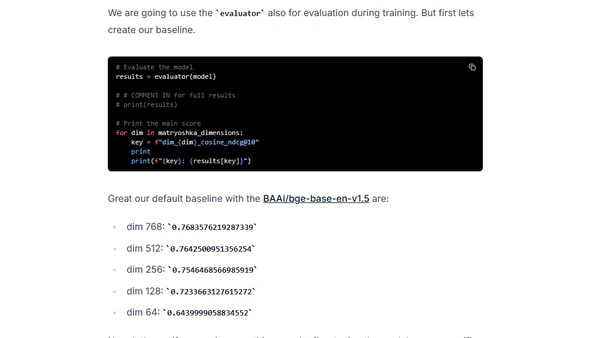

A guide to fine-tuning embedding models for RAG applications using Sentence Transformers 3, featuring Matryoshka Representation Learning for efficiency.

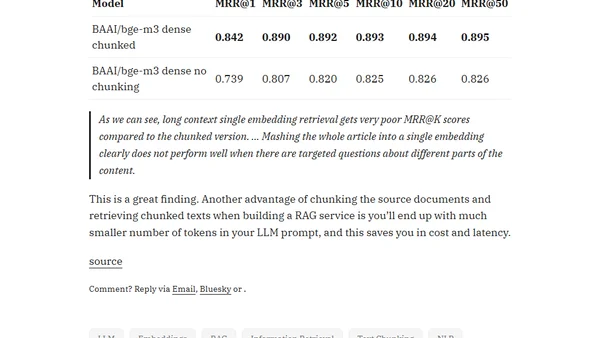

An experiment comparing retrieval performance of chunked vs. non-chunked documents using long-context embedding models like BGE-M3.

A practical guide sharing lessons learned from a year of building real-world applications with Large Language Models (LLMs).

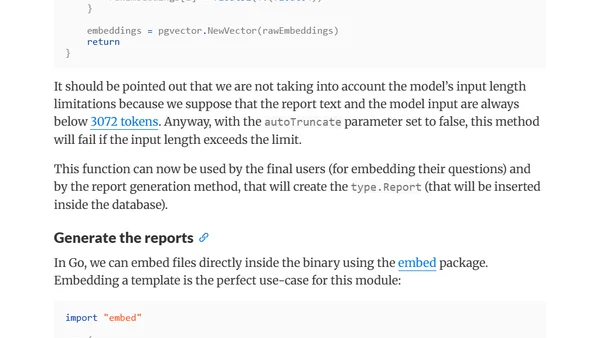

A technical guide on building a Retrieval-Augmented Generation (RAG) system in Go to query PostgreSQL tabular data using Google's Gemini LLM.

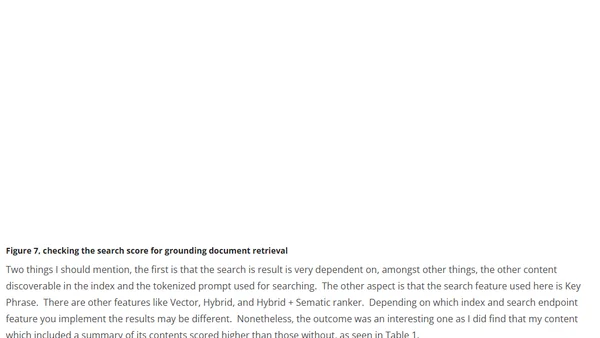

A technical guide on using Azure AI Language Studio to summarize and optimize grounding documents for improving RAG-based AI solutions.

Explains how to implement document retrieval with the Azure OpenAI Assistants API using a custom RAG approach, as the retrieval tool is not yet natively supported.

A simple explanation of Retrieval-Augmented Generation (RAG), covering its core components: LLMs, context, and vector databases.

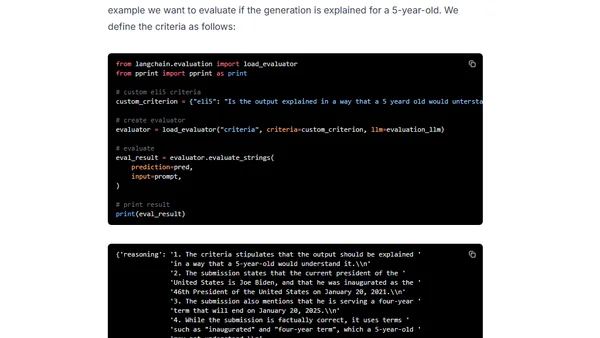

A hands-on guide to evaluating LLMs and RAG systems using LangChain and Hugging Face, covering criteria-based and pairwise evaluation methods.

A guide to selecting the right LLM architectural patterns (such as RAG, fine-tuning, and caching) to address common production challenges, including meeting performance metrics and working within data constraints.

A practical guide outlining seven key patterns for integrating Large Language Models (LLMs) into robust, production-ready systems and products.