4/23/2021



On Information Theoretic Bounds for SGD

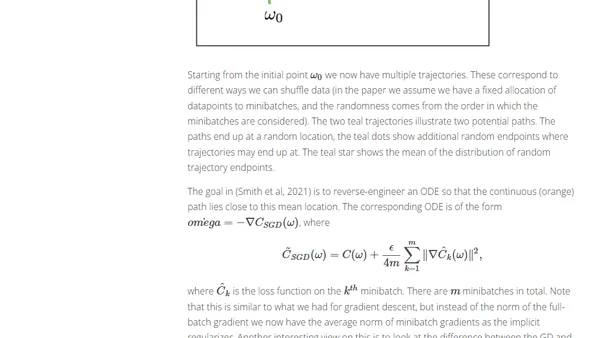

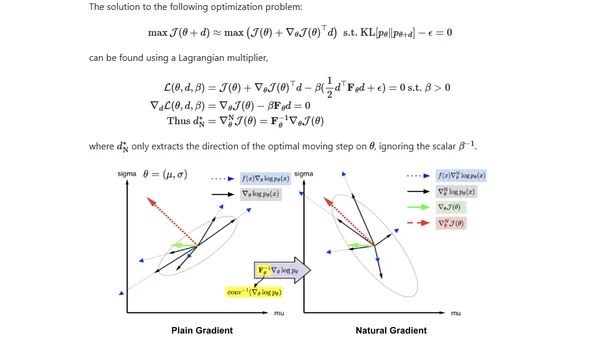

This post explores how mutual information and KL divergence can be used to derive information-theoretic generalization bounds for stochastic gradient descent (SGD).
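The sketch below shows how the two quantities can fit together. It assumes the standard mutual-information bound of Xu and Raginsky (2017), not any specific result from this post. The bound says that if the loss $\ell(w, Z)$ is $\sigma$-sub-Gaussian for every fixed $w$, then $|\mathbb{E}[L_\mu(W) - L_S(W)]| \le \sqrt{2\sigma^2 I(S;W)/n}$. Here $S$ is a training set of $n$ samples and $W$ is the learned weight vector.

KL divergence enters because $I(S;W) \le \mathbb{E}_S[D_{\mathrm{KL}}(P_{W|S} \,\|\, Q)]$ holds for any data-independent reference distribution $Q$. When two Gaussians share the covariance $s^2 I$, the KL divergence between them has the closed form $\|\mu_P - \mu_Q\|^2 / (2s^2)$. This closed form is what makes Gaussian-noise variants of SGD convenient to analyze this way.

The function names and the numbers in the usage lines are hypothetical and chosen only for illustration.

```python
import math
from typing import Sequence


def gaussian_kl_isotropic(mu_p: Sequence[float], mu_q: Sequence[float], std: float) -> float:
    """KL( N(mu_p, std^2 I) || N(mu_q, std^2 I) ) = ||mu_p - mu_q||^2 / (2 std^2).

    Hypothetical helper: only valid when both Gaussians share the same
    isotropic covariance std^2 * I.
    """
    if len(mu_p) != len(mu_q):
        raise ValueError("means must have the same dimension")
    if std <= 0:
        raise ValueError("std must be positive")
    sq_dist = sum((a - b) ** 2 for a, b in zip(mu_p, mu_q))
    return sq_dist / (2.0 * std ** 2)


def mi_generalization_bound(mutual_info: float, sub_gaussian: float, n: int) -> float:
    """Upper bound on |E[generalization gap]|: sqrt(2 * sigma^2 * I(S;W) / n).

    Assumes the loss is sigma-sub-Gaussian for every fixed w
    (the Xu-Raginsky form); `mutual_info` may be any upper bound on I(S;W).
    """
    if mutual_info < 0 or n <= 0:
        raise ValueError("need mutual_info >= 0 and n > 0")
    return math.sqrt(2.0 * sub_gaussian ** 2 * mutual_info / n)


# Hypothetical toy setting: P_{W|S} = N(mu_S, I) with ||mu_S||^2 <= 5 for every S,
# and reference Q = N(0, I). The worst case KL then upper-bounds I(S;W).
kl = gaussian_kl_isotropic([1.0, 2.0], [0.0, 0.0], std=1.0)  # (1 + 4) / 2
bound = mi_generalization_bound(kl, sub_gaussian=0.5, n=1000)
print(kl, bound)
```

In this toy setting, a KL of 2.5 nats, a sub-Gaussian parameter of 0.5 and 1000 samples give a bound of roughly 0.035. The bound tightens as $n$ grows, and it tightens as the weights depend less on the particular sample, which is the lever that information-theoretic analyses of SGD try to control.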