Humans Need Entropy

Explores the concept of 'human collapse' from a tech podcast, arguing for seeking 'entropy' and new inputs to stay creative and unpredictable, with mentions of AI tools.

Argues that AI image generation won't replace human artists, using information theory to explain their unique creative value.

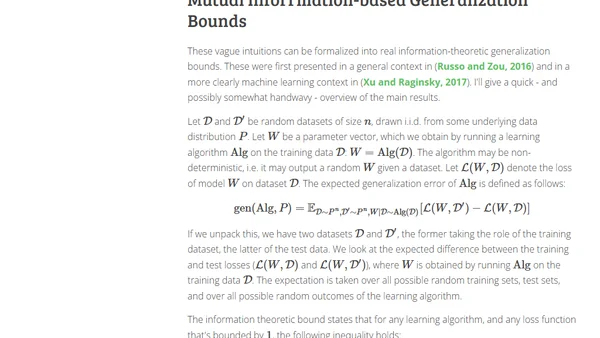

Explores how mutual information and KL divergence can be used to derive information-theoretic generalization bounds for Stochastic Gradient Descent (SGD).

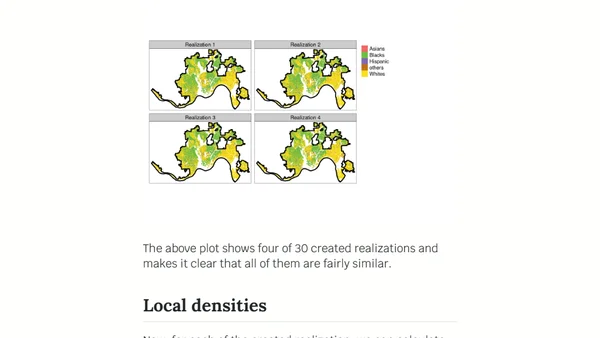

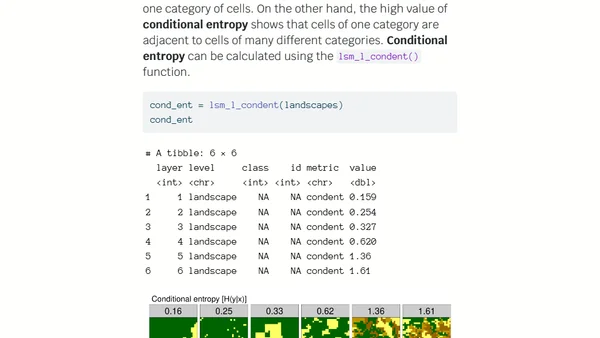

A technical guide on using the R 'raceland' package to analyze and visualize racial diversity and segregation using spatial data and information theory metrics.

Explains how information theory and R code can quantify and classify spatial patterns, with examples from landscape ecology.

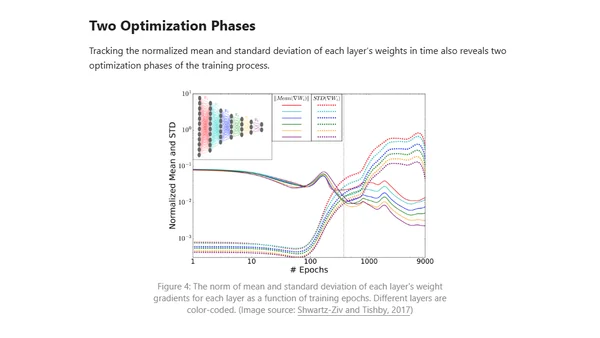

Explores applying information theory, specifically the Information Bottleneck method, to analyze training phases and learning bounds in deep neural networks.