Final notes on ‘Prompt Engineering for LLMs’

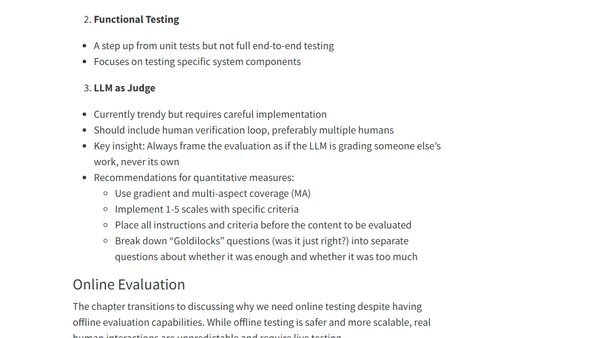

This article summarizes key takeaways from a book on prompt engineering for large language models (LLMs), focusing on Chapter 10, which covers evaluating LLM applications. It describes an evaluation framework spanning model capabilities, individual interactions, and system integration. It then explains offline evaluation using example suites and synthetic data, scoring approaches such as gold standard matching and functional testing, the 'LLM as Judge' method, and why online A/B testing is necessary for real-world deployment.
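To make the difference between gold standard matching and functional testing concrete, here is a minimal offline-evaluation sketch. It is not taken from the book: the example suite, `stub_model` (a stand-in for a real LLM call), and the choice of "output parses as an integer" as the functional check are all assumptions made for illustration.

```python
from typing import Callable

# Hypothetical example suite: each case pairs a prompt with a gold answer.
EXAMPLES = [
    {"prompt": "2 + 2", "gold": "4"},
    {"prompt": "3 * 5", "gold": "15"},
    {"prompt": "10 - 7", "gold": "3"},
]


def stub_model(prompt: str) -> str:
    """Stand-in for an LLM call (assumption); one answer is well-formed but wrong."""
    canned = {"2 + 2": "4", "3 * 5": " 15 ", "10 - 7": "4"}
    return canned[prompt]


def gold_match(output: str, gold: str) -> bool:
    """Gold standard matching: the output must equal the reference answer."""
    return output.strip() == gold


def functional_check(output: str) -> bool:
    """Functional test (illustrative): the output must parse as an integer."""
    try:
        int(output.strip())
        return True
    except ValueError:
        return False


def evaluate(model: Callable[[str], str], examples: list[dict]) -> dict:
    """Run the model over the suite and report both scores as fractions."""
    outputs = [model(ex["prompt"]) for ex in examples]
    gold_hits = sum(gold_match(o, ex["gold"]) for o, ex in zip(outputs, examples))
    func_hits = sum(functional_check(o) for o in outputs)
    n = len(examples)
    return {
        "gold_accuracy": gold_hits / n,
        "functional_pass_rate": func_hits / n,
    }


scores = evaluate(stub_model, EXAMPLES)
print(scores)
```

In this sketch the third answer passes the functional check but fails the gold match, which is the kind of gap that makes it worth tracking both scores. Swapping `stub_model` for a real model call and the integer check for a task-specific one (for example, running generated code against unit tests) would follow the same shape.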
