Performance Is Not a Checklist

Explains why good Core Web Vitals scores don't guarantee a good user experience, arguing performance is about user goals, not just metrics.

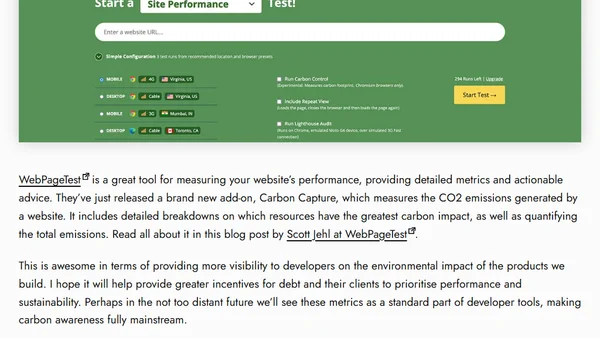

Introduces Carbon Capture, a new WebPageTest add-on for measuring and analyzing a website's estimated CO2 emissions to promote sustainable web development.

A web performance expert announces a return to consulting, focusing on helping organizations build a sustainable performance culture and tools.

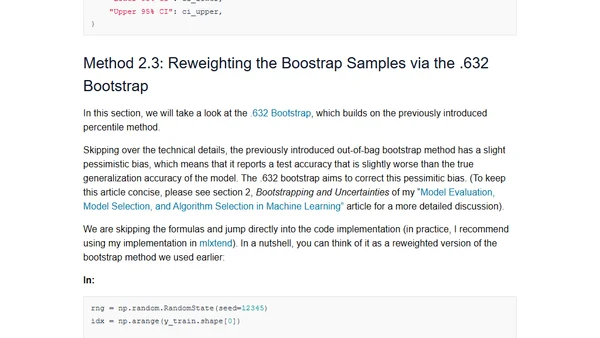

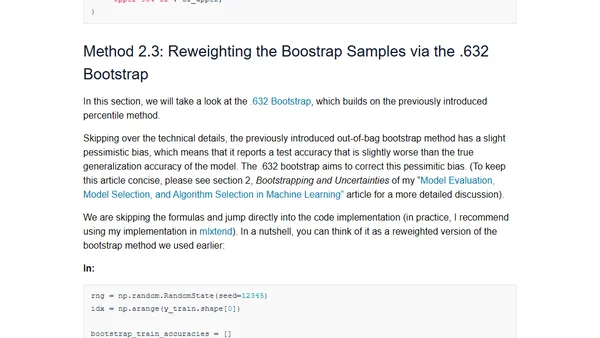

A guide to creating confidence intervals for evaluating machine learning models, covering multiple methods to quantify performance uncertainty.

A technical guide explaining methods for creating confidence intervals to measure uncertainty in machine learning model performance.

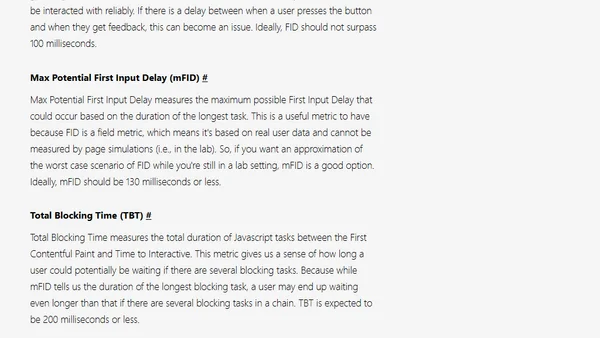

A comprehensive cheatsheet explaining key web performance metrics like LCP, FCP, and TTFB, based on a conference talk.

Philip Walton critiques the web performance community's over-reliance on synthetic metrics, arguing for more nuanced, real-world context in performance discussions.

Summary of key announcements from Chrome Dev Summit 2020, focusing on Core Web Vitals and new CSS performance properties.

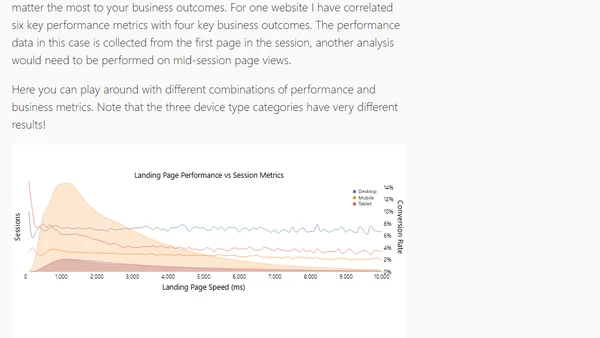

Analyzes Google's Core Web Vitals and other page speed metrics, explaining how to choose and correlate them with business outcomes.

Argues for rebranding 'web performance' to more specific terms like 'site speed' to improve communication and business buy-in.

Argues that achieving the 95th percentile in many activities is not impressive, using examples from video games and real-world skills.

A guide to creating effective performance budgets for web development, focusing on concrete metrics and team integration.

A summary of key talks from Day 2 of the Delta V Conference, focusing on web performance metrics and Progressive Web Apps (PWAs).

Highlights from Day 1 of the Delta V Conference, covering web performance strategies, metrics, and team structures, with talks from Pinterest and others.

Notes from a Pinterest performance team talk on building a dedicated team, custom metrics, regression fighting, and optimization strategies.

The author discusses being interviewed at a performance conference for the Perfbytes podcast about the Visually Complete metric.

A developer shares his journey advocating for and witnessing the launch of Speed Index and Visually Complete metrics in Dynatrace's products.

Key takeaways from Velocity Europe on web performance measurement, focusing on flawed KPIs, user perception, and the pitfalls of gaming metrics.

Analyzes the real-world cost of website data usage globally, factoring in mobile data prices and purchasing power parity.