Anatomize Deep Learning with Information Theory

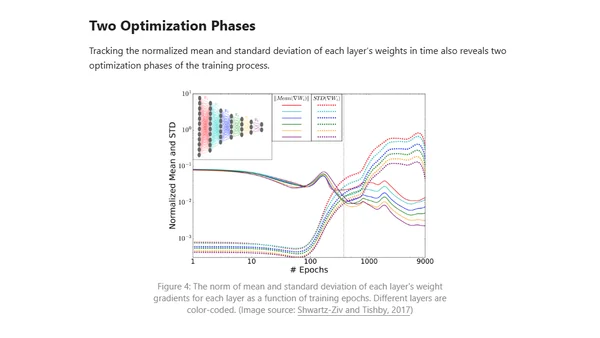

This article summarizes Professor Naftali Tishby's work on using information theory to study deep learning. It explains the Information Bottleneck method, which provides a new learning bound for deep neural networks (DNNs), and describes the two-phase training process in which a network first fits the data and then compresses its representation. It also covers key information theory concepts, including Markov chains, KL divergence, mutual information, and the Data Processing Inequality, in the context of neural networks.
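To make the listed concepts concrete, here is a minimal sketch in plain Python. It assumes small discrete distributions stored as Python lists. The function names (`kl_divergence`, `mutual_information`, `joint_from_channel`, `compose`, `ib_lagrangian`) are hypothetical illustrations, not code from Tishby's work or the original article. The sketch computes KL divergence and mutual information in bits. It then checks the Data Processing Inequality on a toy Markov chain X -> Y -> Z, built from two binary symmetric channels with arbitrarily chosen flip probabilities. Finally, it evaluates the Information Bottleneck objective I(X;T) - beta * I(T;Y) for a given stochastic encoder p(t|x).

```python
import math


def kl_divergence(p, q):
    """D_KL(p || q) in bits for two discrete distributions given as lists.

    Terms with p_i == 0 contribute 0; q_i must be > 0 wherever p_i > 0.
    """
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def mutual_information(joint):
    """I(A;B) in bits from a joint table joint[a][b] = p(a, b).

    Uses the identity I(A;B) = D_KL(p(a, b) || p(a) p(b)).
    """
    p_a = [sum(row) for row in joint]          # marginal over rows
    p_b = [sum(col) for col in zip(*joint)]    # marginal over columns
    flat_joint = [v for row in joint for v in row]
    flat_indep = [pa * pb for pa in p_a for pb in p_b]
    return kl_divergence(flat_joint, flat_indep)


def joint_from_channel(p_x, channel):
    """p(x, y) = p(x) * p(y | x), where channel[x][y] = p(y | x)."""
    return [[px * pyx for pyx in row] for px, row in zip(p_x, channel)]


def compose(channel_a, channel_b):
    """p(z | x) = sum_y p(y | x) p(z | y): chains X -> Y -> Z."""
    n_z = len(channel_b[0])
    return [[sum(row[y] * channel_b[y][z] for y in range(len(row)))
             for z in range(n_z)] for row in channel_a]


def ib_lagrangian(p_x, p_y_given_x, p_t_given_x, beta):
    """Information Bottleneck objective I(X;T) - beta * I(T;Y).

    Assumes the Markov chain Y -> X -> T, so that
    p(t, y) = sum_x p(x) p(y | x) p(t | x).
    """
    i_xt = mutual_information(joint_from_channel(p_x, p_t_given_x))
    n_t, n_y = len(p_t_given_x[0]), len(p_y_given_x[0])
    joint_ty = [[sum(p_x[x] * p_y_given_x[x][y] * p_t_given_x[x][t]
                     for x in range(len(p_x)))
                 for y in range(n_y)] for t in range(n_t)]
    return i_xt - beta * mutual_information(joint_ty)


if __name__ == "__main__":
    p_x = [0.5, 0.5]
    # Binary symmetric channels with flip probabilities 0.1 and 0.2 (arbitrary).
    bsc1 = [[0.9, 0.1], [0.1, 0.9]]
    bsc2 = [[0.8, 0.2], [0.2, 0.8]]
    i_xy = mutual_information(joint_from_channel(p_x, bsc1))
    i_xz = mutual_information(joint_from_channel(p_x, compose(bsc1, bsc2)))
    print(f"I(X;Y) = {i_xy:.4f} bits, I(X;Z) = {i_xz:.4f} bits")
    assert i_xy >= i_xz  # Data Processing Inequality for X -> Y -> Z

    # Identity encoder T = X keeps all information; a constant encoder keeps none.
    identity = [[1.0, 0.0], [0.0, 1.0]]
    constant = [[1.0], [1.0]]
    for name, enc in [("identity", identity), ("constant", constant)]:
        print(name, ib_lagrangian(p_x, bsc1, enc, beta=2.0))
```

Adding a second noisy channel can only lose information about X, which is what the Data Processing Inequality asserts. In the IB objective, beta trades compression (a small I(X;T)) against keeping what is relevant to the label (a large I(T;Y)).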
